
Cognitive components of speech at different time scales

Feng, Ling; Hansen, Lars Kai

Published in:

Twenty-Ninth Meeting of the Cognitive Science Society (CogSci'07)

Publication date:

2007

Document Version

Publisher's PDF, also known as Version of record


Citation (APA):

Feng, L., & Hansen, L. K. (2007). Cognitive components of speech at different time scales. In Twenty-Ninth Meeting of the Cognitive Science Society (CogSci'07) (pp. 983-988). http://www2.imm.dtu.dk/pubdb/p.php?4871

Cognitive Components of Speech at Different Time Scales

Ling Feng (lf@imm.dtu.dk)

Informatics and Mathematical Modelling

Technical University of Denmark

2800 Kgs. Lyngby, Denmark

Lars Kai Hansen (lkh@imm.dtu.dk)

Informatics and Mathematical Modelling

Technical University of Denmark

2800 Kgs. Lyngby, Denmark

Abstract

Cognitive component analysis (COCA) is defined as unsupervised grouping of data leading to a group structure well-aligned with that resulting from human cognitive activity. We focus here on speech at different time scales, looking for possible hidden ‘cognitive structure’. Statistical regularities have earlier been revealed at multiple time scales corresponding to phoneme, gender, height and speaker identity. We here show that the same simple unsupervised learning algorithm can detect these cues. Our basic features are 25-dimensional short-time Mel-frequency weighted cepstral coefficients, assumed to model the basic representation of the human auditory system. The basic features are aggregated in time to obtain features at longer time scales. Simple energy based filtering is used to achieve a sparse representation. Our hypothesis is basically ecological: we hypothesize that features that are essentially independent in a reasonable ensemble can be efficiently coded using a sparse independent component representation. The representations are indeed shown to be very similar between supervised learning (invoking cognitive activity) and unsupervised learning (statistical regularities), hence lending additional support to our cognitive component hypothesis.

Keywords: Cognitive component analysis; time scales; energy based sparsification; statistical regularity; unsupervised learning; supervised learning.

Introduction

The evolution of human cognition is an on-going interplay between statistical properties of the ecology, the process of natural selection, and learning. Robust statistical regularities will be exploited by an evolutionarily optimized brain (Barlow, 1989). Statistical independence may be one such regularity, which would allow the system to take advantage of factorial codes of much lower complexity than those pertinent to the full joint distribution. In (Wagensberg, 2000), the success of given ‘life forms’ is linked to their ability to recognize independence between predictable and unpredictable processes in a given niche. This refines the classical Darwinian paradigm by arguing that natural selection simply favors innovations which increase the independence of the agent and unpredictable processes. The agent can be an individual or a group. The resulting human cognitive system can model complex multi-agent scenery, and use a broad spectrum of cues for analyzing perceptual input and for identification of individual signal producing processes.

The optimized representations for low level perception are indeed based on independence in relevant natural ensemble statistics. This has been demonstrated by a variety of independent component analysis (ICA) algorithms, whose representations closely resemble those found in natural perceptual systems. Examples include visual features (Bell & Sejnowski, 1997; Hoyer & Hyvärinen, 2000) and sound features (Lewicki, 2002).

In an attempt to generalize these findings to higher cognitive functions, we proposed and tested the independent cognitive component hypothesis, which basically asks the question: Do humans also use information theoretically optimal ICA methods in more generic and abstract data analysis? Cognitive component analysis (COCA) is thus simply defined as the process of unsupervised grouping of abstract data such that the ensuing group structure is well-aligned with that resulting from human cognitive activity (Hansen, Ahrendt, & Larsen, 2005). For the preliminary research on COCA, human cognitive activity is restricted to the human labels in supervised learning methods. This interpretation is not comprehensive; however, it is capable of representing some intrinsic mechanisms of human cognition. Furthermore, COCA is not limited to one specific technique, but is rather a conglomerate of different techniques. We envision that efficient representations of high level processes are based on sparse distributed codes and approximate independence, similar to what has been found for more basic perceptual processes. As mentioned, independence can dramatically simplify the perception-to-action mappings by using factorial codes rather than complex codes based on the full joint distribution. Hence, it is a natural starting point to look for high-level statistically independent features when aiming at high-level representations. In this paper we focus on cognitive processes in digital speech signals. The paper is organized as follows: First we discuss the specifics of the cognitive component hypothesis in relation to speech, then we describe our specific methods, present results obtained for the TIMIT database, and finally, we conclude and draw some perspectives.

Cognitive Component Analysis

In sensory coding it is proposed that visual system is near

to optimal in representing natural scenes by invoking ‘sparse

distributed’ coding (Field, 1994). The sparse signal consists

of relatively few large magnitude samples in a background

of numbers of small signals. When mixing such indepen-

−100 −50 0 50 100 150

−60

−40

−20

0

20

40

60

x

1

x

2

−20 −15 −10 −5 0 5 10 15 20

−15

−10

−5

0

5

10

15

x

1

x

2

0 0.5 1 1.5 2 2.5 3 3.5 4 4.5 5

0

0.5

1

1.5

2

2.5

3

3.5

4

x

1

x

2

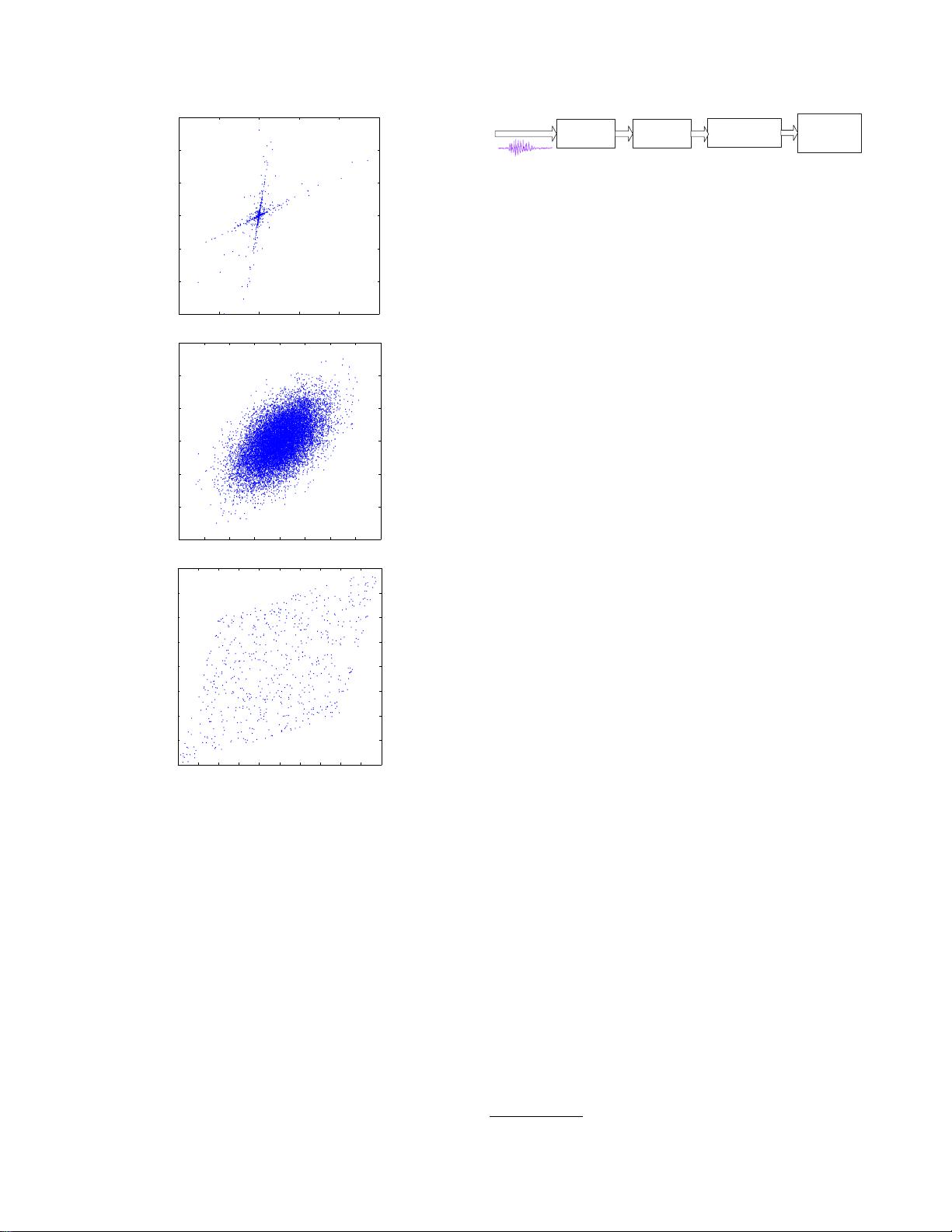

Figure 1: Prototypical feature distributions produced by a lin-

ear mixture, based on sparse (top), normal (middle), or dense

source signals (bottom), respectively. The characteristics of

a sparse signal is that it consists of relatively few large mag-

nitude samples on a background of weak signals, hence, pro-

duces a characteristic ray structure in which the ray is defined

by the vector of linear mixing coefficients: One for each for a

sparse source.

dent sparse signals in a simple linear mixing process, we ob-

tain the ‘ray structure’ which we consider emblematic for our

approach, see the top panel in Figure 1. If a signal repre-

sentation exists with a ray structure ICA can be used to re-

cover both the line directions (mixing coefficients) and the

original independent sources signals. Thus, we used ICA to

model the ray structure and represent semantic structure in

text, social networks, and other abstract data such as music

Speech signal

25 MFCCs with

20ms & 50% overlap

retains ?% energy

Energy Based

Sparsification

Principal

Component

Analysis

Fe a ture

Integration

Fe a ture

Extraction

Figure 2: Preprocessing pipeline for speech COCA. MFCCs

are extracted at the basic time scale (20ms). According to

applications, features are averaged/stacked into longer time

scales. Energy based sparsification is followed as a method

to reduce intrinsic noise. PCA on sparsified features projects

on a relevant subspace that makes it possible to visualize the

‘ray’-structure. A subsequent ICA can be used to identify the

actual ray coordinates and source signals.

(Hansen et al., 2005; Hansen & Feng, 2006). Within so-

called bag-of-words representations of text, COCA is a gen-

eralization of principal component analysis based ‘latent se-

mantic analysis’ (LSA), originally developed for information

retrieval on text (Deerwester, Dumais, Furnas, Landauer, &

Harshman, 1990). The key observation is that by using ICA,

rather than PCA, we are not restricted to orthogonal basis

vectors. Hence, in ICA based latent semantic analysis topic

vocabularies can have large overlaps. We envision that these

implemented by overlapping receptive fields can detect more

subtle differences than ‘orthogonal’ receptive fields.
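The ray structure produced by a linear mixture of sparse sources (top panel of Figure 1) can be illustrated numerically. The following is a minimal sketch and not the procedure used in this paper: the mixing matrix, the Laplace amplitudes, the 5% activity probability, and the helper names `sparse_sources` and `ray_alignment` are all assumptions chosen for illustration.

```python
import numpy as np

def sparse_sources(n_samples, n_sources, active_prob, rng):
    """Draw sources that are mostly zero, with occasional large values."""
    amplitudes = rng.laplace(scale=1.0, size=(n_sources, n_samples))
    active = rng.random((n_sources, n_samples)) < active_prob
    return amplitudes * active

def ray_alignment(x, mixing):
    """|cosine| between each non-zero observation and its closest mixing column."""
    directions = mixing / np.linalg.norm(mixing, axis=0, keepdims=True)
    norms = np.linalg.norm(x, axis=0)
    keep = norms > 0
    unit_x = x[:, keep] / norms[keep]
    return np.abs(directions.T @ unit_x).max(axis=0)

rng = np.random.default_rng(0)
mixing = np.array([[1.0, 0.3],
                   [0.2, 1.0]])   # hypothetical mixing matrix; each column is one ray
s = sparse_sources(5000, 2, active_prob=0.05, rng=rng)
x = mixing @ s                    # observed features: linear mixture of sparse sources
alignment = ray_alignment(x, mixing)
# Fraction of non-zero observations lying (almost) exactly on one of the rays.
print(np.mean(alignment > 0.99))
```

Because the sources are rarely active at the same time, most non-zero observations are scalar multiples of a single mixing column, which is what shows up as rays in a scatter plot.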

Here we elaborate on our earlier findings related to speech. The basic preprocessing pipeline for COCA of speech is shown in Figure 2. First, basic features are extracted from a digital speech signal, leading to a fundamental representation that shares two basic aspects with the human auditory system: a logarithmic dependence on signal power and a simple bandwidth-to-center frequency scaling so that the frequency resolution is better at lower frequencies. These so-called mel-frequency cepstral coefficient¹ (MFCC) features are next aggregated in time. Simple energy based filtering leads to sparse representations. Sparsification is regarded as a simple means to emulate a saliency based attention process.

We have earlier reported our preliminary findings of ICA ray structure related to phonemes and speaker identity in a relatively small database (Feng & Hansen, 2005, 2006). Figure 3 illustrates the phoneme relevant ray structure at the basic time scale. This analysis was carried out on four simple utterances: ‘s’, ‘o’, ‘f’ and ‘a’. As shown in the figure, a cognitive component associated with the /e/ phoneme that opens ‘s’ and ‘f’ is identified.

We speculate that these phoneme-relevant cognitive components contribute towards the well-known basic invariant ‘cue’ characteristics of speech (Blumstein & Stevens, 1979). The theory of acoustic invariants points out that the perceived signals are derived as stable phonetic features despite the different acoustic properties produced by different speakers. Moreover, Damper has shown that although the speech signal may vary due to coarticulation, the relation between key features follows a consistent and invariant form (Damper, 1998). Experiments involving labels related to speaker identification also provided the signature of linear ‘ray’-structures. Is linearity related to perceptually distinguishable categories? The discussion on linear correlations in the speech signal and locus equations is still on-going (Sussman, Fruchter, Hilbert, & Sirosh, 1998).

¹ For a complete description of MFCC and related cepstral coefficients, see (Deller, Hansen, & Proakis, 2000).

[Figure 3 scatter plot: sparsified features (|z| > 1.7) projected onto PC1 (horizontal axis) and PC2 (vertical axis); points are labelled ‘s’, ‘o’, ‘f’ and ‘a’, with a zoom-in inset.]

Figure 3: The latent space is formed by the first two principal components of data consisting of four separate utterances representing the sounds ‘s’, ‘o’, ‘f’, ‘a’. The structure clearly shows the sparse component mixture, with ‘rays’ emanating from the origin (0,0). The ray enclosed in a rectangle contains a mixture of ‘s’ and ‘f’ features, a cognitive component associated with the vowel /e/ sound.

In the course of our search for spoken cognitive components, we have thus already reported (Feng & Hansen, 2005, 2006) on generalizable phoneme relevant components at a time scale of 20 ∼ 40ms, and generalizable speaker specific components at an intermediate time scale of 1000ms.

In this paper we further expand on our findings in speech by applying COCA to speech features at various time scales. We systematically investigate the performance of unsupervised and supervised learning and test whether the tasks are learned in equivalent representations, hence indicating consistency of statistical regularities (unsupervised learning) and human cognitive processes (supervised learning of human labels).

Methods

Our speech analysis follows the basic preprocessing scheme

shown in Figure 2.

Feature Stacking

Since speech signals are non-stationary, features have to be extracted at short time scales. A simple method to get features at longer time scales is stacking, or vector ‘concatenation’, of signals. Figure 4 illustrates the stacking procedure used in our experiments.

[Figure 4 diagram: frames of 10-40ms pass through MFCC feature extraction to give 25-by-1 vectors, which are stacked into 25*N-by-1 vectors forming the feature matrix.]

Figure 4: Speech feature extraction and stacking

1. Truncate the speech signal into overlapping frames, 20ms long with 50% overlap;

2. Apply a Hamming window to each frame;

3. Extract MFCCs from each windowed frame, forming a 25-dimensional vector;

4. According to the time scale, stack N original 25-dimensional MFCC vectors into one 25 ∗ N-dimensional vector;

5. Repeat step 4 until all the frames are stacked.

The 25 ∗ N-dimensional features representing long time scales are then used in both supervised and unsupervised learning methods.
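The framing and stacking steps above can be sketched as follows. This is an illustration under stated assumptions rather than the code used in the experiments: the 16 kHz sampling rate, the non-overlapping grouping of N consecutive frames, and the helper names `frame_signal` and `stack_features` are assumptions, and the MFCC extraction of step 3 is replaced by random stand-in vectors so that the example stays self-contained.

```python
import numpy as np

def frame_signal(signal, sample_rate, frame_ms=20.0, overlap=0.5):
    """Steps 1-2: cut the signal into overlapping frames and apply a Hamming window."""
    frame_len = int(round(sample_rate * frame_ms / 1000.0))
    hop = int(round(frame_len * (1.0 - overlap)))
    n_frames = 1 + (len(signal) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    return signal[idx] * np.hamming(frame_len)

def stack_features(features, n_stack):
    """Steps 4-5: concatenate every n_stack consecutive rows into one long vector.

    features has shape (n_frames, d); trailing frames that do not fill a
    complete group are dropped (an assumption of this sketch).
    """
    n_groups = features.shape[0] // n_stack
    trimmed = features[: n_groups * n_stack]
    return trimmed.reshape(n_groups, n_stack * features.shape[1])

sample_rate = 16000                                  # assumed sampling rate in Hz
rng = np.random.default_rng(0)
signal = rng.standard_normal(sample_rate)            # one second of stand-in audio
frames = frame_signal(signal, sample_rate)
mfcc = rng.standard_normal((frames.shape[0], 25))    # stand-in for step 3 (real MFCCs)
stacked = stack_features(mfcc, n_stack=5)
print(frames.shape, stacked.shape)                   # (99, 320) (19, 125)
```

With 20ms frames and a 10ms hop, N stacked frames span roughly 10(N + 1) ms, so N = 5 corresponds to about 60ms of speech.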

Mixture of Factor Analyzers

To test whether supervised and unsupervised learning lead to similar representations we need a model that can incorporate both. In particular we need a generative representation to allow unsupervised learning, and we want the representation to allow sparse linear ray-like features. This can be achieved with a simple generalization of the so-called mixture of factor analyzers (MFA). The unsupervised version is inspired by the so-called Soft-LOST (Line Orientation Separation Technique) (O’Grady & Pearlmutter, 2004).

Factor analysis is one of the basic forms of dimensionality reduction. It models the covariance structure of multi-dimensional data by expressing the correlations in a lower dimensional latent subspace. The mathematical expression is

$$x = \Lambda z + u, \qquad (1)$$

where $x$ is the $p$-dimensional observation; $\Lambda$ is the factor loading matrix; $z$ is the $k$-dimensional hidden factor vector, which is assumed Gaussian distributed, $\mathcal{N}(z|0, I)$; and $u$ is the independent noise, distributed as $\mathcal{N}(u|0, \Psi)$ with a diagonal matrix $\Psi$. Given eq. (1), observations are also Gaussian distributed, $\mathcal{N}(x|0, \Sigma)$ with $\Sigma = \Lambda\Lambda^{T} + \Psi$. Factor analysis aims at estimating $\Lambda$ and $\Psi$ in order to give a good approximation of the covariance structure of $x$.
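The covariance identity implied by eq. (1) can be checked numerically. The sketch below samples from the factor analysis model with arbitrarily chosen dimensions and parameters (p = 5, k = 2, and random loadings and noise variances are assumptions for illustration only) and compares the sample covariance with the model covariance.

```python
import numpy as np

rng = np.random.default_rng(1)
p, k, n = 5, 2, 200_000                       # illustrative sizes, not from the paper

Lambda = rng.standard_normal((p, k))          # factor loading matrix
psi = rng.uniform(0.1, 0.5, size=p)           # diagonal of the noise covariance Psi

z = rng.standard_normal((k, n))                           # hidden factors, N(0, I)
u = rng.standard_normal((p, n)) * np.sqrt(psi)[:, None]   # independent noise, N(0, Psi)
x = Lambda @ z + u                                        # eq. (1), one column per sample

sigma_model = Lambda @ Lambda.T + np.diag(psi)   # covariance implied by the model
sigma_sample = x @ x.T / n                       # zero-mean sample covariance
print(np.max(np.abs(sigma_sample - sigma_model)))
```

The printed deviation should be small compared with the entries of the model covariance and should shrink further as n grows.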

While the simple factor analysis model is globally linear and Gaussian, we can model non-linear non-Gaussian processes by invoking a so-called mixture of factor analyzers

$$p(x) = \sum_{i=1}^{K} \int p(x|i, z)\, p(z|i)\, p(i)\, dz, \qquad (2)$$

where $p(i)$ are the mixing proportions and $K$ is the number of factor analyzers. MFA combines factor analysis and the Gaussian mixture model, and hence can simultaneously perform clustering and dimensionality reduction within each cluster; see (Ghahramani & Hinton, 1996) for a detailed review.

To meet our need for an unsupervised learning model, MFA is modified to form an ICA-like line based density model similar to Soft-LOST by reducing the factor loadings to a single column vector, i.e., the ‘ray’ vector. It uses an EM procedure to identify orientations within a scatter plot. In the E-step, all observations are soft assigned to $K$ clusters, one per mixture component, each represented by an orientation vector $v_i$; that is, the E-step calculates the posterior probabilities assigning data points to lines. In the M-step, covariance matrices are calculated for the $K$ clusters, and the principal eigenvectors of the covariance matrices are used as the new line orientations $v_i^{\mathrm{new}}$; by this means the lines are re-positioned to match the points assigned to them. Finally we end up with a mixture of lines which can be used as a classifier. We propose a supervised mode of the modified MFA, which models the joint distribution of the feature set $x$ and a possible label set $y$:

$$p(x, y) = \sum_{i=1}^{K} \int p(x|i, z)\, p(z)\, dz\; p(y|i)\, p(i). \qquad (3)$$
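The E-step and M-step described above can be sketched as a simplified line-fitting loop in the spirit of Soft-LOST. It is not the modified MFA used in this paper. The soft assignment based on squared distance to each line, the sharpness parameter `beta`, the fixed iteration count, the synthetic two-ray data, and the function names are all assumptions made for illustration. The outcome of such a procedure depends on the initial orientations, so the demo starts from the coordinate axes.

```python
import numpy as np

def line_responsibilities(x, v, beta):
    """E-step: soft-assign each row of x to the lines spanned by the unit rows of v."""
    proj = x @ v.T                                           # (n, K) projections
    sq_dist = np.sum(x**2, axis=1, keepdims=True) - proj**2  # squared distance to each line
    logits = -beta * np.maximum(sq_dist, 0.0)
    logits -= logits.max(axis=1, keepdims=True)              # numerical stability
    resp = np.exp(logits)
    return resp / resp.sum(axis=1, keepdims=True)

def fit_lines(x, v_init, beta=20.0, n_iter=30):
    """Alternate soft assignment and principal-eigenvector updates."""
    v = v_init / np.linalg.norm(v_init, axis=1, keepdims=True)
    for _ in range(n_iter):
        resp = line_responsibilities(x, v, beta)
        for i in range(v.shape[0]):
            # M-step: weighted covariance of the points assigned to line i.
            w = resp[:, i]
            cov = (x * w[:, None]).T @ x / max(w.sum(), 1e-12)
            _, eigvecs = np.linalg.eigh(cov)                 # eigenvalues in ascending order
            v[i] = eigvecs[:, -1]                            # principal eigenvector
    return v, line_responsibilities(x, v, beta)

# Synthetic data: every point lies near one of two rays through the origin.
rng = np.random.default_rng(0)
true_dirs = np.array([[1.0, 0.2], [0.3, 1.0]])
true_dirs /= np.linalg.norm(true_dirs, axis=1, keepdims=True)
owner = rng.integers(0, 2, size=2000)
amplitude = rng.laplace(size=2000)
x = amplitude[:, None] * true_dirs[owner] + 0.02 * rng.standard_normal((2000, 2))

v_hat, resp = fit_lines(x, v_init=np.eye(2))
# |cosine| between each estimated orientation and the corresponding true ray.
print(np.abs(np.sum(v_hat * true_dirs, axis=1)))
```

The sign of each recovered orientation is arbitrary, which is why the comparison uses the absolute cosine.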

In the sequel we compare the performance of the two modes of modified MFA at multiple time scales. In particular we train supervised and unsupervised models on the same feature set. For the unsupervised model we first train using only the features $x$. When the density model is optimal we clamp the mixture density model and train only the cluster tables $p(y|i)$, $i = 1, ..., K$, using the training set labels. This is also referred to as unsupervised-then-supervised learning. This is a simple protocol for checking the cognitive consistency: Do we find the same representations when we train them with and without using ‘human cognitive labels’?
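One way to train the cluster tables with a clamped density model, and to use them for classification, is sketched below. The estimator (responsibility-weighted label counts) and the names `fit_cluster_tables` and `label_posterior` are assumptions for illustration; the text does not specify how the tables were estimated. The label posterior follows by marginalizing over components, $p(y|x) = \sum_i p(y|i)\, p(i|x)$.

```python
import numpy as np

def fit_cluster_tables(resp, labels, n_classes):
    """Estimate p(y|i) from fixed posteriors p(i|x_n) and training labels.

    resp has shape (n, K); labels holds integer class indices of shape (n,).
    Returns a (K, n_classes) table whose rows sum to one.
    """
    one_hot = np.eye(n_classes)[labels]                  # (n, n_classes)
    counts = resp.T @ one_hot                            # soft label counts per component
    return counts / np.maximum(counts.sum(axis=1, keepdims=True), 1e-12)

def label_posterior(resp_new, tables):
    """p(y|x) = sum_i p(y|i) p(i|x) for each row of resp_new."""
    return resp_new @ tables

# Toy posteriors for four training points and two mixture components.
resp = np.array([[0.9, 0.1],
                 [0.8, 0.2],
                 [0.1, 0.9],
                 [0.2, 0.8]])
labels = np.array([0, 0, 1, 1])

tables = fit_cluster_tables(resp, labels, n_classes=2)
print(tables)                                            # rows: [0.85, 0.15] and [0.15, 0.85]
print(label_posterior(np.array([[0.7, 0.3]]), tables))   # [[0.64, 0.36]]
```

Only the tables depend on the labels here; the component posteriors come from the density model, which stays fixed.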

Results

In this section we present experimental results of the analysis of speech signals gathered from the TIMIT database (Garofolo et al., 1993). TIMIT is a read speech corpus designed for the acquisition of acoustic-phonetic knowledge and for automatic speech recognition systems. It contains a total of 6300 sentences, 10 sentences spoken by each of 630 speakers from the United States. For each utterance we have several labels that we think of as cognitive indicators, labels that humans can infer given a sufficient amount of data. While each sentence lasts approximately 3s, we investigate performance at time scales ranging from the basic 20ms to about 1000ms. The cognitive labels we focus on here are phonemes, gender, height and speaker identity. Training and test sets are recommended in TIMIT, with 462 speakers for training and 168 for test. The total speech covers 59 phonemes, and the heights of all speakers range from 4′9′′ to 6′8′′, with 22 different values in total. In order to gather a sufficient amount of speech signals we chose 46 speakers with equal gender distribution, whose speech signals cover all 59 phonemes and all 22 heights.

Following the preprocessing pipeline, we first extracted 25-dimensional MFCCs from the original digital speech signals. To investigate various time scales, we stacked basic features into a variety of time scales, from the basic 20ms scale up to 1100ms. Energy based sparsification was used afterwards as a means to reduce the intrinsic noise and to obtain sparse signals. Sparsification is done by thresholding the amplitude of stacked MFCC coefficients, and only coefficients with super-threshold energy were retained. By adjusting the threshold, we examine the role of sparsification in our experiments. We changed the threshold, leading to a retained energy from 100% down to 41%. Unsupervised and supervised modes of MFA were then applied respectively. To classify a new datum point $x_{\mathrm{new}}$ we first calculate the set of $p(i|x_{\mathrm{new}})$’s and then compute the posterior label probability.
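The energy based sparsification described above, and the retained-energy figure it is reported with, can be sketched as follows. This is a minimal illustration under assumptions: sub-threshold coefficients are set to zero individually, retained energy is measured as the ratio of squared sums, and the function names and toy values are hypothetical.

```python
import numpy as np

def sparsify(features, threshold):
    """Keep coefficients whose magnitude exceeds the threshold; zero the rest."""
    return features * (np.abs(features) > threshold)

def retained_energy(features, threshold):
    """Fraction of the total squared magnitude that survives thresholding."""
    sparse = sparsify(features, threshold)
    return np.sum(sparse**2) / np.sum(features**2)

features = np.array([[3.0, -0.5],
                     [0.2, -4.0]])            # toy stand-in for stacked MFCC features
print(sparsify(features, threshold=1.0))      # only 3.0 and -4.0 survive
print(retained_energy(features, threshold=1.0))
print(retained_energy(features, threshold=0.0))
```

Raising the threshold lowers the retained energy, so a target such as 41% could be reached by searching over the threshold.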

Figure 5 presents the results of MFA for gender detection. The two plots (a) and (b) show the error rates for the supervised mode of MFA for the training and test set separately, while (c) and (d) are training and test error rates for unsupervised MFA (Soft-LOST). First, we note that sparsification does play a role: when a high percentage of features was retained after sparsification, e.g. 100% and 99.8%, error rates did not change much with increasing time scale, meaning that the intrinsic noise covers up the informative part, and longer time scales do not help to recover it. As the time scale increases, all the curves tend to converge at a time scale of around 400 ∼ 500ms.