Prototyping an Intelligent Agent through Wizard of Oz

David Maulsby

Saul Greenberg

Richard Mander

1993

Cite as:

Maulsby, D., Greenberg, S. and Mander, R. (1993) “Prototyping an intelligent agent through Wizard of Oz.” In ACM SIGCHI Conference on Human Factors in Computing Systems, Amsterdam, The Netherlands, May, p277-284, ACM Press.

An earlier working paper was published as Research Report 92/489/27, Dept of Computer Science, University of Calgary, Calgary, Canada (1992).


David Maulsby * Saul Greenberg * Richard Mander

*

Department of Computer Science

University of Calgary

Calgary Alberta T2N 1N4 Canada

maulsby or saul@cpsc.ucalgary.ca

+01 403-220-6087

Human Interface Group, Apple Computer Inc.

20525 Mariani Ave.

Cupertino CA 95014 USA

mander@appleLink.apple.com

+01 408-974-8136

ABSTRACT

Turvy is a simulated prototype of an instructible agent. The

user teaches it by demonstrating actions and pointing at or

talking about relevant data. We formalized our assumptions

about what could be implemented, then used the Wizard of

Oz to flesh out a design and observe users’ reactions as they

taught several editing tasks. We found: a) all users invent a

similar set of commands to teach the agent; b) users learn

the agent’s language by copying its speech; c) users teach

simple tasks with ease and complex ones with reasonable

effort; and d) agents cannot expect users to point to or

identify critical features without prompting.

In conducting this rather complex simulation, we learned

some lessons about using the Wizard of Oz to prototype

intelligent agents: a) design of the simulation benefits greatly

from prior implementation experience; b) the agent’s

behavior and dialog capabilities must be based on formal

models; c) studies of verbal discourse lead directly to an

implementable system; d) the designer benefits greatly by

becoming the Wizard; and e) qualitative data is more

valuable for answering global concerns, while quantitative

data validates accounts and answers fine-grained questions.

KEYWORDS: Intelligent agent, instructible system,

programming by demonstration, Wizard of Oz, prototyping

INTRODUCTION

We used the Wizard of Oz method to test a new design for

an instructible agent. In this paper we describe how end

users learned to teach a simulated agent called Turvy, in

particular the set of instructions and commands they adopted.

These findings will be valuable to implementors of

programming by demonstration systems. We also explore the

lessons we learned using the method, which experimenters

can apply in their own studies of intelligent interfaces.

These lessons differ from other Wizard of Oz experiences in

being oriented towards prototyping an implementable

system, rather than a proof of concept.

The paper begins with a brief discussion of intelligent

agents and the Wizard of Oz method. It then describes the

Turvy experiment and results, and ends with a retrospective

on our use of Wizard of Oz.

Intelligent agents

When given a goal, [an intelligent agent] could carry out

the details of the appropriate computer operations and

could ask for and receive advice, offered in human terms,

when it was stuck. –Alan Kay (1984)

Intelligent interface agents have been touted as a significant

new direction in user interface design. Videos from Apple

and Hewlett-Packard show futuristic interfaces in which

agents play a dominant role, serving as computerized office

clerks, database guides and writing advisors. Reality is a bit

behind, but prototype intelligent agents have been

implemented by researchers. For example, Eager (Cypher,

1991) detects and automates a user’s repetitive actions in

HyperCard; it matches examples by parsing text strings and

by testing numerical relationships. Metamouse (Maulsby,

1989) learns drawing tasks from demonstrations; it applies

rules to find significant graphical constraints.

Yet today’s agents are “intelligent” in the narrowest sense

of the word. They understand only specialized or highly

structured task domains, and lack flexibility in conversing

with users. Kay (1984) suggests that agents should be

illusions that mirror the user’s intelligence while restricting

the user’s agenda. Unfortunately, because most work on

agents stems from the field of Artificial Intelligence, users’

needs are second to algorithm development. While the

systems prove that particular approaches can be codified in

algorithms, they rarely pay more than lip service to the

usability tradition of Human-Computer Interaction. As a

result, they tend to fail as interfaces.

Agents must be designed around our understanding of what

people require and expect of them. However, the traditional

approach of system building is an expensive and unlikely

way to gain this understanding. The underlying discourse

models and algorithms for agents are usually so complex

and entrenched with assumptions that changes—even minor

ones—may require radical redesign. Moreover, because

agents act as intermediaries between people and their

applications, the designer must craft and debug the

agent/application interface as well. A viable alternative to

system building is Wizard of Oz.

Wizard of Oz

Wizard of Oz is a rapid-prototyping method for systems

costly to build or requiring new technology (Wilson and

Rosenberg, 1988; Landauer, 1987). A human “Wizard”

simulates the system’s intelligence and interacts with the

user through a real or mock computer interface.

Most Wizard of Oz experiments establish the viability of

some futuristic (but currently unimplementable) approach

to interface design. An example is the use of complex user

input like speech. Gould et al. (1982), who pioneered the

method, simulated an imperfect listening typewriter to find

out whether it would satisfy people used to giving

dictation. Similarly, Hauptmann (1989) tested users’

preferences for manipulating graphic images through

gesture and speech, by simulating the recognition devices.

Other Wizard of Oz experiments concentrated more on

human behavior than futuristic systems. Hill and Miller

(1988), for example, investigated the complexities of

intelligent on-line help by observing interaction between

users of a statistical package and a human playing the role

of help system. Likewise, Dahlbäck, Jönsson and

Ahrenberg (1993) studied differences between human-human

and human-computer discourse through a variety of feigned

natural language interfaces. In another experiment, Leiser

(1989) showed that people can be led to use a language

understood by the computer through convergence, a

phenomenon of human dialog in which participants

unconsciously adopt one another’s speech patterns. When users

typed a natural language database query, the Wizard, using

only certain terms and syntactic forms, would verify it by

paraphrasing. Convergence occurred when users adopted

those same terms in their queries. We will return to this

theme in our discussion of “TurvyTalk”.

THE TURVY EXPERIMENT

Our research concerns both the technical and usability

aspects of programming by demonstration. Previously

implemented systems have had problems with competence

or usability; we had in mind a more general purpose, easily

instructed system that would be nonetheless practical to

implement in the near future. The system we envisioned

starts with easily coded, primitive knowledge of datatypes

and relations, and more specialized knowledge defined by

application authors. It then learns higher-level constructs

and procedures specific to the individual user’s work. For

practicality, it must learn under the user’s guidance, so it

needs an intuitive and flexible teaching interface. We decided

that an agent metaphor would help us explore the design

issues; the agent is Turvy.

We wanted to see how end users would teach an agent with

perfect memory and primitive knowledge. We wanted to see

how they structured lessons and whether they could focus

its attention correctly. Would they be able to translate

cultural concepts (like surname) into syntactic search

patterns (like capitalized word after Mr. or Ms.), and how

could Turvy minimize the annoyance of doing so? What

kinds of instructions and commands would they use, and

what wording? Could verbal input, based on pseudo-natural

language or even keyword-spotting, work in conjunction

with pointing? We decided to use a Wizard of Oz simulation

to investigate these issues, involving end users up front,

before making too many commitments in our design.

Using the Wizard of Oz to prototype programming by

demonstration makes unusual demands on the Wizard, and

we had some special concerns. We wanted to simulate a

“buildable” Turvy, so we designed a formal model of the

learning system and required the Wizard to obey it. We

wanted to separate the agent’s behavior (what it can

understand and how it can react) from the illusion it

presents in the interface, so we let users explore Turvy’s

abilities through discovery, finding its limits as it

responded to their own commands and teaching methods.

Finally, we wanted detailed qualitative and quantitative

results, so we designed a set of tasks to reveal interaction

problems, videotaped sessions, and interviewed the users.

Motivation: previous implementation experience

We had completed a user study of Metamouse, a fully

implemented programming by demonstration system

personified by an agent named Basil. Basil learns repetitive

graphical edits, such as aligning or sorting by height,

provided the user makes all relevant spatial relations visible

by drawing construction lines. In that study we gave users

six tasks, all of which Basil could learn in principle. But in

practice, Basil frustrated users’ attempts to teach it. First,

though they discerned the need for constructions, users did

not grasp Basil’s strict procedural interpretation, and they

sometimes used a line to suggest a relation rather than

define it. Second, users didn’t think it possible to construct

some features, like height; they would have preferred to say

it in English. Third, they were confused by aspects of the

agent metaphor concerning how it searched for sets of

objects and created branching procedures. Finally,

limitations on Basil’s inferencing ability, and occasional

crashes due to bugs, compounded their problems and made

our observations harder to assess.

We wanted to overcome these deficiencies. We wanted

people to converse with the agent through a multi-modal

dialog, using demonstrations, English, or property sheets.

We wanted people to learn through conversation (verbal or

graphical) what the agent could understand. We wanted a

metaphor that would present the right illusion. We also

knew that a reincarnated Metamouse was not the vehicle for

this study, because we would be shackling ourselves to

ideas embedded in a big system. We turned to Turvy.

Turvy as agent

We made four key assumptions about the sort of agent we

would build, each with consequences for usability. • First,

Turvy learns from a user’s demonstrations, pointing, and

verbal hints. It does not have human-level abilities or

knowledge; like Metamouse, it forms search and result pat-

terns from low-level features, and users must refer to them

during demonstrations. • Second, unlike Metamouse, Turvy

does not equate the user’s demonstration with a procedure;

actions may be interpreted as focusing attention or extend-

ing a pattern, and Turvy can revise an interpretation as more

examples are seen. This enables a dialog where users can

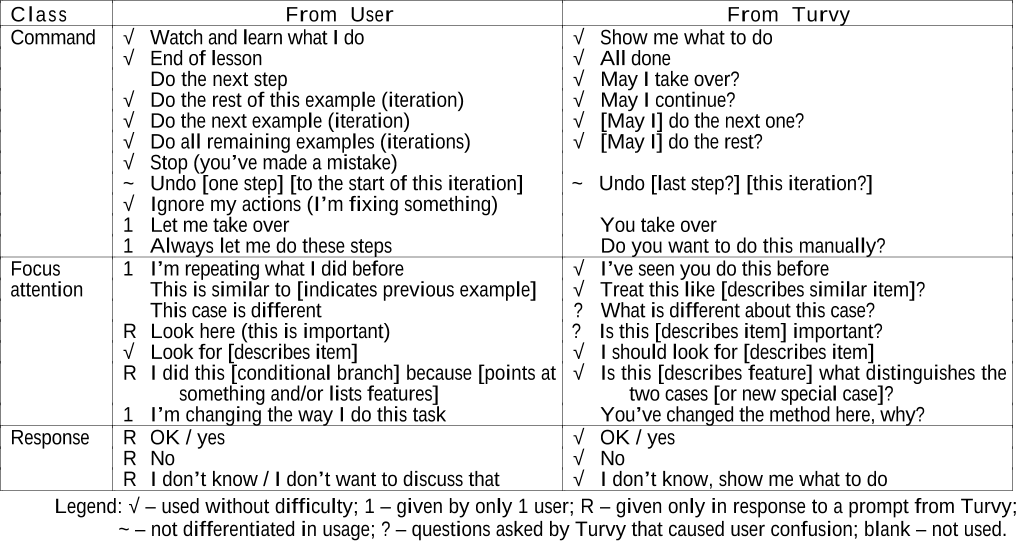

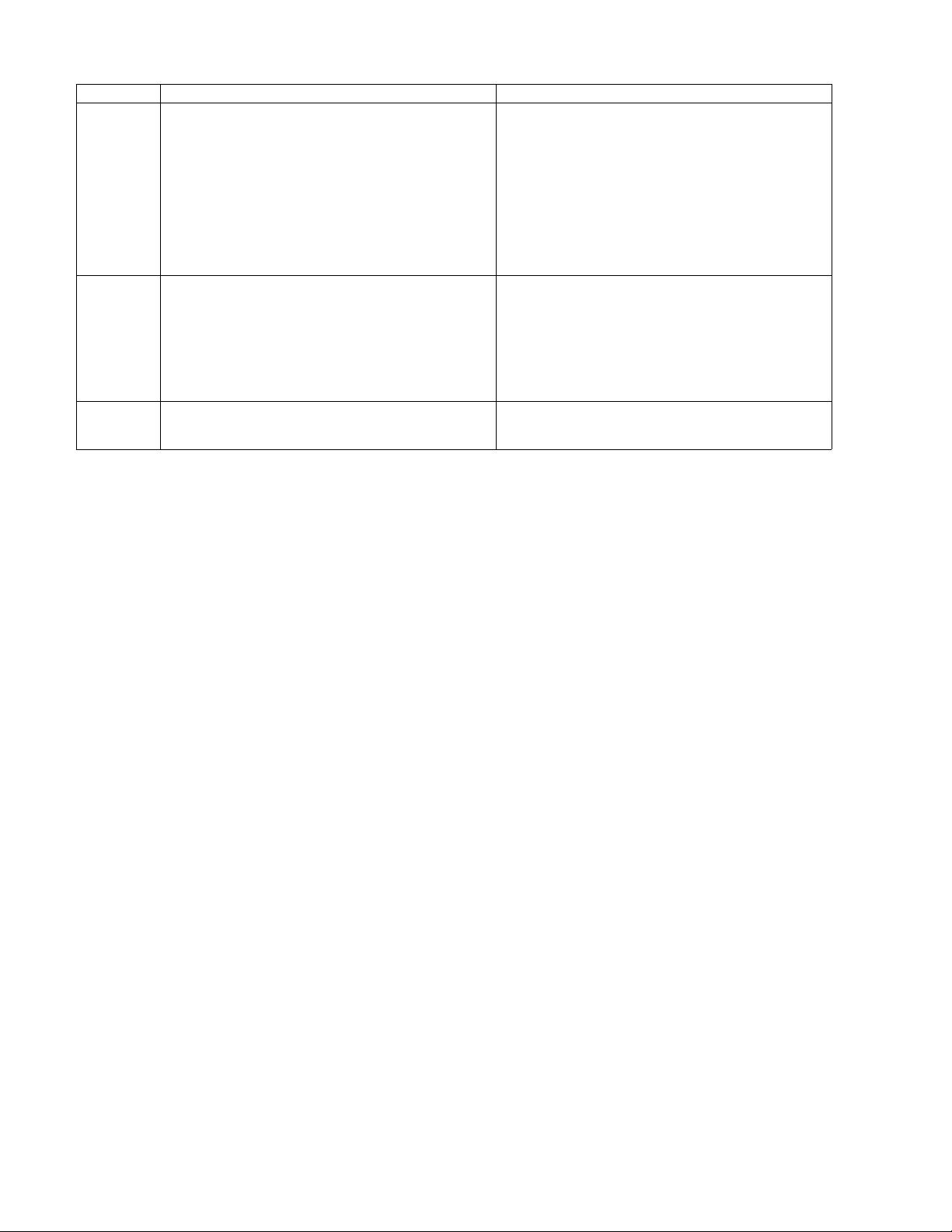

Class From User From Turvy

Command √ Watch and learn what I do √ Show me what to do

√ End of lesson √ All done

Do the next step √ May I take over?

√ Do the rest of this example (iteration) √ May I continue?

√ Do the next example (iteration) √ [May I] do the next one?

√ Do all remaining examples (iterations) √ [May I] do the rest?

√ Stop (you’ve made a mistake)

~ Undo [one step] [to the start of this iteration] ~ Undo [last step?] [this iteration?]

√ Ignore my actions (I’m fixing something)

1 Let me take over You take over

1 Always let me do these steps Do you want to do this manually?

Focus 1 I’m repeating what I did before √ I’ve seen you do this before

attention This is similar to [indicates previous example] √ Treat this like [describes similar item]?

This case is different ? What is different about this case?

R Look here (this is important) ? Is this [describes item] important?

√ Look for [describes item] √ I should look for [describes item]

R I did this [conditional branch] because [points at

something and/or lists features]

√ Is this [describes feature] what distinguishes the

two cases [or new special case]?

1 I’m changing the way I do this task You’ve changed the method here, why?

Response R OK / yes √ OK / yes

RNo √ No

R I don’t know / I don’t want to discuss that √ I don’t know, show me what to do

Legend: √ – used without difficulty; 1 – given by only 1 user; R – given only in response to a prompt from Turvy;

~ – not differentiated in usage; ? – questions asked by Turvy that caused user confusion; blank – not used.

Table 1. Messages used in the Turvy study.

teach new concepts on the fly. • Third, an implementable

Turvy would not have true natural language capabilities,

and our system only recognizes spoken or typed keywords

and phrases, using an application-specific lexicon. This

poses problems for a user of Turvy’s language, for they

must learn this lexicon. Verbal inputs are either commands

(like Stop!) or hints about features (like look for a word

before a colon), where keywords (word, colon) are compared

with actual data at the locus of action to determine their

meaning. This implies that users must accompany verbal

descriptions with editing or pointing actions. • Fourth,

Turvy predicts actions as soon as possible, verbalizing

them on the first run through. Eager prediction gives users

efficient control over learning. Speech output signals the

features Turvy has deemed important, without obscuring

text or graphic data. As a side effect, the users also learn

Turvy’s language.
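The third assumption above (keyword spotting grounded in the data at the locus of action) can be illustrated with a minimal sketch. The lexicon, the command set, and the function name `interpret` are hypothetical and chosen only for illustration; the paper does not specify Turvy's lexicon or matching rules at this level.

```python
import re

# Hypothetical lexicon: each keyword maps to a test on text near the user's action.
LEXICON = {
    "word": lambda s: re.fullmatch(r"[A-Za-z]+", s) is not None,
    "colon": lambda s: s == ":",
    "comma": lambda s: s == ",",
}
# Hypothetical command vocabulary.
COMMANDS = {"stop", "undo", "ok", "no"}


def interpret(utterance, selected, following):
    """Classify an utterance as a command, a grounded hint, or ignorable speech.

    selected  -- the text the user is editing or pointing at
    following -- the token immediately after that text
    """
    words = re.findall(r"[a-z]+", utterance.lower())
    if words and words[0] in COMMANDS:
        return ("command", words[0])
    # Keep only keywords that match actual data at the locus of action.
    grounded = [
        w for w in words
        if w in LEXICON and (LEXICON[w](selected) or LEXICON[w](following))
    ]
    if grounded:
        return ("hint", grounded)
    return ("ignored", [])
```

With the user pointing at "Agre" followed by a colon, `interpret("look for a word before a colon", "Agre", ":")` yields a hint containing `word` and `colon`, while a remark such as "This is a list of publications" contains no lexicon keyword and is ignored.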

Turvy as system

This section supplies a brief description of Turvy’s built-in

knowledge, inference mechanism, and interaction model.

Maulsby (1993) provides more detail. Turvy learns

procedures and data descriptions: specialized types, structures,

orderings (cf. Halbert, 1984). Turvy’s learning strategy is to

make a plausible generalization from one example, then

revise it as more are seen or when the user gives descriptive

hints. Turvy’s tactic is to elicit hints by predicting.

We designed a formal model of the learning system in terms

of a grammar for tasks it could learn, then chose an

application (text editing), and made a detailed model of

Turvy’s background knowledge, in the form of an attribute

grammar for textual search patterns. The knowledge is more

primitive than that in Myers’ (1991) demonstrational text

formatter. Untutored Turvy recognizes characters, words,

paragraphs, brackets, punctuation, and properties like case

and font. Turvy learns to search for sequences of tokens

with specified properties.
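As a rough illustration of searching for "sequences of tokens with specified properties", the sketch below tokenizes text and scans for a run of tokens that satisfy a list of property tests. It is a simplification under stated assumptions: the tokenizer, the property tests, and `find_sequence` are hypothetical names, and Turvy's actual background knowledge is an attribute grammar described in Maulsby (1993), not this code.

```python
import re


def tokenize(text):
    """Split text into words, digit runs, and single punctuation marks."""
    return re.findall(r"[A-Za-z]+|\d+|[^\sA-Za-z\d]", text)


# Hypothetical property tests over single tokens.
def is_capitalized(token):
    return token.isalpha() and token[0].isupper()


def is_punct(mark):
    """Build a test that accepts exactly one punctuation mark."""
    return lambda token: token == mark


def find_sequence(tokens, tests):
    """Return the index of the first run of tokens passing the tests in order, or None."""
    n = len(tests)
    for i in range(len(tokens) - n + 1):
        if all(test(tok) for test, tok in zip(tests, tokens[i:i + n])):
            return i
    return None
```

For the text "Philip E. Agre: The dynamic structure", searching for a capitalized word followed by a colon with `find_sequence(tokens, [is_capitalized, is_punct(":")])` locates the token "Agre".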

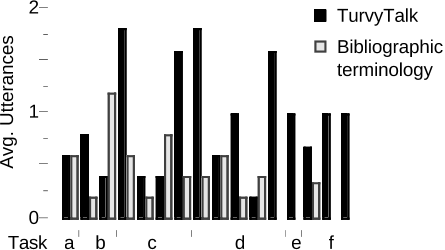

We designed a model of interaction—an incomplete model,

formulated as a list of 32 kinds of instructions implied by

the learning model. If a person gives all the instructions,

the system can learn without inferencing, but we predicted

that people would use only the subset listed in Table 1. We

believe the user will give incomplete or ambiguous

instructions, which the system will complete by making

inferences and eliciting further details. Note that we told

Turvy’s users only that it could understand “some English”;

they did not receive a list of instructions or wordings. In

turn, the Wizard as Turvy responded only to instructions

whose intention corresponded to some message in the

interaction model.

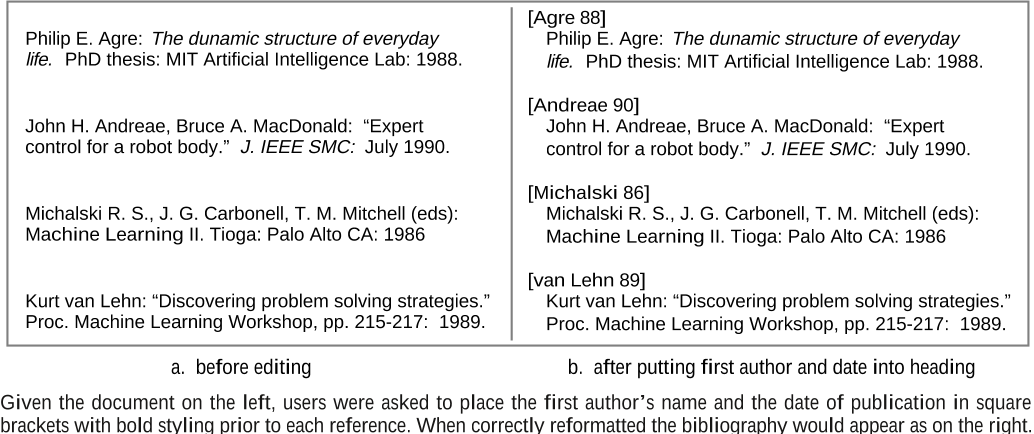

Tasks and an example user/Turvy dialog

We made up six tasks and a data set, on the theme of

formatting a bibliography:

a. italicize journal titles

b. quote the titles of conference and journal papers

c. create a citation heading with the primary author’s

surname and year of publication (illustrated in Figure 1)

d. put authors’ given names and initials after surnames

e. put book titles in Times-Roman font

f. strip out colons that separate bibliographic fields

Tasks involved domain concepts like “title,” “journal vs.

book,” and “list of authors,” concepts Turvy does not

understand. Users were shown before and after snapshots of

example data for each task; no mention was made of the

syntactic features Turvy would learn. This tested Turvy’s

ability to elicit effective demonstrations and verbal hints.

a. before editing

Philip E. Agre: The dynamic structure of everyday life. PhD thesis: MIT Artificial Intelligence Lab: 1988.

John H. Andreae, Bruce A. MacDonald: “Expert control for a robot body.” J. IEEE SMC: July 1990.

Michalski R. S., J. G. Carbonell, T. M. Mitchell (eds): Machine Learning II. Tioga: Palo Alto CA: 1986

Kurt van Lehn: “Discovering problem solving strategies.” Proc. Machine Learning Workshop, pp. 215-217: 1989.

b. after putting first author and date into heading

[Agre 88]

Philip E. Agre: The dynamic structure of everyday life. PhD thesis: MIT Artificial Intelligence Lab: 1988.

[Andreae 90]

John H. Andreae, Bruce A. MacDonald: “Expert control for a robot body.” J. IEEE SMC: July 1990.

[Michalski 86]

Michalski R. S., J. G. Carbonell, T. M. Mitchell (eds): Machine Learning II. Tioga: Palo Alto CA: 1986

[van Lehn 89]

Kurt van Lehn: “Discovering problem solving strategies.” Proc. Machine Learning Workshop, pp. 215-217: 1989.

Given the document in panel a, users were asked to place the first author’s name and the date of publication in square brackets with bold styling prior to each reference. When correctly reformatted the bibliography would appear as in panel b.

Figure 1. Sample data from Task c, “make citation headings”.

Similar concepts were repeated in later tasks, to see whether

users adopted Turvy’s description.

Figure 1 shows a sample of the data for Task c. The user is

supposed to make citation headings for each entry, using

the primary author’s surname and part of the date. From

Turvy’s point of view, these items are the word before the

first colon or comma in the paragraph, and the two digits

before the period at the paragraph’s end. Exceptional cases

to be learned include a baronial prefix (e.g. “van Lehn”) and

initials after surname (e.g. “Michalski R. S.”).

    TaskC:       repeat (FindSurname FindDate)

    FindSurname: if find pattern (Loc := BeginParagraph SomeText
                     Surname := ({0 or more LowerCaseWord} Word)
                     [Colon or Comma])
                 then do MakeHeading else TurvyAllDone

    MakeHeading: select Surname; copy Surname;
                 put cursor before Loc; type “[”; paste Surname;
                 type (DateLoc := Blank “]”); type Return;
                 select styleMenu; select “citation”

    FindDate:    if find pattern (Date := (Digit Digit)
                     Period EndParagraph)
                 then do CopyDate else TurvyAskUserForDemo

    CopyDate:    select Date; copy Date;
                 put cursor after DateLoc; paste Date

Figure 2. Pseudo-code derived by analyzing Task c (from Figure 1) with Turvy’s inference model.

Prior to the experiment, we analyzed this task using the

inference model and got code similar to that in Figure 2

(we’ve omitted the code for handling initials after a

surname). Coding helps the Wizard act consistently, even if

users teach somewhat different procedures. The code loops

over all entries in the bibliography. Each step involves

finding a pattern in the text (to instantiate data descriptions)

and then executing a macro; if not found, a default action is

done. Macros are straight-line procedures that refer only to

instantiated variables. Mnemonic variable and macro names

are shown in italics.
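To make the control flow concrete, here is a minimal Python sketch of the Figure 2 procedure. It is an approximation under stated assumptions, not Turvy's implementation: regular expressions stand in for the attribute-grammar patterns, the function names are hypothetical, and, as in Figure 2, initials after a surname are not handled.

```python
import re

# Surname: zero or more lowercase words, then a word, at the end of the text
# that precedes the first colon or comma in the paragraph.
SURNAME = re.compile(r"\b((?:[a-z]+ )*[A-Za-z]+)$")
# Date: two digits before the period that ends the paragraph.
DATE = re.compile(r"(\d\d)\.$")


def find_surname(paragraph):
    first_mark = re.search(r"[:,]", paragraph)
    if first_mark is None:
        return None
    match = SURNAME.search(paragraph[:first_mark.start()])
    return match.group(1) if match else None


def find_date(paragraph):
    match = DATE.search(paragraph.rstrip())
    return match.group(1) if match else None


def task_c(paragraphs):
    """Put a citation heading before each entry; return (lines, indexes needing a demo)."""
    out, need_demo = [], []
    for i, para in enumerate(paragraphs):
        surname = find_surname(para)
        if surname is None:       # FindSurname fails: TurvyAllDone
            out.extend(paragraphs[i:])
            break
        date = find_date(para)
        if date is None:          # FindDate fails: TurvyAskUserForDemo
            need_demo.append(i)
            date = ""
        out.append(f"[{surname} {date}]")
        out.append(para)
    return out, need_demo
```

Applied to the Agre and van Lehn entries of Figure 1, this sketch produces the headings `[Agre 88]` and `[van Lehn 89]`. The Michalski entry shows why the exceptional cases matter: the text before its first comma ends in an initial rather than a surname, and the paragraph does not end in a period, so both patterns fail on it.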

Below is the trace of a dialog between Turvy and “Kate”, a

composite of two users in our study (the first paragraph was

spoken by one, the rest by the other). Kate is working

through Task c: the first example is “Agre” in Figure 1.

Kate: Take the last name, it will be in some cases before a colon. [Kate copies “Agre”, pastes it into a new paragraph, types the brackets and sets the style.] This is a list of publications. Now we’re going to add the last two digits of the date [types “88”].

Kate gives hints about the syntax (“before a colon”, “last

two digits”) and the subject matter (“name”, “a list of

publications”). Turvy interprets only the hints about

syntax, and then only in relation to actions demonstrated

(so “last two digits” is taken to mean the digits typed, not

those at the end of the paragraph).

Kate then signals the end of the lesson and gives Turvy a

chance to take over or get another example. Turvy edits the

next entry (not shown in Figure 1).

Kate: Do you want another demo?

Turvy: I can try if you want.

Kate: OK.

Turvy: Looking for word before first colon in next paragraph [picks surname], OK?

Kate: OK.

Turvy: [while doing actions] Copy, go to start of paragraph, type return, paste, type brackets, change style to “citation”, OK?

Kate: OK.

Now Turvy makes a mistake. Kate shows the right action

and Turvy echoes back a new hypothesis reflecting her hint

from earlier on.

Turvy: Type in blank space “88”, OK?

Kate: No, the last two digits at the end.

Turvy: Show me please.

[Kate picks digits.]

Turvy: Two digits before period at end of paragraph?

Kate: Yes.

Turvy: Continue?

Kate: OK.
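The exchange above, in which a first guess ("type 88") is replaced after a second example, can be sketched as choosing the first candidate hypothesis consistent with all examples seen so far. This is only an illustration of the generalize-then-revise strategy: the candidate list, its ordering, and the example paragraphs are assumptions made for the sketch and are not taken from Turvy's inference model.

```python
import re


def constant(text):
    """Hypothesis: the user always types the same literal text."""
    return lambda paragraph: text


def two_digits_before_final_period(paragraph):
    """Hypothesis: the value is the two digits before the paragraph-final period."""
    match = re.search(r"(\d\d)\.$", paragraph)
    return match.group(1) if match else None


def consistent(hypothesis, examples):
    """A hypothesis survives if it reproduces every (paragraph, value) example."""
    return all(hypothesis(p) == v for p, v in examples)


def revise(candidates, examples):
    """Return the name of the first candidate consistent with all examples, or None."""
    for name, hypothesis in candidates:
        if consistent(hypothesis, examples):
            return name
    return None


# Hypothetical candidates, ordered from most literal to more general.
CANDIDATES = [
    ("type 88", constant("88")),
    ("two digits before period at end of paragraph", two_digits_before_final_period),
]
# Hypothetical paragraph endings paired with the digits the user entered.
first_example = [("MIT Artificial Intelligence Lab: 1988.", "88")]
after_correction = first_example + [("J. IEEE SMC: July 1990.", "90")]
```

Here `revise(CANDIDATES, first_example)` selects the literal hypothesis, and `revise(CANDIDATES, after_correction)` selects the positional one, since the constant "88" no longer explains the second example.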