HAL Id: hal-00906902

https://hal.inria.fr/hal-00906902

Submitted on 10 Dec 2013


Towards understanding action recognition

Hueihan Jhuang, Jurgen Gall, Silvia Zuffi, Cordelia Schmid, Michael J. Black

To cite this version:

Hueihan Jhuang, Jurgen Gall, Silvia Zuffi, Cordelia Schmid, Michael J. Black. Towards understanding action recognition. ICCV - IEEE International Conference on Computer Vision, Dec 2013, Sydney, Australia. pp.3192-3199, doi:10.1109/ICCV.2013.396. hal-00906902.

Hueihan Jhuang¹, Juergen Gall², Silvia Zuffi³, Cordelia Schmid⁴, Michael J. Black¹

¹MPI for Intelligent Systems, Germany; ²University of Bonn, Germany; ³Brown University, USA; ⁴LEAR, INRIA, France

Abstract

Although action recognition in videos is widely studied, current methods often fail on real-world datasets. Many recent approaches improve accuracy and robustness to cope with challenging video sequences, but it is often unclear what affects the results most. This paper attempts to provide insights based on a systematic performance evaluation using thoroughly annotated data of human actions. We annotate human Joints for the HMDB dataset (J-HMDB). This annotation can be used to derive ground truth optical flow and segmentation. We evaluate current methods using this dataset and systematically replace the output of various algorithms with ground truth. This enables us to discover what is important. For example, should we work on improving flow algorithms, estimating human bounding boxes, or enabling pose estimation? In summary, we find that high-level pose features greatly outperform low/mid-level features; in particular, pose over time is critical. While current pose estimation algorithms are far from perfect, features extracted from estimated pose on a subset of J-HMDB in which the full body is visible outperform low/mid-level features. We also find that the accuracy of the action recognition framework can be greatly increased by refining the underlying low/mid-level features; this suggests it is important to improve optical flow and human detection algorithms. Our analysis and the J-HMDB dataset should facilitate a deeper understanding of action recognition algorithms.

1. Introduction

Current computer vision algorithms fall far below human performance on activity recognition tasks. While most computer vision algorithms perform very well on simple lab-recorded datasets [31], state-of-the-art approaches still struggle to recognize actions in more complex videos taken from public sources like movies [14, 17]. According to [30], the HMDB51 dataset [14] is the most challenging dataset for vision algorithms, with the best method achieving only 48% accuracy. Many factors might limit current methods, for example weak visual cues or a lack of high-level cues. Without a clear understanding of what makes a method perform well, it is difficult for the field to make progress.

Our goal is twofold. First, towards understanding algorithms for human action recognition, we systematically analyze a recognition algorithm to better understand the limitations and to identify components where an algorithmic improvement would most likely increase the overall accuracy. Second, towards understanding intermediate data that would support recognition, we present insights on how much low- to high-level reasoning about the human is needed to recognize actions.

Such an analysis requires ground truth for a challenging dataset. We focus on one of the most challenging datasets for action recognition (HMDB51 [14]) and on the approach that achieves the best performance on this dataset (Dense Trajectories [30]). From HMDB51, we extract 928 clips comprising 21 action categories and annotate each frame using a 2D articulated human puppet model [36] that provides scale, pose, segmentation, coarse viewpoint, and dense optical flow for the humans in action. An example annotation is shown in Fig. 1 (a-d). We refer to this dataset as J-HMDB for "joint-annotated HMDB".

J-HMDB is valuable for linking low- to mid-level features with high-level poses; see Fig. 1 (e-h) for an illustration. Holistic approaches like [30] rely on low-level cues that are sampled from the entire video (e). Dense optical flow within the mask of the person (f) provides more detailed low-level information. Also, by identifying the person in action and their size, the sampling of the features can be concentrated on the region of interest (g). Higher-level pose features require knowledge of the joints (h) but can be semantically interpreted. Relations between joints (h) provide richer information and enable more complex models.

Pose has been used in early work on action recognition [3, 32]. For a complex dataset such as ours, however, low- to mid-level features are typically used instead of pose because pose estimation is hard. Recently, human pose as a feature for action recognition has been revisited [10, 22, 26, 29, 34]. In [34], it is shown that current approaches for human pose estimation from multiple camera views are accurate enough for reliable action recognition. For monocular videos, several works show that current pose estimation algorithms are reliable enough to recognize actions on relatively simple datasets [10, 26, 29]; however, [22] shows that they are not good enough to classify fine-grained activities. Using J-HMDB, we show that ground truth pose information enables action recognition performance beyond current state-of-the-art methods.

Figure 1. Overview of our annotation and evaluation. (a-d) A video frame annotated by a puppet model [36]. (a) image frame, (b) puppet flow [35], (c) puppet mask, (d) joint positions and relations. Three types of joint relations are used: 1) distance and 2) orientation of the vector connecting pairs of joints, i.e. the magnitude and the direction of the vector u; 3) inner angle spanned by two vectors connecting triples of joints, i.e. the angle between the two vectors u and v. (e-h) From left to right, we gradually provide the baseline algorithm (e) with different levels of ground truth from (b) to (d). The trajectories are displayed in green.
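The three joint relations listed in the Figure 1 caption can be illustrated with a small sketch. It assumes 2D joint coordinates in pixels. The function names, the use of radians, and the choice of the middle joint of a triple as the angle's vertex are assumptions made for illustration. Any normalization or temporal differencing applied in the actual pose features is not shown.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) image coordinates of one joint


def pair_relations(a: Point, b: Point) -> Tuple[float, float]:
    """Distance and orientation of the vector u = b - a connecting two joints."""
    ux, uy = b[0] - a[0], b[1] - a[1]
    distance = math.hypot(ux, uy)      # magnitude of u
    orientation = math.atan2(uy, ux)   # direction of u in radians, in (-pi, pi]
    return distance, orientation


def inner_angle(a: Point, vertex: Point, c: Point) -> float:
    """Angle between u = a - vertex and v = c - vertex for a joint triple.

    Treating the middle joint as the vertex is an assumption of this sketch.
    """
    ux, uy = a[0] - vertex[0], a[1] - vertex[1]
    vx, vy = c[0] - vertex[0], c[1] - vertex[1]
    cross = ux * vy - uy * vx
    dot = ux * vx + uy * vy
    # atan2(|cross|, dot) is numerically stable and lies in [0, pi].
    return abs(math.atan2(cross, dot))


# Hypothetical shoulder, elbow and wrist positions.
shoulder, elbow, wrist = (0.0, 0.0), (3.0, 0.0), (3.0, 4.0)
print(pair_relations(shoulder, wrist))                      # distance 5.0
print(math.degrees(inner_angle(shoulder, elbow, wrist)))    # 90 degrees: right angle at the elbow
```

Using `atan2` of the cross and dot products avoids the loss of precision that `acos` of a normalized dot product suffers near 0 and 180 degrees.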

While our main focus is to analyze the potential impact of different cues, the dataset is also valuable for evaluating human pose estimation and human detection in videos. Our preliminary results show that pose features estimated from [33] perform much worse than the ground truth pose features, but they outperform low/mid-level features for action recognition on clips where the full body is visible. We also show that human bounding boxes estimated by [2] and optical flow estimated by [27] do not improve the performance of current action recognition algorithms.

2. Related Studies and Datasets

Previous work has analyzed data in detail to understand algorithm performance in the context of object detection and image classification. In [20], a human study of visual recognition tasks is performed to identify the role of algorithms, data, and features. In [11], issues like occlusion, object size, or aspect ratio are examined for two classes of object detectors. Our work shares with these studies the idea that analyzing and understanding data is important to advance the state of the art.

Previous datasets used to benchmark pose estimation or action recognition algorithms are summarized in Tab. 1. Existing datasets that contain action labels and pose annotations are typically recorded in a laboratory or static environment with actors performing specific actions. These are often unrealistic, resulting in lower intra-class variation than in real-world videos. While marker-based motion capture systems provide accurate 3D ground-truth pose data [12, 15, 19, 25], they are impractical for recording realistic video data. Other datasets focus on narrow scenarios [22, 28]. More realistic datasets for pose estimation and action recognition have been collected from TV or movie footage. Commonly considered sources for action recognition are sport activities [18], YouTube videos [21], or movie scenes [14, 16]. In comparison to sport videos, actions annotated from movies are much more challenging as they present real-world background variation, exhibit more intra-class variation, and have more appearance variation due to viewpoint, scale, and occlusion. Since HMDB51 [14] is the most challenging dataset among the current movie datasets [30], we build on it to create J-HMDB.

J-HMDB is, however, more than a dataset of human actions; it could also serve as a benchmark for pose estimation and human detection. Most pose datasets contain images of a single non-occluded person in the center of the image, and the approximate scale of the person is known [8, 10, 13]. These image-based datasets constitute a very small subset of all possible variations of human poses and sizes because the subjects are not performing actions, with the exception of the Leeds Sports Pose Dataset [13]. The VideoPose2 dataset [24] contains a number of annotated video clips taken from two TV series in order to evaluate pose estimation approaches on realistic data. The dataset is, however, limited to upper-body pose estimation and contains very few clips. Our dataset presents a new challenge to the field of human pose estimation and tracking since it contains more variation in poses, human sizes, camera motions, motion blur, and partial- or full-body visibility.

| benchmark | examples | videos | actions | wild | pose |
|---|---|---|---|---|---|
| pose estimation | Buffy stickman [10] | | | y | y |
| | ETHZ PASCAL [8] | | | y | y |
| | H3D [2] | | | y | y |
| | Leeds Sports [13] | | | y | y |
| | VideoPose [24] | y | | y | y |
| action recognition | UCF50 [21] | y | y | y | |
| | HMDB51 [14] | y | y | y | |
| | Hollywood2 [17] | y | y | y | |
| | Olympics [18] | y | y | y | |
| pose and action | HumanEvaII [25] | y | y | | y |
| | CMU-MMAC [15] | y | y | | y |
| | Human 3.6M [12] | y | y | | y |
| | Berkeley MHAD [19] | y | y | | y |
| | MPII Cooking [22] | y | y | | y |
| | TUM kitchen [28] | y | y | | y |
| | J-HMDB | y | y | y | y |

Table 1. Related datasets.

3. The Dataset

3.1. Selection

The HMDB51 database [14] contains more than 5,100 clips of 51 different human actions collected from movies or the Internet. Annotating this entire dataset is impractical, so J-HMDB is a subset with fewer categories. We excluded categories that contain mainly facial expressions like smiling, interactions with others such as shaking hands, and actions that can only be done in a specific way such as a cartwheel. The result contains 21 categories involving a single person in action: brush hair, catch, clap, climb stairs, golf, jump, kick ball, pick, pour, pull-up, push, run, shoot ball, shoot bow, shoot gun, sit, stand, swing baseball, throw, walk, wave. Since we focus on and annotate the person in action in each clip, we remove clips in which the actor is not obvious. We further crop each remaining clip in time such that the first and last frames roughly correspond to the beginning and end of an action. This selection-and-cleaning process results in 36-55 clips per action class, with each clip containing 15-40 frames. In total, there are 31,838 annotated frames. J-HMDB is available at http://jhmdb.is.tue.mpg.de.

3.2. Annotation

For annotation, we use a 2D puppet model [36] in which the human body is represented as a set of 10 body parts connected by 13 joints (shoulder, elbow, wrist, hip, knee, ankle, neck) and two landmarks (face and belly). We construct puppets in 16 viewpoints across the 360-degree radial space in the transverse plane. We built a graphical user interface to control the viewpoint and scale, in which the joints can be selected and moved in the image plane. The annotation involves adjusting the joint positions so that the contours of the puppet align with image information [36]. In contrast to simple joint or limb annotations, the puppet model guarantees realistic limb size proportions, in particular in the context of occlusions, and also provides an approximate 2D shape of the human body. The annotated shapes are then used to compute the 2D optical flow corresponding to the human motion, which we call "puppet flow" [35]. The puppet mask (i.e. the region contained within the puppet) is also used to initialize GrabCut [23] to obtain a segmentation mask. Fig. 1 (b-d) shows a sample annotation.

The annotation is done using Amazon Mechanical Turk. To aid annotators, we provide the posed puppet on the first frame of each video clip. For each subsequent frame, the interface initializes the joint positions and the scale with those of the previous frame. We manually correct annotation errors during a post-annotation screening process.

In summary, the person performing the action in each frame is annotated with his/her 2D joint positions, scale, viewpoint, segmentation, puppet mask and puppet flow. Details about the annotation interface and the distribution of joint locations, viewpoints, and scales of the annotations are provided on the website.

3.3. Training and testing set generation

Training and testing splits are generated as in [14]. For each action category, clips are randomly grouped into two sets with the constraint that clips from the same video belong to the same set. We iterate the grouping until the ratio of the number of clips in the two sets and the ratio of the number of distinct video sources in the two sets are both close to 7:3. The 70% set is used for training and the 30% set for testing. Three splits are randomly generated, and the performance reported here is the average over the three splits. Note that the number of training/testing clips is similar across categories and we report the per-video accuracy, which does not differ much from the per-class accuracy.
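A minimal sketch of this grouped random split for a single action category follows. It assumes each clip is tagged with the ID of its source video. The tolerance `tol`, the iteration cap, per-video coin flips as the grouping scheme, and the function name `grouped_split` are hypothetical choices. The paper only states that groupings are redrawn until both ratios are close to 7:3.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple


def grouped_split(clips: List[Tuple[str, str]], train_frac: float = 0.7,
                  tol: float = 0.05, max_iter: int = 10_000,
                  seed: int = 0) -> Tuple[List[str], List[str]]:
    """Split the clips of one category into (train, test) clip IDs.

    clips: (clip_id, video_id) pairs. All clips cut from the same source video
    go to the same set. Random groupings are drawn until both the clip ratio
    and the video ratio are within `tol` of `train_frac`.
    """
    rng = random.Random(seed)
    by_video: Dict[str, List[str]] = defaultdict(list)
    for clip_id, video_id in clips:
        by_video[video_id].append(clip_id)
    videos = sorted(by_video)
    for _ in range(max_iter):
        # Assign each source video (with all of its clips) to one of the two sets.
        in_train = {v: rng.random() < train_frac for v in videos}
        train = [c for v in videos if in_train[v] for c in by_video[v]]
        test = [c for v in videos if not in_train[v] for c in by_video[v]]
        clip_ratio = len(train) / len(clips)
        video_ratio = sum(in_train.values()) / len(videos)
        if abs(clip_ratio - train_frac) <= tol and abs(video_ratio - train_frac) <= tol:
            return train, test
    raise RuntimeError("no grouping within tolerance; increase tol or max_iter")


# Hypothetical category with 20 source videos and 2 clips per video.
demo = [(f"clip{v}_{k}", f"vid{v}") for v in range(20) for k in range(2)]
train, test = grouped_split(demo)
print(len(train), len(test))
```

Calling it with three different seeds would give three splits comparable to the three reported here. Grouping by source video keeps near-duplicate clips from the same video out of both sets at once, which would otherwise inflate test accuracy.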

4. Study of low-level features

We focus our evaluation on the Dense Trajectories (DT)

algorithm [

30] since it is currently the best performing

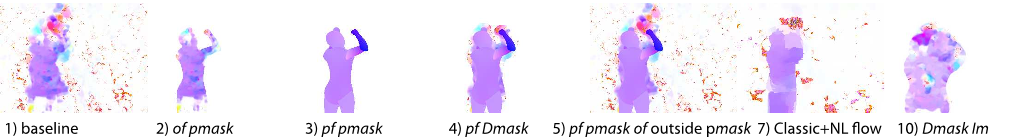

1) baseline 4) pf Dmask3) pf pmask2) of pmask 7) Classic+NL ow5) pf pmask of outside pmask 10) Dmask Im

Figure 2. Comparison of various flow settings. The flow is numbered according to Tab. 2. See Sec. 4.2 and Sec. 5 for details.

method on the HMDB51 database [

14] and because it re-

lies on video feature descriptors that are also used by other

methods. We first review DT in Sec. 4.1, and then we re-

place pieces of the algorithm with the ground truth data to

provide low, mid, and high level information in Sec.

4.2,

Sec.

5 and Sec. 6.2 respectively.

4.1. DT features

The DT algorithm [30] represents video data by dense trajectories along with motion and shape features around the trajectories. The feature points are densely sampled on each frame using a grid with a spacing of 5 pixels at each of 8 spatial scales, which increase by a factor of 1/√2. Feature points are further pruned, keeping only those whose auto-correlation matrix has eigenvalues larger than a threshold. For each frame, a dense optical flow field is computed with respect to the next frame using the OpenCV implementation of Gunnar Farnebäck's algorithm [9]. A 3 × 3 median filter is applied to the flow field, and this denoised flow is used to track the selected points over 15 frames of the clip, forming the trajectories.
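The sampling and tracking steps can be sketched as follows. This is a simplified illustration, not the DT source code. The grid offset (starting at pixel 0), border clamping, and rounding the point position before looking up the flow are assumptions. Eigenvalue pruning and the removal of trajectories that leave the frame are omitted. Flow fields are plain nested lists indexed `flow[y][x] = (u, v)`.

```python
import math
from statistics import median
from typing import List, Tuple

Flow = List[List[Tuple[float, float]]]  # flow[y][x] = (u, v), displacement to the next frame


def grid_points(width: int, height: int, step: int = 5, n_scales: int = 8):
    """Dense sampling on a `step`-pixel grid at each of `n_scales` scales.

    Scale s resizes the frame by (1/sqrt(2))**s. Returns (s, x, y) tuples in
    that scale's pixel coordinates. Eigenvalue-based pruning is not done here.
    """
    points = []
    for s in range(n_scales):
        factor = (1 / math.sqrt(2)) ** s
        w, h = int(width * factor), int(height * factor)
        points.extend((s, x, y) for y in range(0, h, step) for x in range(0, w, step))
    return points


def median_flow_at(flow: Flow, x: float, y: float) -> Tuple[float, float]:
    """Component-wise 3x3 median of the flow around the rounded position (borders clamped)."""
    h, w = len(flow), len(flow[0])
    cx, cy = round(x), round(y)
    window = [flow[min(max(cy + dy, 0), h - 1)][min(max(cx + dx, 0), w - 1)]
              for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    return median([u for u, _ in window]), median([v for _, v in window])


def track(x: float, y: float, flows: List[Flow], length: int = 15) -> List[Tuple[float, float]]:
    """Follow one point through `length` consecutive flow fields (length + 1 positions)."""
    traj = [(float(x), float(y))]
    for flow in flows[:length]:
        u, v = median_flow_at(flow, x, y)   # denoised displacement at the current position
        x, y = x + u, y + v
        traj.append((x, y))
    return traj
```

Because the median is robust to isolated outliers, a single corrupted flow vector next to a point does not deflect its trajectory, whereas a 3 × 3 mean would.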

For each trajectory, L = 5 types of descriptors are computed, where each descriptor is normalized to have unit L2 norm.

Traj: Given a trajectory of length T = 15, the shape of the trajectory is described by a sequence of displacement vectors, corresponding to the translation along the x- and y-coordinates across the trajectory. It is further normalized by the sum of displacement vector magnitudes, i.e.

$$\frac{(\Delta P_t, \ldots, \Delta P_{t+T-1})}{\sum_{j=t}^{t+T-1} \lVert \Delta P_j \rVert}, \qquad \Delta P_t = (x_{t+1} - x_t,\; y_{t+1} - y_t).$$
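The Traj normalization above translates directly into code. In this sketch, the input holds the T + 1 tracked positions P_t, ..., P_{t+T}. Returning zeros for a completely static trajectory is an assumption made to avoid division by zero, and the function name is illustrative.

```python
import math
from typing import List, Sequence, Tuple


def traj_descriptor(points: Sequence[Tuple[float, float]]) -> List[float]:
    """Trajectory shape descriptor: displacement vectors divided by the sum of their magnitudes.

    points: T + 1 positions P_t ... P_{t+T}. Returns a flat list
    (dx_t, dy_t, ..., dx_{t+T-1}, dy_{t+T-1}) of length 2T.
    """
    disp = [(x1 - x0, y1 - y0) for (x0, y0), (x1, y1) in zip(points, points[1:])]
    total = sum(math.hypot(dx, dy) for dx, dy in disp)
    if total == 0:  # static point: normalization undefined, return zeros (assumption)
        return [0.0] * (2 * len(disp))
    return [c / total for d in disp for c in d]


print(traj_descriptor([(0, 0), (3, 4), (3, 4), (6, 8)]))
```

With T = 15, the descriptor has 30 entries. Dividing by the total path length makes it invariant to the overall speed of the motion while keeping its shape.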

HOG: Histograms of oriented gradients [5] with 8 bins are computed in a 32 × 32-pixel × 15-frame spatio-temporal volume surrounding the trajectory. The volume is further subdivided into a spatio-temporal grid of 2 × 2 × 3 cells (2 × 2 spatially, 3 temporally).

HOF: Histograms of optical flow [16] are computed similarly to HOG, except that there are 9 bins, the additional one corresponding to pixels with optical flow magnitude lower than a threshold.

MBH: Motion boundary histograms [6] are computed separately from the spatial gradients of the horizontal and vertical components of the optical flow (giving two descriptors).
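The 9-bin HOF histogram of a single grid cell can be sketched as follows. This is not taken from the DT code. The threshold value `min_mag`, hard (non-interpolated) orientation binning, magnitude weighting of the 8 orientation bins, and a unit vote for the extra low-motion bin are all hypothetical choices.

```python
import math
from typing import Iterable, List, Tuple


def hof_cell(flow_vectors: Iterable[Tuple[float, float]], n_orient: int = 8,
             min_mag: float = 0.4) -> List[float]:
    """9-bin HOF for one cell: 8 magnitude-weighted orientation bins plus one
    extra bin counting pixels whose flow magnitude is below `min_mag`."""
    hist = [0.0] * (n_orient + 1)
    for u, v in flow_vectors:
        mag = math.hypot(u, v)
        if mag < min_mag:
            hist[n_orient] += 1.0                   # extra "no motion" bin
            continue
        angle = math.atan2(v, u) % (2 * math.pi)    # orientation in [0, 2*pi)
        b = int(angle / (2 * math.pi) * n_orient) % n_orient
        hist[b] += mag                              # vote weighted by flow magnitude
    return hist


def l2_normalize(vec: List[float]) -> List[float]:
    """Scale a descriptor to unit L2 norm (left unchanged if all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else vec


print(hof_cell([(1.0, 0.0), (0.0, 1.0), (0.1, 0.0)]))
```

Concatenating the 2 × 2 × 3 = 12 cell histograms gives 12 × 9 = 108 values per trajectory, which are then L2-normalized as stated above. The same cell layout with 8 bins yields the HOG and MBH vectors.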

For each descriptor type, a codebook of size N = 4,000 is formed by running k-means 8 times on a random selection of M = 100,000 descriptors and taking the codebook with the lowest error. The features are computed using the publicly available source code of Dense Trajectories [30] with one modification. While the original implementation computes optical flow at each scale of the spatial pyramid, we compute the flow at full resolution and build a spatial pyramid of the flow. Although this decreases the performance on our dataset by less than 1%, it is necessary to fairly evaluate the impact of flow accuracy using the puppet flow, which is generated at the original video scale.
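The codebook construction can be shown at toy scale. This sketch uses plain Lloyd's k-means with a fixed iteration count, keeps the run with the lowest sum of squared errors out of `restarts`, and quantizes a video's descriptors into a histogram of codeword counts. The iteration count, the empty-cluster rule, and returning raw counts are assumptions, since any histogram normalization is not stated here. A real run with N = 4,000 and M = 100,000 would need an optimized implementation.

```python
import random
from typing import List, Sequence, Tuple

Vec = Sequence[float]


def sq_dist(a: Vec, b: Vec) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(x: Vec, centers: List[List[float]]) -> int:
    return min(range(len(centers)), key=lambda k: sq_dist(x, centers[k]))


def kmeans(data: List[Vec], k: int, rng: random.Random,
           iters: int = 20) -> Tuple[List[List[float]], float]:
    """Plain Lloyd's k-means; returns (centers, sum of squared errors)."""
    centers = [list(p) for p in rng.sample(data, k)]
    for _ in range(iters):
        clusters: List[List[Vec]] = [[] for _ in range(k)]
        for x in data:
            clusters[nearest(x, centers)].append(x)
        for j, members in enumerate(clusters):
            if members:  # keep the previous center if a cluster becomes empty
                centers[j] = [sum(col) / len(members) for col in zip(*members)]
    error = sum(sq_dist(x, centers[nearest(x, centers)]) for x in data)
    return centers, error


def build_codebook(data: List[Vec], k: int, restarts: int = 8, seed: int = 0) -> List[List[float]]:
    """Run k-means `restarts` times and keep the codebook with the lowest error."""
    rng = random.Random(seed)
    return min((kmeans(data, k, rng) for _ in range(restarts)), key=lambda r: r[1])[0]


def bag_of_features(descriptors: List[Vec], codebook: List[List[float]]) -> List[float]:
    """Count how many of a video's descriptors fall closest to each codeword."""
    hist = [0.0] * len(codebook)
    for d in descriptors:
        hist[nearest(d, codebook)] += 1.0
    return hist


toy = [(0.0, 0.0), (0.0, 1.0), (10.0, 10.0), (10.0, 11.0)]
codebook = build_codebook(toy, 2)
print(sorted(codebook), bag_of_features([(1.0, 1.0), (9.0, 9.0)], codebook))
```

Restarting k-means and keeping the lowest-error run reduces the chance of ending in a poor local minimum caused by an unlucky initialization.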

For classification, a non-linear SVM with an RBF-χ² kernel is used, and the L types of descriptors are combined in a multi-channel setup as

$$K(i, j) = \exp\left(-\frac{1}{L}\sum_{c=1}^{L}\frac{k(x_i^c, x_j^c)}{A^c}\right),$$

where $k(x, y)$ is the χ² distance between two histograms, $x_i^c$ is the c-th descriptor for the i-th video, and $A^c$ is the mean of the χ² distances between the training examples for the c-th channel. The multi-class classification is done by LIBSVM [4] using a one-vs-all approach. The performance is denoted as "baseline" in Tab. 2 (1), and the flow is shown in Fig. 2 (1).
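The multi-channel kernel can be sketched as follows. It assumes the common χ² distance convention 0.5 · Σ (x − y)² / (x + y), skipping bins where both histograms are zero. It also assumes that $A^c$ averages over distinct training pairs (i < j). Both conventions are assumptions rather than details stated in the text. `x[c]` denotes the channel-c histogram of one video.

```python
import math
from typing import List, Sequence


def chi2_distance(x: Sequence[float], y: Sequence[float]) -> float:
    """Chi-squared distance between two non-negative histograms (0.5 * sum form)."""
    return 0.5 * sum((a - b) ** 2 / (a + b) for a, b in zip(x, y) if a + b > 0)


def channel_means(train: List[List[Sequence[float]]]) -> List[float]:
    """A^c: mean chi2 distance over distinct pairs of training videos, per channel.

    train[i][c] is the channel-c histogram of training video i (needs >= 2 videos).
    """
    n, n_channels = len(train), len(train[0])
    means = []
    for c in range(n_channels):
        dists = [chi2_distance(train[i][c], train[j][c])
                 for i in range(n) for j in range(i + 1, n)]
        means.append(sum(dists) / len(dists))
    return means


def multichannel_kernel(xi: List[Sequence[float]], xj: List[Sequence[float]],
                        A: List[float]) -> float:
    """K(i, j) = exp(-(1/L) * sum_c chi2(x_i^c, x_j^c) / A^c)."""
    L = len(A)
    return math.exp(-sum(chi2_distance(xi[c], xj[c]) / A[c] for c in range(L)) / L)


# Two hypothetical training videos with a single 2-bin channel each.
videos = [[[1.0, 0.0]], [[0.0, 1.0]]]
A = channel_means(videos)
print(A, multichannel_kernel(videos[0], videos[1], A))
```

Dividing each channel's distance by its mean $A^c$ puts descriptors with very different scales (e.g. Traj versus MBH) on a comparable footing before they are averaged. A Gram matrix built this way could be passed to LIBSVM's precomputed-kernel mode (`-t 4`), training one binary classifier per action class for one-vs-all.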

4.2. DT given puppet flow

We cannot evaluate the gain of having perfect dense optical flow, and therefore perfect trajectories, because ground truth flow is not available for these videos. Instead, we use the puppet flow as the ground truth motion in the foreground, i.e. within the puppet mask (pmask). When body parts move only slightly from one frame to the next, the puppets do not always move correspondingly, because small translations are not easily observed and annotated. To address this, for each body part that does not move, we replace its puppet flow with the flow from the baseline.
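The per-part fallback described above could look like the following sketch. It assumes a hypothetical per-pixel map of puppet part labels, with -1 for background. A part is treated as static when all of its puppet-flow vectors have magnitude at most `eps`. The paper does not state the exact test for "does not move", so both the rule and the threshold are assumptions. Background pixels are left untouched here; masking is handled separately.

```python
from typing import Dict, List, Tuple

Flow = List[List[Tuple[float, float]]]   # flow[y][x] = (u, v)
PartMap = List[List[int]]                # hypothetical part label per pixel, -1 = background


def fill_static_parts(puppet_flow: Flow, baseline_flow: Flow, parts: PartMap,
                      eps: float = 1e-6) -> Flow:
    """Replace the puppet flow of every static body part with the baseline optical flow."""
    h, w = len(parts), len(parts[0])
    moving: Dict[int, bool] = {}
    for y in range(h):
        for x in range(w):
            p = parts[y][x]
            if p >= 0:
                u, v = puppet_flow[y][x]
                # A part is moving if any of its pixels has non-negligible puppet flow.
                moving[p] = moving.get(p, False) or (u * u + v * v) > eps * eps
    out = [row[:] for row in puppet_flow]
    for y in range(h):
        for x in range(w):
            p = parts[y][x]
            if p >= 0 and not moving[p]:
                out[y][x] = baseline_flow[y][x]   # fall back to the estimated flow
    return out


print(fill_static_parts([[(0.0, 0.0), (1.0, 0.0), (0.0, 0.0)]],
                        [[(0.2, 0.1), (0.5, 0.5), (9.0, 9.0)]],
                        [[0, 1, -1]]))
```

Deciding per part rather than per pixel keeps the fallback consistent across a limb, so a static forearm is not patched with a mix of puppet and estimated flow.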

To evaluate the quality of the foreground flow, we set the flow outside pmask to zero to disable tracks outside the foreground. We compare optical flow (of) computed by Farnebäck's method and puppet flow (pf), as shown in Fig. 2 (2-3). Masking optical flow results in a 4 percentage point (pp) gain over the baseline, and masking puppet flow gives a 6 pp gain (Tab. 2 (2-3)). The gain mainly comes from HOF and MBH.
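The masking step can be sketched as a per-pixel choice between a foreground flow and a background flow. This framing, and the function names `combine_flows` and `mask_flow`, are hypothetical. Zeroing the background, as in settings (2) and (3), is the special case where the background flow is all zeros. Presumably such zero-motion tracks are then discarded by DT's removal of static trajectories. The same helper would express setting (5) by passing the optical flow as the background.

```python
from typing import List, Tuple

Flow = List[List[Tuple[float, float]]]   # flow[y][x] = (u, v)
Mask = List[List[bool]]                  # True inside the (puppet) mask


def combine_flows(fg_flow: Flow, bg_flow: Flow, mask: Mask) -> Flow:
    """Take fg_flow inside the mask and bg_flow outside it."""
    return [[f if m else b for f, b, m in zip(frow, brow, mrow)]
            for frow, brow, mrow in zip(fg_flow, bg_flow, mask)]


def mask_flow(flow: Flow, mask: Mask) -> Flow:
    """Zero the flow outside the mask so that no moving tracks arise there."""
    zeros = [[(0.0, 0.0)] * len(row) for row in flow]
    return combine_flows(flow, zeros, mask)


print(mask_flow([[(1.0, 2.0), (3.0, 4.0)]], [[True, False]]))
```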

We dilate the puppet mask to include a narrow strip surrounding the person's contour; we call the result Dmask. The width of the strip is scale dependent, ranging from 1 to 10 pixels with an average width of 6 pixels. Since puppet flow is not defined outside the puppet mask, optical flow (of) is used on the narrow strip, as shown in Fig. 2 (4). Using Dmask increases the performance of (3) by 2.3 pp (Tab. 2 (4) vs. (3)). Comparing Fig. 2 (3) and (4), the latter has clear flow discontinuities caused

![Figure 1. Overview of our annotation and evaluation. (a-d) A video frame annotated by a puppet model [36]. (a) image frame, (b) puppet flow [35], (c) puppet mask, (d) joint positions and relations. Three types of joint relations are used: 1) distance and 2) orientation of the vector connecting pairs of joints; i.e. the magnitude and the direction of the vector u. 3) Inner angle spanned by two vectors connecting triples of joints; i.e. the angle between the two vectors u and v. (e-h) From left to right, we gradually provide the baseline algorithm (e) with different levels of ground truth from (b) to (d). The trajectories are displayed in green.](/figures/figure-1-overview-of-our-annotation-and-evaluation-a-d-a-3jrgh78x.png)