1532 IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. 9, NO. 9, SEPTEMBER 2000

Adaptive Wavelet Thresholding for Image Denoising and Compression

S. Grace Chang, Student Member, IEEE, Bin Yu, Senior Member, IEEE, and Martin Vetterli, Fellow, IEEE

Abstract—The first part of this paper proposes an adaptive, data-driven threshold for image denoising via wavelet soft-thresholding. The threshold is derived in a Bayesian framework, and the prior used on the wavelet coefficients is the generalized Gaussian distribution (GGD) widely used in image processing applications. The proposed threshold is simple and closed-form, and it is adaptive to each subband because it depends on data-driven estimates of the parameters. Experimental results show that the proposed method, called BayesShrink, is typically within 5% of the MSE of the best soft-thresholding benchmark with the image assumed known. It also outperforms Donoho and Johnstone’s SureShrink most of the time.

The second part of the paper attempts to further validate recent claims that lossy compression can be used for denoising. The BayesShrink threshold can aid in the parameter selection of a coder designed with the intention of denoising, thus achieving simultaneous denoising and compression. Specifically, the zero-zone in the quantization step of compression is analogous to the threshold value in the thresholding function. The remaining coder design parameters are chosen based on a criterion derived from Rissanen’s minimum description length (MDL) principle. Experiments show that this compression method does indeed remove noise significantly, especially for large noise power. However, it introduces quantization noise and should be used only if bitrate is a concern in addition to denoising.

Index Terms—Adaptive method, image compression, image denoising, image restoration, wavelet thresholding.

I. INTRODUCTION

AN IMAGE is often corrupted by noise in its acquisition or transmission. The goal of denoising is to remove the noise while retaining as much as possible the important signal features. Traditionally, this is achieved by linear processing such as Wiener filtering. A vast literature has emerged recently on signal denoising using nonlinear techniques, in the setting of additive white Gaussian noise. The seminal work of Donoho and Johnstone on signal denoising via wavelet thresholding or shrinkage ([13]–[16]) has shown that various wavelet thresholding schemes for denoising have near-optimal properties in the minimax sense and perform well in simulation studies of one-dimensional curve estimation. Wavelet thresholding has also been shown to have better rates of convergence than linear methods for approximating functions in Besov spaces ([13], [14]). Thresholding is a nonlinear technique, yet it is very simple because it operates on one wavelet coefficient at a time. Alternative approaches to nonlinear wavelet-based denoising can be found in, for example, [1], [4], [8]–[10], [12], [18], [19], [24], [27]–[29], [32], [33], [35], and references therein.

Manuscript received January 22, 1998; revised April 7, 2000. This work was supported in part by the NSF Graduate Fellowship and the University of California Dissertation Fellowship to S. G. Chang; ARO Grant DAAH04-94-G-0232 and NSF Grant DMS-9322817 to B. Yu; and NSF Grant MIP-93-213002 and Swiss NSF Grant 20-52347.97 to M. Vetterli. Part of this work was presented at the IEEE International Conference on Image Processing, Santa Barbara, CA, October 1997. The associate editor coordinating the review of this manuscript and approving it for publication was Prof. Patrick L. Combettes.

S. G. Chang was with the Department of Electrical Engineering and Computer Sciences, University of California, Berkeley, CA 94720 USA. She is now with Hewlett-Packard Company, Grenoble, France (e-mail: grchang@yahoo.com).

B. Yu is with the Department of Statistics, University of California, Berkeley, CA 94720 USA (e-mail: binyu@stat.berkeley.edu).

M. Vetterli is with the Laboratory of Audiovisual Communications, Swiss Federal Institute of Technology (EPFL), Lausanne, Switzerland and also with the Department of Electrical Engineering and Computer Sciences, University of California, Berkeley, CA 94720 USA.

Publisher Item Identifier S 1057-7149(00)06914-1.

On a seemingly unrelated front, lossy compression has been

proposed for denoising in several works [6], [5], [21], [25],

[28]. Concerns regarding the compression rate were explicitly

addressed. This is important because any practical coder must

assume a limited resource (such as bits) at its disposal for repre-

senting the data. Other works [4], [12]–[16] also addressed the

connection between compression and denoising, especially with

nonlinear algorithms such as wavelet thresholding in a mathe-

matical framework. However, these latter works were not con-

cerned with quantization and bitrates: compression results from

a reduced number of nonzero wavelet coefficients, and not from

an explicit design of a coder.

The intuition behind using lossy compression for denoising may be explained as follows. A signal typically has structural correlations that a good coder can exploit to yield a concise representation. White noise, however, does not have structural redundancies and thus is not easily compressible. Hence, a good compression method can provide a suitable model for distinguishing between signal and noise. The discussion will be restricted to wavelet-based coders, though these insights can be extended to other transform-domain coders as well. A concrete connection between lossy compression and denoising can easily be seen when one examines the similarity between thresholding and quantization, the latter of which is a necessary step in a practical lossy coder. That is, the quantization of wavelet coefficients with a zero-zone is an approximation to the thresholding function (see Fig. 1). Thus, provided that the quantization outside of the zero-zone does not introduce significant distortion, it follows that wavelet-based lossy compression achieves denoising. With this connection in mind, this paper is about wavelet thresholding for image denoising and also for lossy compression. The threshold choice helps the lossy coder choose its zero-zone, and the resulting coder achieves simultaneous denoising and compression if such a property is desired.

1057–7149/00$10.00 © 2000 IEEE

CHANG et al.: ADAPTIVE WAVELET THRESHOLDING FOR IMAGE DENOISING AND COMPRESSION 1533

Fig. 1. Thresholding function can be approximated by quantization with a

zero-zone.
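The correspondence in Fig. 1 can be illustrated with a minimal sketch. It assumes a uniform quantizer whose bins start at the edge of the zero-zone and are reconstructed at their midpoints; the function names, the bin width `delta`, and the midpoint rule are illustrative choices, not the coder design described later in the paper. Under these assumptions the quantizer output stays within `delta / 2` of the soft-thresholded value.

```python
import math


def soft_threshold(x, t):
    """Shrink x toward zero by t; inputs with |x| <= t map to 0."""
    return math.copysign(max(abs(x) - t, 0.0), x)


def zero_zone_quantize(x, t, delta):
    """Quantize x with a zero-zone [-t, t] and uniform bins of width delta.

    Assumption: each bin is reconstructed at its midpoint, measured from
    the edge of the zero-zone, so the staircase follows the soft-threshold
    curve rather than the identity.
    """
    excess = abs(x) - t
    if excess <= 0.0:
        return 0.0                      # inside the zero-zone
    k = math.floor(excess / delta)      # index of the uniform bin
    return math.copysign((k + 0.5) * delta, x)


if __name__ == "__main__":
    t, delta = 1.0, 0.5
    for x in (-2.3, -0.4, 0.0, 0.9, 1.2, 3.05):
        print(x, soft_threshold(x, t), zero_zone_quantize(x, t, delta))
```

A finer `delta` tightens the approximation at the cost of more quantization levels, which is the rate-distortion tradeoff the coder has to balance.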

The theoretical formalization of filtering additive iid Gaussian noise (of zero-mean and standard deviation $\sigma$) via thresholding wavelet coefficients was pioneered by Donoho and Johnstone [14]. A wavelet coefficient is compared to a given threshold and is set to zero if its magnitude is less than the threshold; otherwise, it is kept or modified (depending on the thresholding rule). The threshold acts as an oracle which distinguishes between the insignificant coefficients likely due to noise, and the significant coefficients consisting of important signal structures. Thresholding rules are especially effective for signals with sparse or near-sparse representations where only a small subset of the coefficients represents all or most of the signal energy. Thresholding essentially creates a region around zero where the coefficients are considered negligible. Outside of this region, the thresholded coefficients are kept to full precision (that is, without quantization). The best-known thresholding methods of Donoho and Johnstone include VisuShrink [14] and SureShrink [15]. These threshold choices enjoy asymptotic minimax optimalities over function spaces such as Besov spaces. For image denoising, however, VisuShrink is known to yield overly smoothed images. This is because its threshold choice, $\sigma\sqrt{2\log M}$ (called the universal threshold, where $\sigma^2$ is the noise variance), can be unwarrantedly large due to its dependence on the number of samples, $M$, which is more than $10^5$ for a typical test image of size $512 \times 512$. SureShrink uses a hybrid of the universal threshold and the SURE threshold, derived from minimizing Stein’s unbiased risk estimator [30], and has been shown to perform well. SureShrink will be the main comparison to the method proposed here, and, as will be seen later in this paper, our proposed threshold often yields better results.

Since the works of Donoho and Johnstone, there has been

much research on finding thresholds for nonparametric estima-

tion in statistics. However, few are specifically tailored for im-

ages. In this paper, we propose a framework and a near-op-

timal threshold in this framework more suitable for image de-

noising. This approach can be formally described as Bayesian,

but this only describes our mathematical formulation, not our

philosophy. The formulation is grounded on the empirical observation that the wavelet coefficients in a subband of a natural image can be summarized adequately by a generalized Gaussian distribution (GGD). This observation is well-accepted in the

image processing community (for example, see [20], [22], [23],

[29], [34], [36]) and is used for state-of-the-art image coders

in [20], [22], [36]. It follows from this observation that the av-

erage MSE (in a subband) can be approximated by the corre-

sponding Bayesian squared error risk with the GGD as the prior

applied to each in an iid fashion. That is, a sum is approximated

by an integral. We emphasize that this is an analytical approx-

imation and our framework is broader than assuming wavelet

coefficients are iid draws from a GGD. The goal is to find the

soft-threshold that minimizes this Bayesian risk, and we call our

method BayesShrink.

The proposed Bayesian risk minimization is subband-dependent. Given the signal being generalized Gaussian distributed and the noise being Gaussian, via numerical calculation a nearly optimal threshold for soft-thresholding is found to be $T_B = \sigma^2/\sigma_X$ (where $\sigma^2$ is the noise variance and $\sigma_X^2$ the signal variance). This threshold gives a risk within 5% of the minimal risk over a broad range of parameters in the GGD family. To make this threshold data-driven, the parameters $\sigma_X$ and $\beta$ are estimated from the observed data, one set for each subband.
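As a rough sketch of how such a data-driven threshold could be computed for one subband, the code below forms $\sigma^2/\sigma_X$ from plug-in estimates. The estimators are assumptions made for illustration, since this passage does not specify them: the noise level is taken from a median-based robust estimate on the finest diagonal subband, and $\sigma_X$ is obtained by subtracting the noise variance from the empirical second moment of the noisy coefficients. The function names are hypothetical.

```python
import math
import statistics


def estimate_noise_sigma(finest_diagonal_coeffs):
    """Robust noise estimate (assumed): median absolute coefficient / 0.6745.

    0.6745 is the median of |Z| for a standard normal Z, so the ratio is
    close to sigma when most coefficients in the subband are pure noise.
    """
    return statistics.median([abs(y) for y in finest_diagonal_coeffs]) / 0.6745


def bayes_shrink_threshold(subband_coeffs, sigma):
    """Return sigma^2 / sigma_X for one subband, with sigma_X estimated from data."""
    # Coefficients are modeled as zero-mean, so the second moment estimates Var(Y).
    var_y = sum(y * y for y in subband_coeffs) / len(subband_coeffs)
    # For independent signal and noise, Var(Y) = sigma_X^2 + sigma^2; clip at zero.
    sigma_x = math.sqrt(max(var_y - sigma ** 2, 0.0))
    if sigma_x == 0.0:
        # Noise dominates: use the largest magnitude so every coefficient is zeroed.
        return max(abs(y) for y in subband_coeffs)
    return sigma ** 2 / sigma_x
```

The clipping step is a design choice of this sketch: when the estimated signal variance is not positive, the closed form would divide by zero, so the threshold is set high enough to remove the whole subband.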

To achieve simultaneous denoising and compression, the nonzero thresholded wavelet coefficients need to be quantized. A uniform quantizer with centroid reconstruction is used on the GGD. The design parameters of the coder, such as the number of quantization levels and bin widths, are decided based on a criterion derived from Rissanen’s minimum description length (MDL) principle [26]. This criterion balances the tradeoff between the compression rate and distortion, and yields a nice interpretation of operating at a fixed slope on the rate-distortion curve.

The paper is organized as follows. In Section II, the wavelet

thresholding idea is introduced. Section II-A explains the

derivation of the BayesShrink threshold by minimizing a

Bayesian risk with squared error. The lossy compression based

on the MDL criterion is explained in Section III. Experimental

results on several test images are shown in Section IV and

compared with SureShrink. To benchmark against the best

possible performance of a threshold estimate, the compar-

isons also include OracleShrink, the best soft-thresholding

estimate obtainable assuming the original image known, and

OracleThresh, the best hard-thresholding counterpart. The

BayesShrink method often comes to within 5% of the MSEs

of OracleShrink, and is better than SureShrink up to 8% most

of the time, or is within 1% if it is worse. Furthermore, the

BayesShrink threshold is very easy to compute. BayesShrink with the additional MDL-based compression, as expected, introduces quantization noise to the image. This distortion may negate the denoising achieved by thresholding, especially when $\sigma$ is small. However, for larger values of $\sigma$, the MSE due to the lossy compression is still significantly lower than that of the noisy image, while fewer bits are used to code the image, thus achieving both denoising and compression.

II. WAVELET THRESHOLDING AND THRESHOLD SELECTION

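Let the signal be $\{f_{ij},\ i, j = 1, \ldots, N\}$, where $N$ is some integer power of 2. It has been corrupted by additive noise and one observes

$$g_{ij} = f_{ij} + \varepsilon_{ij}, \qquad i, j = 1, \ldots, N \tag{1}$$

where $\{\varepsilon_{ij}\}$ are independent and identically distributed (iid) as normal $N(0, \sigma^2)$ and independent of $\{f_{ij}\}$. The goal is to remove the noise, or “denoise” $\{g_{ij}\}$, and to obtain an estimate $\{\hat{f}_{ij}\}$ of $\{f_{ij}\}$ which minimizes the mean squared error (MSE),

$$\mathrm{MSE}(\hat{f}) = \frac{1}{N^2} \sum_{i,j=1}^{N} (\hat{f}_{ij} - f_{ij})^2 \tag{2}$$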


Fig. 2. Subbands of the 2-D orthogonal wavelet transform.

Let $\mathbf{g} = \{g_{ij}\}_{i,j}$, $\mathbf{f} = \{f_{ij}\}_{i,j}$, and $\boldsymbol{\varepsilon} = \{\varepsilon_{ij}\}_{i,j}$; that is, the boldfaced letters will denote the matrix representation of the signals under consideration. Let $\mathbf{Y} = \mathcal{W}\mathbf{g}$ denote the matrix of wavelet coefficients of $\mathbf{g}$, where $\mathcal{W}$ is the two-dimensional dyadic orthogonal wavelet transform operator, and similarly $\mathbf{X} = \mathcal{W}\mathbf{f}$ and $\mathbf{V} = \mathcal{W}\boldsymbol{\varepsilon}$. The readers are referred to references such as [23], [31] for details of the two-dimensional orthogonal wavelet transform. It is convenient to label the subbands of the transform as in Fig. 2. The subbands $HH_k$, $HL_k$, $LH_k$, $k = 1, \ldots, J$, are called the details, where $k$ is the scale, with $J$ being the largest (or coarsest) scale in the decomposition, and a subband at scale $k$ has size $N/2^k \times N/2^k$. The subband $LL_J$ is the low resolution residual, and $J$ is typically chosen large enough such that $N/2^J \ll N$ and $N/2^J > 1$. Note that since the transform is orthogonal, $\{V_{ij}\}$ are also iid $N(0, \sigma^2)$.

The wavelet-thresholding denoising method filters each coefficient $Y_{ij}$ from the detail subbands with a threshold function (to be explained shortly) to obtain $\hat{X}_{ij}$. The denoised estimate is then $\hat{\mathbf{f}} = \mathcal{W}^{-1}\hat{\mathbf{X}}$, where $\mathcal{W}^{-1}$ is the inverse wavelet transform operator.

There are two thresholding methods frequently used. The soft-threshold function (also called the shrinkage function)

$$\eta_T(x) = \mathrm{sgn}(x) \cdot \max(|x| - T, 0) \tag{3}$$

takes the argument and shrinks it toward zero by the threshold $T$. The other popular alternative is the hard-threshold function

$$\psi_T(x) = x \cdot \mathbf{1}\{|x| > T\} \tag{4}$$

which keeps the input if its magnitude is larger than the threshold $T$; otherwise, it is set to zero. The wavelet thresholding procedure removes noise by thresholding only the wavelet coefficients of the detail subbands, while keeping the low resolution coefficients unaltered.
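The procedure just described can be sketched in a few lines. The example below is a deliberately reduced illustration, not the transform used in the paper: it uses a single level of the orthonormal Haar transform on a 1-D signal in place of the 2-D dyadic transform, and all function names are hypothetical. It shows the three steps (transform, threshold the detail coefficients only, invert) together with the soft- and hard-threshold rules.

```python
import math


def soft_threshold(x, t):
    """Soft-threshold rule: sgn(x) * max(|x| - t, 0)."""
    return math.copysign(max(abs(x) - t, 0.0), x)


def hard_threshold(x, t):
    """Hard-threshold rule: keep x if |x| > t, otherwise 0."""
    return x if abs(x) > t else 0.0


def haar_forward(signal):
    """One level of the orthonormal Haar transform; len(signal) must be even."""
    s = math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) / s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / s for i in range(0, len(signal), 2)]
    return approx, detail


def haar_inverse(approx, detail):
    """Invert haar_forward exactly (the transform is orthogonal)."""
    s = math.sqrt(2.0)
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) / s, (a - d) / s])
    return out


def denoise(signal, t, rule=soft_threshold):
    """Threshold only the detail coefficients; leave the low-resolution part unaltered."""
    approx, detail = haar_forward(signal)
    detail = [rule(d, t) for d in detail]
    return haar_inverse(approx, detail)
```

With `t = 0` the signal is reconstructed exactly, which is a quick check that the transform pair is consistent; small differences between neighbouring samples are removed once `t` exceeds their detail coefficient.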

The soft-thresholding rule is chosen over hard-thresholding

for several reasons. First, soft-thresholding has been shown to

achieve near-optimal minimax rate over a large range of Besov

spaces [12], [14]. Second, for the generalized Gaussian prior

assumed in this work, the optimal soft-thresholding estimator

yields a smaller risk than the optimal hard-thresholding esti-

mator (to be shown later in this section). Lastly, in practice, the

soft-thresholding method yields more visually pleasant images

over hard-thresholding because the latter is discontinuous and

yields abrupt artifacts in the recovered images, especially when

the noise energy is significant. In what follows, soft-thresh-

olding will be the primary focus.

While the idea of thresholding is simple and effective, finding a good threshold is not an easy task. For a one-dimensional (1-D) deterministic signal of length $M$, Donoho and Johnstone [14] proposed for VisuShrink the universal threshold, $T_U = \sigma\sqrt{2\log M}$, which results in an estimate asymptotically optimal in the minimax sense (minimizing the maximum error over all possible $M$-sample signals). One other notable threshold is the SURE threshold [15], derived from minimizing Stein’s unbiased risk estimate [30] when soft-thresholding is used. The SureShrink method is a hybrid of the universal and the SURE threshold, with the choice being dependent on the energy of the particular subband [15]. The SURE threshold is data-driven, does not depend on $M$ explicitly, and SureShrink estimates it in a subband-adaptive manner. Moreover, SureShrink has yielded good image denoising performance and comes close to the true minimum MSE of the optimal soft-threshold estimator (cf. [4], [12]), and thus will be the main comparison to our proposed method.
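To give a sense of scale for the universal threshold $\sigma\sqrt{2\log M}$, the short sketch below evaluates it for an image with $512 \times 512$ samples. The image size is only an illustrative choice and the function name is hypothetical; the logarithm is the natural logarithm.

```python
import math


def universal_threshold(sigma, num_samples):
    """Universal threshold sigma * sqrt(2 * ln(M)) for M samples."""
    return sigma * math.sqrt(2.0 * math.log(num_samples))


if __name__ == "__main__":
    # For 512 * 512 samples the threshold is close to five noise standard deviations.
    print(universal_threshold(1.0, 512 * 512))
```

A threshold of roughly $5\sigma$ removes almost all noise coefficients but also many genuine detail coefficients, which is consistent with the oversmoothing attributed to VisuShrink.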

In the statistical Bayesian literature, many works have con-

centrated on deriving the best threshold (or shrinkage factor)

based on priors such as the Laplacian and a mixture of Gaus-

sians (cf. [1], [8], [9], [18], [24], [27], [29], [32], [35]). With an

integral approximation to the pixel-wise MSE distortion mea-

sure as discussed earlier, the formulation here is also Bayesian

for finding the best soft-thresholding rule under the general-

ized Gaussian prior. A related work is [27] where the hard-

thresholding rule is investigated for signals with Laplacian and

Gaussian distributions.

The GGD has been used in many subband or wavelet-based image processing applications [2], [20], [22], [23], [29], [34], [36]. In [29], it was observed that a GGD with the shape parameter $\beta$ ranging from 0.5 to 1 [see (5)] can adequately describe the wavelet coefficients of a large set of natural images. Our experience with images supports the same conclusion. Fig. 3 shows

the histogram of the wavelet coefficients of the images shown in

Fig. 9, against the generalized Gaussian curve, with the param-

eters labeled (the estimation of the parameters will be explained

later in the text.) A heuristic can be set forward to explain why

there are a large number of “small” coefficients but relatively

few “large” coefficients as the GGD suggests: the small ones

correspond to smooth regions in a natural image and the large

ones to edges or textures.

A. Adaptive Threshold for BayesShrink

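The GGD, following [20], is

$$GG_{\sigma_X,\beta}(x) = C(\sigma_X, \beta) \exp\left\{-\left[\alpha(\sigma_X, \beta)\,|x|\right]^{\beta}\right\} \tag{5}$$

$-\infty < x < \infty$, $\sigma_X > 0$, $\beta > 0$, where

$$\alpha(\sigma_X, \beta) = \sigma_X^{-1} \left[\frac{\Gamma(3/\beta)}{\Gamma(1/\beta)}\right]^{1/2}$$

and

$$C(\sigma_X, \beta) = \frac{\beta \cdot \alpha(\sigma_X, \beta)}{2\,\Gamma(1/\beta)}$$

and $\Gamma(t) = \int_0^{\infty} e^{-u} u^{t-1}\,du$ is the gamma function. The parameter $\sigma_X$ is the standard deviation and $\beta$ is the shape parameter. For a given set of parameters, the objective is to find a soft-threshold $T$ which minimizes the Bayes risk,

$$r(T) = E(\hat{X} - X)^2 = E_X E_{Y|X} (\hat{X} - X)^2 \tag{6}$$

where $\hat{X} = \eta_T(Y)$, $Y|X \sim N(x, \sigma^2)$, and $X \sim GG_{\sigma_X,\beta}$. Denote the optimal threshold by $T^*$,

$$T^*(\sigma_X, \beta) = \arg\min_T r(T) \tag{7}$$

which is a function of the parameters $\sigma_X$ and $\beta$. To our knowledge, there is no closed form solution for $T^*$ for this chosen prior, thus numerical calculation is used to find its value.¹

Fig. 3. Histogram of the wavelet coefficients of four test images, panels (a)–(d). For each image, from top to bottom it is fine to coarse scales: from left to right, they are the $HH$, $HL$, and $LH$ subbands, respectively.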
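The GGD density can be evaluated directly from its definition: a scale factor $\alpha = \sigma_X^{-1}[\Gamma(3/\beta)/\Gamma(1/\beta)]^{1/2}$, a normalizing constant $C = \beta\alpha/(2\Gamma(1/\beta))$, and the density $C\exp\{-(\alpha|x|)^\beta\}$. The sketch below is a minimal implementation under that parametrization; the function names are hypothetical. It also makes the two special cases easy to check numerically: $\beta = 2$ gives the Gaussian density and $\beta = 1$ the Laplacian.

```python
import math


def ggd_params(sigma_x, beta):
    """Return (alpha, C) for a GGD with standard deviation sigma_x and shape beta."""
    alpha = math.sqrt(math.gamma(3.0 / beta) / math.gamma(1.0 / beta)) / sigma_x
    c = beta * alpha / (2.0 * math.gamma(1.0 / beta))
    return alpha, c


def ggd_pdf(x, sigma_x, beta):
    """Density C * exp(-(alpha * |x|)^beta) of the generalized Gaussian."""
    alpha, c = ggd_params(sigma_x, beta)
    return c * math.exp(-((alpha * abs(x)) ** beta))


if __name__ == "__main__":
    # Peak heights for unit standard deviation: smaller beta gives a sharper peak.
    for beta in (0.5, 1.0, 2.0):
        print(beta, ggd_pdf(0.0, 1.0, beta))
```

Because $\alpha$ is scaled by $1/\sigma_X$, changing $\sigma_X$ stretches the density without changing its shape, so $\sigma_X$ and $\beta$ can be estimated separately.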

Before examining the general case, it is insightful to consider two special cases of the GGD: the Gaussian ($\beta = 2$) and the Laplacian ($\beta = 1$) distributions. The Laplacian case is particularly interesting, because it is analytically more tractable and is often used in image processing applications.

¹ It was observed that for the numerical calculation, it is more robust to obtain the value of $T^*$ from locating the zero-crossing of the derivative, $r'(T)$, than from minimizing $r(T)$ directly. In the Laplacian case, a recent work [17] derives an analytical equation for $T^*$ to satisfy and calculates $T^*$ by solving such an equation.

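Case 1: (Gaussian) $GG_{\sigma_X,\beta}(x) = N(0, \sigma_X^2)$ with $\beta = 2$. It is straightforward to verify that

$$r(T) = E(\eta_T(Y) - X)^2 \tag{8}$$

$$\phantom{r(T)} = \sigma^2\, w\!\left(\sigma_X^2/\sigma^2,\ T/\sigma\right) \tag{9}$$

where

$$w(\sigma_X^2, T) = \sigma_X^2 + 2\,(T^2 + 1 - \sigma_X^2)\,\bar{\Phi}\!\left(\frac{T}{\sqrt{1 + \sigma_X^2}}\right) - 2\,T\,(1 + \sigma_X^2)\,\phi(T, 1 + \sigma_X^2) \tag{10}$$

with the normal density function $\phi(x, \sigma^2) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{x^2}{2\sigma^2}\right)$ and the survival function of the standard normal $\bar{\Phi}(x) = \int_x^{\infty} \phi(t, 1)\,dt$.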


(a)

(b)

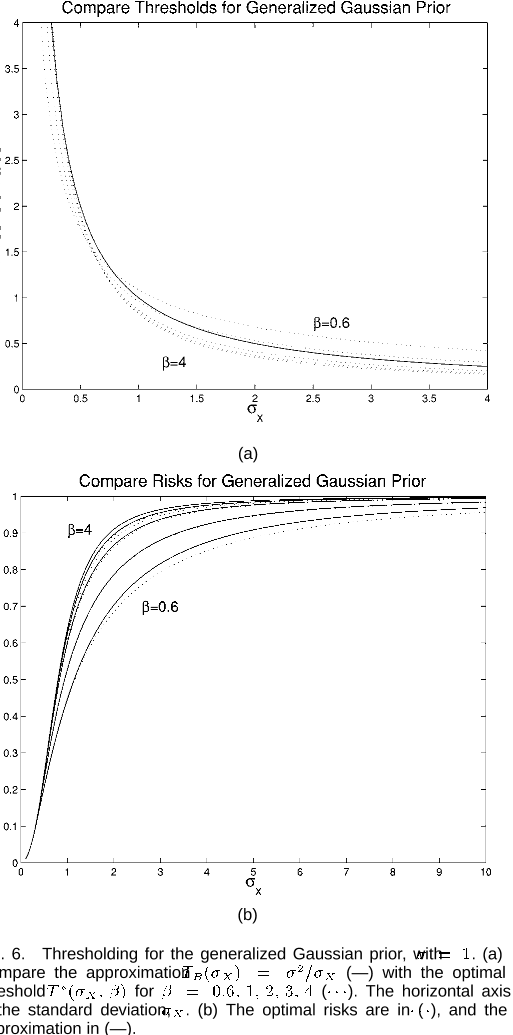

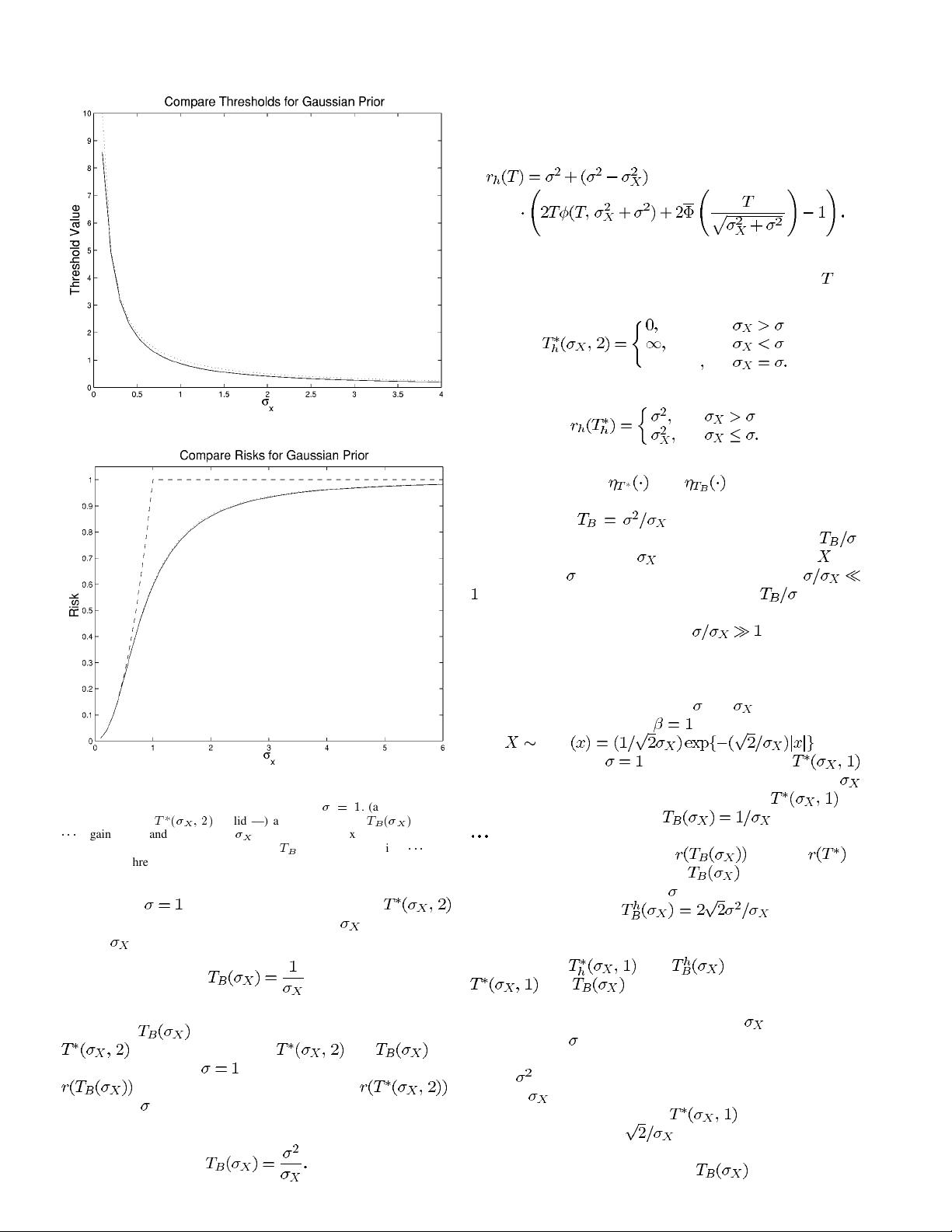

Fig. 4. Thresholding for the Gaussian prior, with

=1

. (a) Compare the

optimal threshold

T

(

;

2)

(solid —) and the threshold

T

(

)

(dotted

111

) against the standard deviation

on the horizontal axis. (b) Compare the

risk of using optimal soft-thresholding (—),

T

for soft-thresholding (

111

), and

optimal hard-thresholding (- - -).

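Assuming $\sigma = 1$ for the time being, the value of $T^*(\sigma_X, 2)$ is found numerically for different values of $\sigma_X$ and is plotted against $\sigma_X$ in Fig. 4(a), with

$$T_B(\sigma_X) = \frac{1}{\sigma_X} \tag{11}$$

superimposed on top. It is clear that this simple and closed-form expression, $T_B(\sigma_X)$, is very close to the numerically found $T^*(\sigma_X, 2)$. The expected risks of $T^*(\sigma_X, 2)$ and $T_B(\sigma_X)$ are shown in Fig. 4(b) for $\sigma = 1$, where the maximum deviation of $r(T_B)$ is less than 1% of the optimal risk, $r(T^*)$. For general $\sigma$, it is an easy scaling exercise to see that (11) becomes

$$T_B(\sigma_X) = \frac{\sigma^2}{\sigma_X} \tag{12}$$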
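The comparison between the numerically optimal threshold and $1/\sigma_X$ in the Gaussian case can be reproduced with a short sketch. It assumes unit noise variance and uses the closed-form soft-thresholding risk for a Gaussian prior, $w(\sigma_X^2, T) = \sigma_X^2 + 2(T^2 + 1 - \sigma_X^2)\bar\Phi(T/\sqrt{1+\sigma_X^2}) - 2T(1+\sigma_X^2)\phi(T, 1+\sigma_X^2)$, where $\phi(\cdot, v)$ is the zero-mean normal density with variance $v$. The brute-force grid search and all function names are choices made for this illustration; the footnote's zero-crossing approach would be an alternative.

```python
import math


def normal_pdf(x, var):
    """Zero-mean normal density with variance var."""
    return math.exp(-x * x / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def normal_survival(x):
    """P(Z > x) for a standard normal Z."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def soft_risk_gaussian(var_x, t):
    """Bayes risk of soft-thresholding at t: Gaussian prior, unit noise variance."""
    s2 = 1.0 + var_x  # variance of the noisy coefficient Y = X + V
    return (var_x
            + 2.0 * (t * t + 1.0 - var_x) * normal_survival(t / math.sqrt(s2))
            - 2.0 * t * s2 * normal_pdf(t, s2))


def compare_thresholds(sigma_x, t_max=10.0, steps=20000):
    """Return (T*, T_B, r(T_B) / r(T*)) with T* found by grid search."""
    var_x = sigma_x ** 2
    t_b = 1.0 / sigma_x
    candidates = [t_max * i / steps for i in range(steps + 1)] + [t_b]
    t_star = min(candidates, key=lambda t: soft_risk_gaussian(var_x, t))
    ratio = soft_risk_gaussian(var_x, t_b) / soft_risk_gaussian(var_x, t_star)
    return t_star, t_b, ratio


if __name__ == "__main__":
    for sigma_x in (0.5, 1.0, 2.0, 4.0):
        print(sigma_x, compare_thresholds(sigma_x))
```

Two limits give a quick sanity check of the risk expression: at $T = 0$ nothing is thresholded and the risk equals the noise variance 1, while for very large $T$ every coefficient is zeroed and the risk equals $\sigma_X^2$.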

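For a further comparison, the risk for hard-thresholding is also calculated. After some algebra, it can be shown that the risk for hard-thresholding (with $\sigma = 1$, $\phi(\cdot, v)$ the zero-mean normal density of variance $v$, and $\bar{\Phi}$ the standard normal survival function) is

$$r_h(T) = \sigma_X^2 + 2\,(1 - \sigma_X^2)\left[\bar{\Phi}\!\left(\frac{T}{\sqrt{1 + \sigma_X^2}}\right) + T\,\phi(T, 1 + \sigma_X^2)\right] \tag{13}$$

By setting to zero the derivative of (13) with respect to $T$, the optimal threshold is found to be

$$T_h^*(\sigma_X, 2) = \begin{cases} 0 & \text{if } \sigma_X > 1 \\ \infty & \text{if } \sigma_X < 1 \\ \text{anything} & \text{if } \sigma_X = 1 \end{cases} \tag{14}$$

with the associated risk

$$r_h(T_h^*) = \begin{cases} 1 & \text{if } \sigma_X \geq 1 \\ \sigma_X^2 & \text{if } \sigma_X < 1 \end{cases} \tag{15}$$

Fig. 4(b) shows that both the optimal and near-optimal soft-threshold estimators, $\eta_{T^*}(\cdot)$ and $\eta_{T_B}(\cdot)$, achieve lower risks than the optimal hard-threshold estimator.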
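The degenerate form of the optimal hard threshold under the Gaussian prior can be seen from a short calculation. The following is a sketch for $\sigma = 1$, writing $s^2 = 1 + \sigma_X^2$ for the variance of $Y$, $a = \sigma_X^2/s^2$ for the posterior-mean coefficient, and $\phi(\cdot, s^2)$ for the density of $Y$; these symbols are introduced here only for the derivation.

```latex
\begin{align*}
r_h(T) &= E\,\mathrm{Var}(X \mid Y) + E\bigl(\psi_T(Y) - aY\bigr)^2 \\
       &= \frac{\sigma_X^2}{s^2} + a^2\,E[Y^2] + \bigl[(1-a)^2 - a^2\bigr]\,E\bigl[Y^2\,\mathbf{1}\{|Y| > T\}\bigr] \\
       &= \sigma_X^2 + \frac{1 - \sigma_X^2}{1 + \sigma_X^2}\,E\bigl[Y^2\,\mathbf{1}\{|Y| > T\}\bigr], \\[4pt]
\frac{d r_h}{dT} &= -\,\frac{2\,(1 - \sigma_X^2)}{1 + \sigma_X^2}\,T^2\,\phi(T, 1 + \sigma_X^2).
\end{align*}
```

The derivative never changes sign for $T > 0$. When $\sigma_X < 1$ it is negative, so the risk keeps decreasing and the best choice is $T = \infty$ (estimate zero, risk $\sigma_X^2$); when $\sigma_X > 1$ it is positive, so the best choice is $T = 0$ (keep the noisy coefficient, risk 1); when $\sigma_X = 1$ the risk does not depend on $T$.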

The threshold $T_B(\sigma_X) = \sigma^2/\sigma_X$ is not only nearly optimal but also has an intuitive appeal. The normalized threshold, $T_B/\sigma$, is inversely proportional to $\sigma_X$, the standard deviation of $X$, and proportional to $\sigma$, the noise standard deviation. When $\sigma/\sigma_X \ll 1$, the signal is much stronger than the noise, and $T_B/\sigma$ is chosen to be small in order to preserve most of the signal and remove some of the noise; vice versa, when $\sigma/\sigma_X \gg 1$, the noise dominates and the normalized threshold is chosen to be large to remove the noise which has overwhelmed the signal. Thus, this threshold choice adapts to both the signal and noise characteristics as reflected in the parameters $\sigma$ and $\sigma_X$.

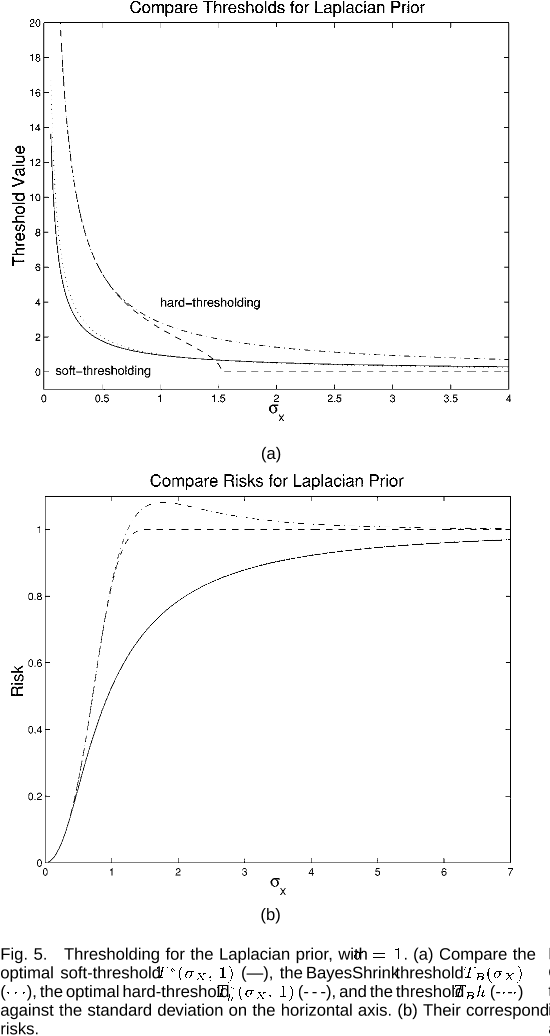

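Case 2: (Laplacian) With $\beta = 1$, the GGD becomes Laplacian: $GG_{\sigma_X,1}(x) = \mathrm{LAP}(\sqrt{2}/\sigma_X) = \frac{1}{\sqrt{2}\,\sigma_X} e^{-\sqrt{2}\,|x|/\sigma_X}$. Again for the time being let $\sigma = 1$. The optimal threshold $T^*(\sigma_X, 1)$ found numerically is plotted against the standard deviation $\sigma_X$ on the horizontal axis in Fig. 5(a). The curve of $T^*(\sigma_X, 1)$ (in solid line —) is compared with $T_B(\sigma_X) = 1/\sigma_X$ (in dotted line $\cdots$) in Fig. 5(a). Their corresponding expected risks are shown in Fig. 5(b), and the deviation of $r(T_B)$ from $r(T^*)$ is less than 0.8%. This suggests that $T_B(\sigma_X)$ also works well in the Laplacian case. For general $\sigma$, (12) holds again.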
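A numerical check of the Laplacian case can be sketched as follows, assuming unit noise variance. For a fixed signal value $x$, the risk of soft-thresholding a single $N(x, 1)$ observation has a standard closed form; the Bayes risk is then a one-dimensional integral of that expression against the Laplacian density with standard deviation $\sigma_X$. The trapezoidal integration, the truncation point, the grid search over $T$, and the function names are all choices made for this illustration.

```python
import math


def std_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def std_cdf(z):
    """Standard normal distribution function."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def soft_risk_given_x(t, x):
    """E(eta_t(x + Z) - x)^2 for Z ~ N(0, 1): soft-threshold risk at signal value x."""
    return (1.0 + t * t
            + (x * x - t * t - 1.0) * (std_cdf(t - x) - std_cdf(-t - x))
            - (t - x) * std_pdf(t + x)
            - (t + x) * std_pdf(t - x))


def laplacian_bayes_risk(t, sigma_x, n=1000):
    """Average soft_risk_given_x over a Laplacian prior with standard deviation sigma_x."""
    rate = math.sqrt(2.0) / sigma_x
    upper = 25.0 / rate            # prior mass beyond this point is about exp(-25)
    h = upper / n
    total = 0.0
    for i in range(n + 1):
        x = i * h
        weight = 0.5 if i in (0, n) else 1.0   # trapezoidal rule
        # The risk is symmetric in x, so integrate over x >= 0 with doubled density.
        total += weight * soft_risk_given_x(t, x) * rate * math.exp(-rate * x)
    return total * h


def compare_thresholds(sigma_x, t_max=6.0, steps=120):
    """Return (T*, T_B, r(T_B) / r(T*)) with T* found by grid search."""
    t_b = 1.0 / sigma_x
    candidates = [t_max * i / steps for i in range(steps + 1)] + [t_b]
    risks = {t: laplacian_bayes_risk(t, sigma_x) for t in candidates}
    t_star = min(risks, key=risks.get)
    return t_star, t_b, risks[t_b] / risks[t_star]


if __name__ == "__main__":
    for sigma_x in (0.5, 1.0, 2.0):
        print(sigma_x, compare_thresholds(sigma_x))
```

The grid over $T$ is coarse, so the reported $T^*$ is only accurate to the grid spacing; the risk ratio is much less sensitive because the risk curve is flat near its minimum.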

The threshold choice

was found in-

dependently in [27] for approximating the optimal hard-thresh-

olding using the Laplacian prior. Fig. 5(a) compares the optimal

hard-threshold,

, and to the soft-thresholds

and . The corresponding risks are plotted

in Fig. 5(b), which shows the soft-thresholding rule to yield a lower risk for this chosen prior. In fact, for $\sigma_X$ larger than approximately $1.3\sigma$, the risk of the approximate hard-threshold is worse than if no thresholding were performed (which yields a risk of $\sigma^2$).

When

tends to infinity or the SNR is going to infinity,

an asymptotic approximation of

is derived in [17] in

this Laplacian case to be

. However, in the same article,

this asymptotic approximation is outperformed in seven test images by our proposed threshold, $T_B(\sigma_X)$.