Roffo, G., Melzi, S., Castellani, U. and Vinciarelli, A. (2017) Infinite Latent Feature Selection: A Probabilistic Latent Graph-Based Ranking Approach. In: IEEE International Conference on Computer Vision (ICCV 2017), Venice, Italy, 22-29 Oct 2017, pp. 1407-1415. (doi:10.1109/ICCV.2017.156)

This is the author’s final accepted version. There may be differences between this version and the published version. You are advised to consult the publisher’s version if you wish to cite from it.

http://eprints.gla.ac.uk/149366/

Deposited on: 06 October 2017

Enlighten – Research publications by members of the University of Glasgow

http://eprints.gla.ac.uk

Infinite Latent Feature Selection:

A Probabilistic Latent Graph-Based Ranking Approach

Giorgio Roffo

University of Glasgow

Giorgio.Roffo@Glasgow.ac.uk

Simone Melzi

University of Verona

Simone.Melzi@univr.it

Umberto Castellani

University of Verona

Umberto.Castellani@univr.it

Alessandro Vinciarelli

University of Glasgow

Alessandro.Vinciarelli@Glasgow.ac.uk

Abstract

Feature selection is playing an increasingly significant role with respect to many computer vision applications spanning from object recognition to visual object tracking. However, most of the recent solutions in feature selection are not robust across different and heterogeneous sets of data. In this paper, we address this issue by proposing a robust probabilistic latent graph-based feature selection algorithm that performs the ranking step while considering all the possible subsets of features, as paths on a graph, bypassing the combinatorial problem analytically. An appealing characteristic of the approach is that it aims to discover an abstraction behind low-level sensory data, that is, relevancy. Relevancy is modelled as a latent variable in a PLSA-inspired generative process that allows the investigation of the importance of a feature when injected into an arbitrary set of cues. The proposed method has been tested on ten diverse benchmarks, and compared against eleven state-of-the-art feature selection methods. Results show that the proposed approach attains the highest performance levels across many different scenarios and difficulties, thereby confirming its strong robustness while setting a new state of the art in the feature selection domain.

1. Introduction

The performance of machine learning methods is heavily dependent on the choice of features on which they are applied. Different features can entangle and hide the different explanatory factors of variation behind the data. Feature Selection (FS) aims at improving the performance of a prediction system, allowing faster and more cost-effective models, while providing a better understanding of the inherent regularities in data. In the recent computer vision literature there are many scenarios where FS is a crucial operation [5, 30, 10, 13, 24, 28]. These range from multiview face recognition [13], where FS is used to speed up the recognition process while maintaining the generalization performance, to object recognition [30], and to real-time visual object tracking [28, 25], where FS dynamically identifies discriminative features that help in handling the appearance variability of the target, thereby improving tracking performance.

In this paper, we propose a probabilistic latent graph-based feature selection algorithm that performs the ranking step by considering all the possible subsets of features, exploiting the convergence properties of power series of matrices. We map the feature selection problem to an affinity graph (e.g., feature ≈ node), and then we consider a subset of features as a path connecting a set of nodes. An appealing characteristic of the approach is that the importance of a given feature is modelled as the conditional probability of a latent variable given the feature, namely $P(z|f)$. Our approach aims to model an important hidden variable behind data, that is, relevancy in features. Raw values are observable while relevancy to a particular task (e.g., classification) is not; therefore, relevancy is modelled as an abstract latent variable. In particular, our approach consists of three main parts:

• Pre-processing: a quantization process is applied on raw feature distributions $\vec{x}_i$, mapping their values to a countable nominal smaller set of tokens. The pre-processing step assigns a descriptor $f_i$ to each raw feature $\vec{x}_i$.

• Graph-Weighting: we build an undirected fully-connected graph, where nodes correspond, one by one, to each feature $f_i$, and each weighted edge between $f_i$ and $f_j$ models the probability that features $x_i$ and $x_j$ are relevant. Weights are learnt automatically by a learning framework based on a variation of the probabilistic latent semantic analysis (PLSA) technique [21], which models the probability of each co-occurrence in $f_i$, $f_j$ as a mixture of conditionally independent multinomial distributions. Parameters are estimated using the Expectation Maximization (EM) algorithm.

• Ranking: the ranking step follows the idea of Infinite Feature Selection (Inf-FS) [30], which considers all the possible paths among nodes, investigating the redundancy of any feature when injected into arbitrary sets of cues.

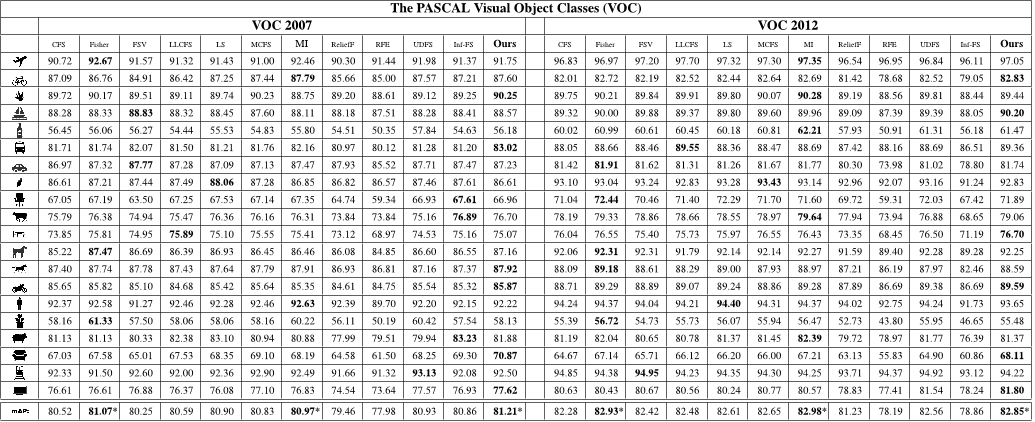

The proposed method is compared against 11 state-of-the-art feature selection methods selected from the recent literature in the machine learning and pattern recognition domains, reporting results for a total of 576 unique tests (note, the source code is available at Matlab-Central). We selected 10 publicly available benchmarks: cancer classification and prediction on DNA microarray data (Colon [32], Lymphoma [14], Leukemia [14], Lung [15], Prostate [1]), handwritten character recognition (GINA [2]), text classification from the NIPS feature selection challenge (DEXTER [18]), and a movie reviews corpus for sentiment analysis (POLARITY [26]). In addition, two object recognition datasets have been taken into account (PASCAL VOC 2007-2012 [11, 12]). Results show that the proposed approach is the most robust algorithm, achieving the highest level of performance across many different domains and challenging scenarios.

The rest of the paper is organized as follows: Sec. 2 illustrates the related literature, mostly focusing on the comparative approaches we consider in this study. Sec. 3 details the proposed approach, also giving a formal justification and interpretation based on absorbing Markov chains (Sec. 3.4). Extensive experiments are reported in Sec. 4, and, finally, in Sec. 5, conclusions are given and future perspectives are envisaged.

2. Related Work

In the mid-1990s, few domains used more than 20 features. The situation has changed considerably in the past few years, and most papers now explore domains with hundreds to tens of thousands of features. New approaches were proposed to address these challenging tasks involving many irrelevant and redundant variables and often comparably few training examples. Typically, FS techniques are partitioned into three classes [19]: Filters, Wrappers and Embedded methods. The proposed approach is a filter method, which analyzes intrinsic properties of data, ignoring the type of classifier. Conversely, wrappers use classifiers to score a given subset of features, and embedded methods inject the selection process directly into the learning process of the classification framework.

Among the most used filter-based strategies, Relief-F [23] is an iterative, randomized, and supervised approach that estimates the quality of the features according to how well their values differentiate data samples that are near to each other. Another effective yet fast filter method is the Fisher method [17], which computes a score for a feature as the ratio of inter-class separation and intra-class variance, where features are evaluated independently. A Mutual Information based approach (MI) is proposed in [35]. MI considers as a selection criterion the mutual information between the distribution of the values of a given feature and the membership to a particular class. In this case too, features are evaluated independently, and the final feature selection occurs by aggregating the m top ranked ones. In unsupervised learning scenarios, a widely used method is the Laplacian Score (LS) [20], where the importance of a feature is evaluated by its locality-preserving power. In order to model the local geometric structure, this method constructs a nearest neighbor graph. The LS algorithm seeks those features that respect this graph structure. Unsupervised feature selection for multi-cluster data, denoted MCFS [8], selects those features such that the multi-cluster structure of the data can be best preserved. An $\ell_{2,1}$-norm regularized discriminative feature selection method for unsupervised learning (UDFS) was proposed in [34]; it selects the most discriminative feature subset from the whole feature set in batch mode. Feature selection and kernel learning for local learning-based clustering (LLCFS) [36] associates a weight to each feature and incorporates it into the built-in regularization of the LLC algorithm to take into account the relevance of each feature for the clustering. In the experiments, we also compare our approach against the unsupervised graph-based filter method dubbed Inf-FS [30]. In the Inf-FS formulation, each feature is a node in the graph, a path is a selection of features, and the higher the centrality score, the more important (or more different) the feature. Another widely used FS method is SVM-RFE (RFE) [19], a wrapper method that selects features in a sequential, backward elimination manner, ranking a feature high if it strongly separates the samples by means of a linear SVM. Finally, among the embedded methods, feature selection via concave minimization (FSV) [7] is a popular FS strategy, where the selection process is injected into the training of an SVM by a linear programming technique. For further information, please see Tab. 2.

3. Our Approach

Given a training set $X$ represented as a set of feature distributions $X = \{\vec{x}_1, ..., \vec{x}_n\}$, where each $m \times 1$ vector $\vec{x}_i$ is the distribution of the values assumed by the $i$-th feature with regard to the $m$ samples, we build an undirected graph $G$, where nodes correspond to features and edges model relationships among pairs of nodes. Let the adjacency matrix $A$ associated with $G$ define the nature of the weighted edges: each element $a_{ij}$ of $A$, $1 \le i, j \le n$, models the pairwise relationship between features $i$ and $j$. Each weight represents the likelihood that features $\vec{x}_i$ and $\vec{x}_j$ are good candidates. Weights can be associated with a binary function of the graph nodes:

$$a_{ij} = \varphi(\vec{x}_i, \vec{x}_j), \tag{1}$$

where $\varphi(\cdot, \cdot)$ is a real-valued potential function learned by the proposed approach in a PLSA-inspired framework. The learning framework models the probability of each co-occurrence in $\vec{x}_i$, $\vec{x}_j$ as a mixture of conditionally independent multinomial distributions, where parameters are learnt using the EM algorithm. Given the weighted graph $G$, the proposed approach analyses subsets of features as paths connecting them. The cost of each path is given by the joint probability of all the nodes belonging to it. The method exploits the convergence property of the power series of matrices as in [30], and evaluates in an elegant fashion the relevance of each feature with respect to all the other ones taken together. For this reason, we dub our approach infinite latent feature selection (ILFS).

3.1. Discriminative Quantization process

Since the number of possible distinct values in $\vec{x}_i$ is huge, we map this large set of values to a countable smaller set, hereinafter referred to as the set of tokens. Tokens are the words of our dictionary of features. Thus, each feature will be represented by a new low-dimensional vocabulary of meaningful tokens. Each value is assigned to a specific token by a quantization process, which we call discriminative quantization (DQ). The rationale behind the DQ process is to take into account how well a given feature is representative of a class before performing the many-to-few mapping.

Firstly, the Fisher criterion is used to compute a scoring vector $\Phi = [\cdot, ..., \cdot]$ which takes into account both means and standard deviations of the classes, for each sample and feature. In binary classification scenarios, this is given by

$$\Phi = \frac{1}{Z}\left[\frac{(s - \mu_1)^2}{\sigma_1^2 + \sigma_2^2},\ \frac{(s - \mu_2)^2}{\sigma_1^2 + \sigma_2^2}\right], \tag{2}$$

where $s$ is a sample from the $i$-th feature $\vec{x}_i$, and $\mu_k$ and $\sigma_k$ denote the mean and standard deviation of class $k$, respectively. A normalization factor $Z$ is introduced to ensure that the scores are a valid distribution over both classes. A natural generalization of these scores into a multi-class framework is given by

$$\Phi = \frac{1}{Z}\left[\frac{(s - \mu_1)^2}{\sum_{k=1}^{K} \sigma_k^2},\ ...,\ \frac{(s - \mu_K)^2}{\sum_{k=1}^{K} \sigma_k^2}\right], \quad \forall_{k \in K} \tag{3}$$

where $K$ is the number of classes and $s$ is a single sample from the $i$-th feature. Therefore, considering all the samples, $\Phi$ is an $m \times K$ matrix.

Now, let us assume that the sample $s$ belongs to class $k$. If $\vec{x}_i$ is a strong discriminant feature, $s$ will score high at $\Phi_k$. Then, we derive our priors $\pi$ by extracting $\Phi$ scores for each feature according to the ground truth as follows:

$$\pi = \mathrm{diag}(\Phi Y)$$

where $Y$ is the 1-of-K representation of the ground truth. It is a particularly convenient representation where the class labels are represented by $K$-dimensional vectors in which one of the elements equals 1, and all remaining elements equal 0. As a result, $\pi \in [0, 1]$ is a $1 \times m$ vector containing a score for each element of a particular feature $i$. It takes into account how well each element is represented by the feature $i$ according to Eq. 3.

Figure 1. Illustration of the general structure of the model. (a) The intermediate layer of latent topics that links the features and the tokens. (b) The graphical model using plate representation.

Finally, quantization is performed. The first step is to divide the entire range of values $[0, 1]$ into a series of $T$ intervals (we use $T = 6$ in this work: interval 1 corresponds to not-well-represented samples, and interval 6 is associated with well-represented samples). Secondly, we assign a token to the values falling into each interval. Given the outcomes of the DQ process, we obtain a meaningful new representation of our training data $X$ in the form of $F = \{f_1, ..., f_n\}$, where each feature is described by a vocabulary of few tokens. In other words, the derived feature representation $f_i$ comes from $\vec{x}_i$, where each value is assigned to one of the $T$ tokens. According to this formulation, a strongly discriminative feature will intuitively be associated with a descriptor $f_i$ containing many relatively large tokens (e.g., 5, 6) rather than small ones (e.g., 1, 2).
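The quantization step can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: it assumes that the $T$ intervals have equal width and that a score of exactly 1 falls into the last interval. The function names `quantize` and `token_counts` and the example scores are hypothetical.

```python
def quantize(pi, n_tokens=6):
    """Map each score in [0, 1] to a token in {1, ..., n_tokens}.

    Assumption: equal-width intervals; a score of exactly 1.0 is placed
    in the last interval so that every score receives a valid token.
    """
    return [min(int(p * n_tokens) + 1, n_tokens) for p in pi]


def token_counts(tokens, n_tokens=6):
    """Count how many times each token occurs in one feature descriptor."""
    counts = [0] * n_tokens
    for t in tokens:
        counts[t - 1] += 1
    return counts


# Hypothetical scores for the m = 5 samples of a single feature.
pi = [0.0, 0.1, 0.5, 0.99, 1.0]
f_i = quantize(pi)            # descriptor of the feature: [1, 1, 4, 6, 6]
print(f_i, token_counts(f_i))
```

Counting tokens per descriptor, as `token_counts` does, gives a compact summary of how many samples of a feature fall into each interval.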

3.2. From co-occurrences to graph weighting

Weighting the graph according to the nodes' discriminatory power has a great influence on the quality of the ranking process. We designed a framework to automatically perform the graph weighting from training data, such that the learnt parameters can be used to sort features according to their degrees of relevance or importance.

Our solution is based on a variation of the PLSA [21] technique that considers co-occurrences of tokens and features, $\langle t, f \rangle$, to model the probability of each co-occurrence as a mixture of conditionally independent multinomial distributions.

In order to better understand the intuition behind the proposed model, we need to make some assumptions. We assume that a feature consists of only two topics representing the two main latent variables of any feature selection algorithm: Relevancy and Irrelevancy. Therefore, we introduce an unobserved class variable $Z = \{z_1, z_2\}$, obtaining a latent variable model for co-occurrence tokens. As a result, there is a distribution $P(z|f)$ over the fixed number of topics for each feature $f$. Similarly, the original PLSA model does not have an explicit specification of this distribution, but it is indeed a multinomial distribution where $P(z|f)$ represents the probability that topic $z$ appears in feature $f$. Fig. 1(a) shows the general structure of the model: each feature can be represented as a mixture of concepts (Relevant/Irrelevant) weighted by the probability $P(z|f)$, and each token expresses a topic with probability $P(t|z)$. Fig. 1(b) describes the generative process for each of the $n$ features in the set by using plate representation. We can write the probability of a token $t$ appearing in feature $f$ as follows:

$$P(t|f) = P(t|z_1)P(z_1|f) + P(t|z_2)P(z_2|f).$$

By replacing this for any feature in the set $F$ we obtain,

$$P(f) = \prod_{t} \left\{ P(t|z_1)P(z_1|f) + P(t|z_2)P(z_2|f) \right\}.$$

The unknown parameters of this model are $P(t|z)$ and $P(z|f)$. As for PLSA, we derived the equations for computing these parameters by maximum likelihood. The log-likelihood function is given by

$$L = \sum_{f} \sum_{t} Q(f, t) \log[P(t|f)]$$

where $Q(f, t)$ is the number of times token $t$ appears in feature $f$. The EM algorithm is used to compute optimal parameters. The E-step is given by

$$P(z|f, t) = \frac{P(z)P(f|z)P(t|z)}{P(z_1)P(f|z_1)P(t|z_1) + P(z_2)P(f|z_2)P(t|z_2)},$$

and the M-step is given by

$$P(t|z) = \frac{\sum_{f} Q(f, t)P(z|f, t)}{\sum_{f, t'} Q(f, t')P(z|f, t')},$$

$$P(f|z) = \frac{\sum_{t} Q(f, t)P(z|f, t)}{\sum_{f', t} Q(f', t)P(z|f', t)},$$

$$P(z) = \frac{\sum_{f, t} Q(f, t)P(z|f, t)}{\sum_{f, t} Q(f, t)}.$$
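The E-step and M-step above can be sketched as follows. This is a minimal pure-Python illustration under stated assumptions, not the authors' code: $P(f|z)$ and $P(z)$ are assumed to start uniform, a fixed number of iterations replaces a convergence test, and the names `plsa_em`, `topic_given_feature`, and the toy count matrix `Q` are hypothetical. The mixing weight $P(z|f)$ is recovered from the estimated $P(f|z)$ and $P(z)$ with Bayes' rule.

```python
def normalise(v):
    """Scale non-negative numbers to sum to 1 (uniform if they are all zero)."""
    total = sum(v)
    if total == 0:
        return [1.0 / len(v)] * len(v)
    return [x / total for x in v]


def plsa_em(Q, p_t_z, n_iter=50):
    """EM for the latent-topic model; Q[f][t] counts token t in feature f.

    p_t_z[z][t] is the initial P(t|z). Returns (P(t|z), P(f|z), P(z)).
    Assumption: P(f|z) and P(z) start uniform and every topic keeps
    non-zero mass, so the M-step divisions are well defined.
    """
    n, T, K = len(Q), len(Q[0]), len(p_t_z)
    p_t_z = [normalise(row) for row in p_t_z]
    p_f_z = [[1.0 / n] * n for _ in range(K)]
    p_z = [1.0 / K] * K
    grand_total = sum(map(sum, Q))
    for _ in range(n_iter):
        # E-step: posterior P(z|f,t) for every (feature, token) pair.
        post = [[normalise([p_z[z] * p_f_z[z][f] * p_t_z[z][t] for z in range(K)])
                 for t in range(T)] for f in range(n)]
        # M-step: re-estimate the three distributions from weighted counts.
        for z in range(K):
            w = [[Q[f][t] * post[f][t][z] for t in range(T)] for f in range(n)]
            mass = sum(map(sum, w))
            p_t_z[z] = [sum(w[f][t] for f in range(n)) / mass for t in range(T)]
            p_f_z[z] = [sum(w[f]) / mass for f in range(n)]
            p_z[z] = mass / grand_total
    return p_t_z, p_f_z, p_z


def topic_given_feature(p_f_z, p_z):
    """Bayes' rule: P(z|f) is proportional to P(f|z) P(z)."""
    n, K = len(p_f_z[0]), len(p_z)
    return [normalise([p_f_z[z][f] * p_z[z] for z in range(K)]) for f in range(n)]


# Toy counts for n = 3 features over T = 6 tokens (hypothetical data).
Q = [[0, 0, 0, 1, 3, 6],   # mostly large tokens
     [5, 3, 1, 1, 0, 0],   # mostly small tokens
     [1, 1, 2, 2, 2, 2]]
init = [[1, 2, 3, 4, 5, 6],   # topic 0 initially favours large tokens
        [6, 5, 4, 3, 2, 1]]   # topic 1 initially favours small tokens
p_t_z, p_f_z, p_z = plsa_em(Q, init)
p_z_f = topic_given_feature(p_f_z, p_z)   # p_z_f[f][0] plays the role of P(z_1|f)
```

In this toy run the feature dominated by large tokens receives a higher weight on the first topic than the feature dominated by small tokens, because the initial $P(t|z)$ ties that topic to the large tokens.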

The responsibility for assigning the “condition of being relevant” to features lies to a great extent with the unobserved class variable $Z$. In particular, we initialize the model priors $P(t|z)$ in order to link $z_1$ to the abstract topic of Relevancy, and hence $z_2$ to Irrelevancy. By construction, we limited the range of the tokens to values between 1 and 6 (see Sec. 3.1), with 1 being the lowest rating for a sample of a particular feature, and 6 being the highest. As a result, a natural way to initialize these priors is to generate a pair of linearly spaced vectors, assigning a higher probability $P(t'|Z = z_1)$ to those tokens $t'$ which score higher, and the opposite for $P(t'|Z = z_2)$.
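One possible reading of this initialization is sketched below. The text only states that the two vectors are linearly spaced, so the end points used here (a ramp over 1 to $T$ before normalization) are an assumption, and `init_token_priors` is a hypothetical name.

```python
def init_token_priors(n_tokens=6):
    """Return (P(t|z1), P(t|z2)) as linearly spaced, normalised vectors.

    Assumption: the ramp runs over 1..n_tokens before normalisation; the
    source only states that the two vectors are linearly spaced.
    """
    ramp = list(range(1, n_tokens + 1))
    total = sum(ramp)
    relevant = [r / total for r in ramp]   # increasing: favours large tokens
    irrelevant = relevant[::-1]            # decreasing: favours small tokens
    return relevant, irrelevant


p_t_z1, p_t_z2 = init_token_priors()
```

Because the two vectors mirror each other, the only thing that distinguishes the topics at the start of EM is which end of the token range they favour.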

Finally, the graph can be weighted by the estimated probability distribution $P(Z = z_1|f)$. According to Eq. 1, each element $a_{ij}$ of the adjacency matrix is the joint probability that the abstract topic of relevancy appears in features $f_i$ and $f_j$, namely:

$$a_{ij} = \varphi(\vec{x}_i, \vec{x}_j) = P(Z = z_1|f_i)\,P(Z = z_1|f_j), \tag{4}$$

where the mixing weights $P(Z = z_1|f_i)$ and $P(Z = z_1|f_j)$ are conditionally independent. Indeed, knowing whether the topic of relevancy appears in $f_i$ provides no information on the likelihood of it appearing in $f_j$, and vice versa.

3.3. Probabilistic Infinite Feature Selection

Let $\gamma = \{v_0 = i, v_1, ..., v_{l-1}, v_l = j\}$ denote a path of length $l$ between nodes $i$ and $j$, that is, features $\vec{x}_i$ and $\vec{x}_j$, through other nodes $v_1, ..., v_{l-1}$. For simplicity, suppose that the length $l$ of the path is lower than the total number of nodes $n$ in the graph. In this setting, a path is simply a subset of the available features/nodes that come into play. Moreover, the network is characterized by walk structure [6], where nodes and edges can be visited multiple times.

We can then estimate the joint probability that $\gamma$ is a good subset of features as

$$P_{\gamma} = \prod_{k=0}^{l-1} a_{v_k, v_{k+1}}. \tag{5}$$
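As a small worked illustration of Eq. 5, the sketch below multiplies the edge weights along a given sequence of nodes. The matrix values and the name `path_probability` are hypothetical; the length of a path is the number of edges it traverses.

```python
from math import prod


def path_probability(A, path):
    """Product of the edge weights along consecutive nodes of the path."""
    return prod(A[u][v] for u, v in zip(path, path[1:]))


# Hypothetical symmetric adjacency matrix for three features.
A = [[0.81, 0.45, 0.09],
     [0.45, 0.25, 0.05],
     [0.09, 0.05, 0.01]]

p_direct = path_probability(A, [0, 2])       # length 1: a_02
p_via_1 = path_probability(A, [0, 1, 2])     # length 2: a_01 * a_12
p_walk = path_probability(A, [0, 1, 0, 2])   # length 3: a walk may revisit node 0
```

Since every weight lies in $[0, 1]$, adding edges to a path can never increase its probability.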

Let us define the set $P^{l}_{i,j}$ as containing all the paths of length $l$ between $i$ and $j$; to account for the energy of all the paths of length $l$, we sum them as follows:

$$C_l(i, j) = \sum_{\gamma \in P^{l}_{i,j}} P_{\gamma}, \tag{6}$$

which, following standard matrix algebra, gives:

$$C_l(i, j) = A^{l}(i, j),$$

![Table 2. Feature selection approaches considered in the experiments [29, 27]. The table reports their Type, class (Cl.), complexity (Compl.), and execution times in seconds (Exec.Time). As for the complexity, T is the number of samples, n is the number of initial features, i is the number of iterations in the case of iterative algorithms, and C is the number of classes.](/figures/table-2-feature-selection-approaches-considered-in-the-2in0z9sm.png)