JUNE 2006 IEEE Robotics & Automation Magazine

99

TUTORIAL

Simultaneous Localization

and Mapping: Part I

BY HUGH DURRANT-WHYTE AND TIM BAILEY

T

he simultaneous localization and mapping (SLAM)

problem asks if it is possible for a mobile robot to be

placed at an unknown location in an unknown envi-

ronment and for the robot to incrementally build a consistent

map of this environment while simultaneously determining its

location within this map. A solution to the SLAM problem

has been seen as a “holy grail” for the mobile robotics com-

munity as it would provide the means to make a robot truly

autonomous.

The “solution” of the SLAM problem has been one of the

notable successes of the robotics community over the past

decade. SLAM has been formulated and solved as a theoretical

problem in a number of different forms. SLAM has also been

implemented in a number of different domains from indoor

robots to outdoor, underwater, and airborne systems. At a

theoretical and conceptual level, SLAM can now be consid-

ered a solved problem. However, substantial issues remain in

practically realizing more general SLAM solutions and notably

in building and using perceptually rich maps as part of a

SLAM algorithm.

This two-part tutorial and survey of SLAM aims to provide

a broad introduction to this rapidly growing field. Part I (this

article) begins by providing a brief history of early develop-

ments in SLAM. The formulation section introduces the struc-

ture the SLAM problem in now standard Bayesian form, and

explains the evolution of the SLAM process. The solution sec-

tion describes the two key computational solutions to the

SLAM problem through the use of the extended Kalman filter

(EKF-SLAM) and through the use of Rao-Blackwellized par-

ticle filters (FastSLAM). Other recent solutions to the SLAM

problem are discussed in Part II of this tutorial. The application

section describes a number of important real-world implemen-

tations of SLAM and also highlights implementations where

the sensor data and software are freely down-loadable for other

researchers to study. Part II of this tutorial describes major

issues in computation, convergence, and data association in

SLAM. These are subjects that have been the main focus of

the SLAM research community over the past five years.

History of the SLAM Problem

The genesis of the probabilistic SLAM problem occurred at

the 1986 IEEE Robotics and Automation Conference held in

San Francisco, California. This was a time when probabilistic

methods were only just beginning to be introduced into both

robotics and artificial intelligence (AI). A number of

researchers had been looking at applying estimation-theoretic

methods to mapping and localization problems; these includ-

ed Peter Cheeseman, Jim Crowley, and Hugh Durrant-

Whyte. Over the course of the conference, many paper table

cloths and napkins were filled with long discussions about

consistent mapping. Along the way, Raja Chatila, Oliver

Faugeras, Randal Smith, and others also made useful contri-

butions to the conversation.

The result of this conversation was a recognition that

consistent probabilistic mapping was a fundamental problem

in robotics with major conceptual and computational issues

that needed to be addressed. Over the next few years a

number of key papers were produced. Work by Smith and

Cheesman [39] and Durrant-Whyte [17] established a statis-

tical basis for describing relationships between landmarks and

manipulating geometric uncertainty. A key element of this

work was to show that there must be a high degree of cor-

relation between estimates of the location of different land-

marks in a map and that, indeed, these correlations would

grow with successive observations.

At the same time Ayache and Faugeras [1] were under-

taking early work in visual navigation, Crowley [9] and

Chatila and Laumond [6] were working in sonar-based navi-

gation of mobile robots using Kalman filter-type algorithms.

These two strands of research had much in common and

resulted soon after in the landmark paper by Smith et al.

[40]. This paper showed that as a mobile robot moves

through an unknown environment taking relative observa-

tions of landmarks, the estimates of these landmarks are all

necessarily correlated with each other because of the com-

mon error in estimated vehicle location [27]. The implica-

tion of this was profound: A consistent full solution to the

combined localization and mapping problem would require

a joint state composed of the vehicle pose and every land-

mark position, to be updated following each landmark

observation. In turn, this would require the estimator to

employ a huge state vector (on the order of the number of

landmarks maintained in the map) with computation scaling

as the square of the number of landmarks.

Crucially, this work did not look at the convergence

properties of the map or its steady-state behavior. Indeed, it

1070-9932/06/$20.00©2006 IEEE

IEEE Robotics & Automation Magazine JUNE 2006

100

was widely assumed at the time that the estimated map

errors would not converge and would instead exhibit a ran-

dom-walk behavior with unbounded error growth. Thus,

given the computational complexity of the mapping prob-

lem and without knowledge of the convergence behavior of

the map, researchers instead focused on a series of approxi-

mations to the consistent mapping problem, which assumed

or even forced the correlations between landmarks to be

minimized or eliminated, so reducing the full filter to a

series of decoupled landmark to vehicle filters ([28] and [38]

for example). Also for these reasons, theoretical work on the

combined localization and mapping problem came to a tem-

porary halt, with work often focused on either mapping or

localization as separate problems.

The conceptual breakthrough came with the realization

that the combined mapping and localization problem, once

formulated as a single estimation problem, was actually con-

vergent. Most importantly, it was recognized that the corre-

lations between landmarks, which most researchers had tried

to minimize, were actually the critical part of the problem

and that, on the contrary, the more these correlations grew,

the better the solution. The structure of the SLAM problem,

the convergence result and the coining of the acronym

SLAM was first presented in a mobile robotics survey paper

presented at the 1995 International Symposium on Robotics

Research [16]. The essential theory on convergence and

many of the initial results were developed by Csorba [10],

[11]. Several groups already working on mapping and local-

ization, notably at the Massachusetts Institute of Technology

[29], Zaragoza [4], [5], the ACFR at Sydney [20], [45], and

others [7], [13], began working in earnest on SLAM—also

called concurrent mapping and localization (CML) at this time—

in indoor, outdoor, and subsea environments.

At this time, work focused on improving computational

efficiency and addressing issues in data association, or loop

closure. The 1999 International Symposium on Robotics

Research (ISRR’99) [23] was an important meeting point

where the first SLAM session was held and where a degree

of convergence between the Kalman-filter-based SLAM

methods and the probabilistic localisation and mapping

methods introduced by Thrun [42] was achieved. The

2000 IEEE International Conference on Robotics and

Automation (ICRA) Workshop on SLAM attracted 15

researchers and focused on issues such as algorithmic com-

plexity, data association, and implementation challenges.

The following SLAM workshop at the 2002 ICRA attract-

ed 150 researchers with a broad range of interests and

applications. The 2002 SLAM summer school hosted by

Henrik Christiansen at KTH in Stockholm attracted all the

key researchers together with some 50 Ph.D. students from

around the world and was a tremendous success in building

the field. Interest in SLAM has grown exponentially in

recent years, and workshops continue to be held at both

ICRA and IROS. The SLAM summer school ran in 2004

in Toulouse and will run at Oxford,

England, in 2006.

Formulation and Structure

of the SLAM Problem

SLAM is a process by which a mobile

robot can build a map of an environ-

ment and at the same time use this map

to deduce its location. In SLAM, both

the trajectory of the platform and the

location of all landmarks are estimated

online without the need for any a priori

knowledge of location.

Preliminaries

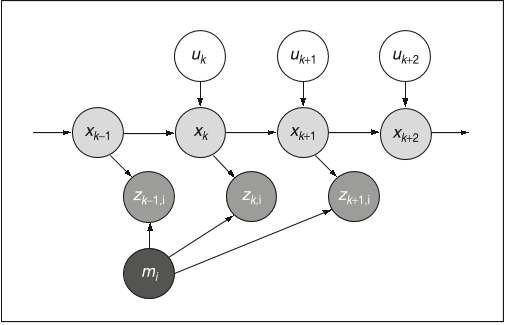

Consider a mobile robot moving through

an environment taking relative observa-

tions of a number of unknown landmarks

using a sensor located on the robot as

shown in Figure 1. At a time instant

k

,

the following quantities are defined:

◆

x

k

: the state vector describing the

location and orientation of the

vehicle

◆

u

k

: the control vector, applied at

time

k − 1

to drive the vehicle to

a state

x

k

at time

k

Figure 1. The essential SLAM problem. A simultaneous estimate of both robot and

landmark locations is required. The true locations are never known or measured

directly. Observations are made between true robot and landmark locations.

x

k

+2

m

j

x

k

x

k

−1

x

k

+1

m

i

z

k

−1,i

z

k,j

u

k

u

k

+1

u

k

+2

Robot

Landmark

Estimated

True

JUNE 2006 IEEE Robotics & Automation Magazine

101

◆

m

i

: a vector describing the location of the ith landmark

whose true location is assumed time invariant

◆

z

ik

: an observation taken from the vehicle of the

location of the ith landmark at time

k

. When there

are multiple landmark observations at any one time or

when the specific landmark is not relevant to the dis-

cussion, the observation will be written simply as

z

k

.

In addition, the following sets are also defined:

◆

X

0:k

={x

0

, x

1

, ··· , x

k

}={X

0:k−1

, x

k

}

: the history of

vehicle locations

◆

U

0:k

={u

1

, u

2

, ··· , u

k

}={U

0:k−1

, u

k

}

: the history of

control inputs

◆

m ={m

1

, m

2

, ··· , m

n

}

the set of all landmarks

◆

Z

0:k

={z

1

, z

2

, ··· , z

k

}={Z

0:k−1

, z

k

}

: the set of all

landmark observations.

Probabilistic SLAM

In probabilistic form, the simultaneous localization and map

building (SLAM) problem requires that the probability dis-

tribution

P(x

k

, m|Z

0:k

, U

0:k

, x

0

)(1)

be computed for all times

k

. This probability distribution

describes the joint posterior density of the landmark locations

and vehicle state (at time

k

) given the recorded observations

and control inputs up to and including time

k

together with

the initial state of the vehicle. In general, a recursive solution

to the SLAM problem is desirable. Starting with an estimate

for the distribution

P(x

k−1

, m|Z

0:k−1

, U

0:k−1

)

at time

k − 1

, the joint posterior, following a control

u

k

and obser-

vation

z

k

, is computed using Bayes theorem. This computa-

tion requires that a state transition model and an observation

model are defined describing the effect of the control input

and observation respectively.

The observation model describes the probability of making an

observation

z

k

when the vehicle location and landmark loca-

tions are known and is generally described in the form

P(z

k

|x

k

, m). (2)

It is reasonable to assume that once the vehicle location and

map are defined, observations are conditionally independent

given the map and the current vehicle state.

The motion model for the vehicle can be described in

terms of a probability distribution on state transitions in

the form

P(x

k

|x

k−1

, u

k

). (3)

That is, the state transition is assumed to be a Markov process

in which the next state

x

k

depends only on the immediately

preceding state

x

k−1

and the applied control

u

k

and is inde-

pendent of both the observations and the map.

The SLAM algorithm is now implemented in a standard

two-step recursive (sequential) prediction (time-update) cor-

rection (measurement-update) form:

Time-update

P(x

k

, m|Z

0:k−1

, U

0:k

, x

0

) =

P(x

k

|x

k−1

, u

k

)

× P(x

k−1

, m|Z

0:k−1

, U

0:k−1

, x

0

)dx

k−1

(4)

Measurement Update

P(x

k

, m|Z

0:k

, U

0:k

, x

0

)

=

P(z

k

|x

k

, m)P(x

k

, m|Z

0:k−1

, U

0:k

, x

0

)

P(z

k

|Z

0:k−1

, U

0:k

)

(5)

Equations (4) and (5) provide a recursive procedure for calcu-

lating the joint posterior

P(x

k

, m|Z

0:k

, U

0:k

, x

0

)

for the

robot state

x

k

and map

m

at a time

k

based on all observa-

tions

Z

0:k

and all control inputs

U

0:k

up to and including time

k

. The recursion is a function of a vehicle model

P(x

k

|x

k−1

, u

k

)

and an observation model

P(z

k

|x

k

, m)

.

It is worth noting that the map building problem may be

formulated as computing the conditional density

P(m|X

0:k

, Z

0:k

, U

0:k

)

. This assumes that the location of the

vehicle

x

k

is known (or at least deterministic) at all times, sub-

ject to knowledge of initial location. A map

m

is then con-

structed by fusing observations from different locations.

Conversely, the localization problem may be formulated as

computing the probability distribution

P(x

k

|Z

0:k

, U

0:k

, m)

.

This assumes that the landmark locations are known with cer-

tainty, and the objective is to compute an estimate of vehicle

location with respect to these landmarks.

Structure of Probabilistic SLAM

To simplify the discussion in this section, we will drop the

conditioning on historical variables in (1) and write the

required joint posterior on map and vehicle location as

P(x

k

, m|z

k

)

and even

P(x

k

, m)

as the context permits.

The observation model

P(z

k

|x

k

, m)

makes explicit the

dependence of observations on both the vehicle and landmark

locations. It follows that the joint posterior cannot be parti-

tioned in the obvious manner

P(x

k

, m|z

k

) = P(x

k

|z

k

)P(m|z

k

),

and indeed it is well known from the early papers on

consistent mapping [17], [39] that a partition such as this

leads to inconsistent estimates. However, the SLAM

problem has more structure than is immediately obvious

from these equations.

Referring again to Figure 1, it can be seen that much of

the error between estimated and true landmark locations is

common between landmarks and is in fact due to a single

source; errors in knowledge of where the robot is when land-

mark observations are made. In turn, this implies that the

errors in landmark location estimates are highly correlated.

Practically, this means that the relative location between any

two landmarks,

m

i

− m

j

, may be known with high accuracy,

even when the absolute location of a landmark

m

i

is quite

uncertain. In probabilistic form, this means that the joint

probability density for the pair of landmarks

P(m

i

, m

j

)

is

highly peaked even when the marginal densities

P(m

i

)

may

be quite dispersed.

The most important insight in SLAM was to realize that

the correlations between landmark estimates increase monoton-

ically as more and more observations are made. (These results

have only been proved for the linear Gaussian case [14]. For-

mal proof for the more general probabilistic case remains an

open problem.) Practically, this means that knowledge of the

relative location of landmarks always improves and never

diverges, regardless of robot motion. In probabilistic terms,

this means that the joint probability density on all landmarks

P(m)

becomes monotonically more peaked as more observa-

tions are made.

This convergence occurs because the observations made by

the robot can be considered as “nearly independent” mea-

surements of the relative location between landmarks. Refer-

ring again to Figure 1, consider the robot at location

x

k

observing the two landmarks

m

i

and

m

j

. The relative loca-

tion of observed landmarks is clearly independent of the coor-

dinate frame of the vehicle, and successive observations from

this fixed location would yield further independent measure-

ments of the relative relationship between landmarks. Now, as

the robot moves to location

x

k+1

, it again observes landmark

m

j

this allows the estimated location of the robot and land-

mark to be updated relative to the previous location

x

k

. In

turn, this propagates back to update landmark

m

i

—even

though this landmark is not seen from the new location. This

occurs because the two landmarks are highly correlated (their

relative location is well known) from previous measurements.

Further, the fact that the same measurement data is used to

update these two landmarks makes them more correlated. The

term nearly independent measurement is appropriate because the

observation errors will be correlated through successive vehi-

cle motions. Also note that in Figure 1 at location

x

k+1

, the

robot observes two new landmarks relative to

m

j

. These new

landmarks are thus immediately linked or correlated to the

rest of the map. Later updates to these landmarks will also

update landmark

m

j

and through this landmark

m

i

and so

on. That is, all landmarks end up forming a network linked by

relative location or correlations whose precision or value

increases whenever an observation is made.

This process can be visualized (Figure 2) as a network of

springs connecting all landmarks together or as a rubber sheet

in which all landmarks are embedded. An observation in a

neighborhood acts like a displacement to a spring system or

rubber sheet such that its effect is great in the neighborhood

and, dependent on local stiffness (correlation) properties,

diminishes with distance to other landmarks. As the robot

moves through this environment and takes observations of

the landmarks, the springs become increasingly (and monoto-

nically) stiffer. In the limit, a rigid map of landmarks or an

accurate relative map of the environment is obtained. As the

map is built, the location accuracy of the robot measured rel-

ative to the map is bounded only by the quality of the map

and relative measurement sensor. In the theoretical limit,

robot relative location accuracy becomes equal to the local-

ization accuracy achievable with a given map.

Solutions to the SLAM Problem

Solutions to the probabilistic SLAM problem involve find-

ing an appropriate representation for both the observation

model (2) and motion model (3) that allows efficient and

consistent computation of the prior and posterior distribu-

tions in (4) and (5). By far, the most common representa-

tion is in the form of a state-space model with additive

Gaussian noise, leading to the use of the extended Kalman

filter (EKF) to solve the SLAM problem. One important

alternative representation is to describe the vehicle motion

model in (3) as a set of samples of a more general non-

Gaussian probability distribution. This leads to the use of

the Rao-Blackwellized particle filter, or FastSLAM algo-

rithm, to solve the SLAM problem. While EKF-SLAM and

FastSLAM are the two most important solution methods,

newer alternatives, which offer much potential, have been

proposed, including the use of the information-state form

[43]. These are discussed further in Part II of this tutorial.

Figure 2. Spring network analogy. The landmarks are connected

by springs describing correlations between landmarks. As the

vehicle moves back and forth through the environment, spring

stiffness or correlations increase (red links become thicker). As

landmarks are observed and estimated locations are corrected,

these changes are propagated through the spring network.

Note, the robot itself is correlated to the map.

Estimated Robot

Estimated Landmark

Correlations

IEEE Robotics & Automation Magazine JUNE 2006

102

JUNE 2006 IEEE Robotics & Automation Magazine

103

EKF-SLAM

The basis for the EKF-SLAM method is to describe the vehi-

cle motion in the form

P(x

k

|x

k−1

, u

k

) ⇐⇒ x

k

= f(x

k−1

, u

k

) + w

k

,(6)

where

f(·)

models vehicle kinematics and where

w

k

are addi-

tive, zero mean uncorrelated Gaussian motion disturbances

with covariance

Q

k

. The observation model is described in

the form

P(z

k

|x

k

, m) ⇐⇒ z

k

= h(x

k

, m) + v

k

,(7)

where

h(·)

describes the geometry of the observation and

where

v

k

are additive, zero mean uncorrelated Gaussian

observation errors with covariance

R

k

.

With these definitions, the standard EKF method [14], [31]

can be applied to compute the mean

ˆx

k|k

ˆm

k

= E

x

k

m

|Z

0:k

,

and covariance

P

k|k

=

P

xx

P

xm

P

T

xm

P

mm

k|k

= E

x

k

−ˆx

k

m −ˆm

k

x

k

−ˆx

k

m −ˆm

k

T

| Z

0:k

of the joint posterior distribution

P(x

k

, m|Z

0:k

, U

0:k

, x

0

)

from:

Time-update

ˆx

k|k−1

= f( ˆx

k−1|k−1

, u

k

)(8)

P

xx,k|k−1

=∇fP

xx,k−1|k−1

∇f

T

+ Q

k

,(9)

where

∇f

is the Jacobian of

f

evaluated at the estimate

ˆx

k−1|k−1

. There is generally no need to perform a time-

update for stationary landmarks. (A time-update, however, is

necessary for landmarks that may move [44].)

Observation-update

ˆx

k|k

ˆm

k

=

[

ˆx

k|k−1

ˆm

k−1

]

+ W

k

[z

k

− h(ˆx

k|k−1

, ˆm

k−1

)]

(10)

P

k|k

= P

k|k−1

− W

k

S

k

W

T

k

,(11)

where

S

k

=∇hP

k|k−1

∇h

T

+ R

k

W

k

= P

k|k−1

∇h

T

S

−1

k

and where

∇h

is the Jacobian of

h

evaluated at

ˆx

k|k−1

and

ˆm

k−1

.

This EKF-SLAM solution is very well known and inherits

many of the same benefits and problems as the standard EKF

solutions to navigation or tracking problems. Four of the key

issues are briefly discussed next.

Convergence

In the EKF-SLAM problem, convergence of the map is

manifest in the monotonic convergence of the determinant

of the map covariance matrix

P

mm,k

and all landmark pair

submatrices, toward zero. The individual landmark variances

converge toward a lower bound determined by initial uncer-

tainties in robot position and observations. The typical con-

vergence behavior of landmark location variances is shown in

Figure 3 (from [14]).

Computational Effort

The observation update step requires that all landmarks and

the joint covariance matrix be updated every time an observa-

tion is made. Naively, this means computation grows quadrat-

ically with the number of landmarks. There has been a great

deal of work undertaken in developing efficient variants of

the EKF-SLAM solution and real-time implementations with

many thousands of landmarks have been demonstrated [21],

[29]. Efficient variants of the EKF-SLAM algorithm are dis-

cussed in Part II of this tutorial.

Figure 3. The convergence in landmark uncertainty. The

plot shows a time history of standard deviations of a set of

landmark locations. A landmark is initially observed with

uncertainty inherited from the robot location and observation.

Over time, the standard deviations reduce monotonically to a

lower bound. New landmarks are acquired during motion

(from [14]).

40 50 60 70 80 90 100 110

0

0.5

1

1.5

2

2.5

Time (s)

Standard Deviation in

X

(

m

)

![Figure 6. A real-time SLAM visualization by Newman et al. [37].](/figures/figure-6-a-real-time-slam-visualization-by-newman-et-al-37-311kteel.png)

![Figure 3. The convergence in landmark uncertainty. The plot shows a time history of standard deviations of a set of landmark locations. A landmark is initially observed with uncertainty inherited from the robot location and observation. Over time, the standard deviations reduce monotonically to a lower bound. New landmarks are acquired during motion (from [14]).](/figures/figure-3-the-convergence-in-landmark-uncertainty-the-plot-2849h61l.png)