1178 IEEE TRANSACTIONS ON NEURAL NETWORKS, VOL. 13, NO. 5, SEPTEMBER 2002

A CMOS Feedforward Neural-Network Chip With On-Chip Parallel Learning for Oscillation Cancellation

Jin Liu, Member, IEEE, Martin A. Brooke, Member, IEEE, and Kenichi Hirotsu, Member, IEEE

Abstract—This paper presents a mixed signal CMOS feedfor-

ward neural-network chip with on-chip error-reduction hardware

for real-time adaptation. The chip has compact on-chip weights capable of high-speed parallel learning; the implemented learning algorithm is a genetic random search algorithm: the random weight change (RWC) algorithm. The algorithm does not require a known

desired neural-network output for error calculation and is suit-

able for direct feedback control. With hardware experiments, we

demonstrate that the RWC chip, as a direct feedback controller,

successfully suppresses unstable oscillations modeling combustion

engine instability in real time.

Index Terms—Analog finite impulse response (FIR) filter, direct

feedback control, neural-network chip, parallel on-chip learning,

oscillation cancellation.

I. INTRODUCTION

Originally, most neural networks were implemented in software running on computers. However, as neural

networks gain wider acceptance in a greater variety of applica-

tions, it appears that many practical applications require high

computational power to deal with the complexity or real-time

constraints. Software simulations on serial computers cannot

provide the computational power required, since they transform

the parallel neural-network operations into serial operations.

When the networks become larger, the software simulation

time increases accordingly. With multiprocessor computers,

the number of processors typically available does not compare

with the full parallelism of hundreds, thousands, or millions

of neurons in most neural networks. In addition, software simulations are run on computers, which are usually expensive and not always affordable.

As a solution to the above problems, dedicated hardware is

purposely designed and manufactured to offer a higher level of

parallelism and speed. Parallel operations can potentially provide high computational power at a limited cost and thus can potentially solve a complex problem in a short time period, compared

Manuscript received October 25, 2000; revised July 20, 2001 and January 24,

2002. This work was supported by the Multidisciplinary University Research

Initiative (MURI) on Intelligent Turbine Engines (MITE) Project, DOD-Army

Research Office, under Grant DAAH04-96-1-0008.

J. Liu is with the Department of Electrical Engineering, the University of

Texas at Dallas, Richardson, TX 75080 USA.

M. A. Brooke is with the Department of Electrical and Computer Engi-

neering, Georgia Institute of Technology, Atlanta, GA 30332 USA.

K. Hirotsu is with the Sumitomo Electric Industries, Ltd., Osaka 541-0041,

Japan.

Publisher Item Identifier S 1045-9227(02)05565-0.

with serial operations. However, reported implementations of

neural networks do not always exploit the parallelism.

A common principle for all hardware implementations is their

simplicity. Mathematical operations that are easy to implement

in software might often be very burdensome in the hardware

and therefore more costly. Hardware-friendly algorithms are es-

sential to ensure the functionality and cost effectiveness of the

hardware implementation. In this research, a hardware-friendly

algorithm, called the random-weight-change (RWC) algorithm [1], is implemented in a CMOS process. The RWC algorithm is a

fully parallel rule that is insensitive to circuit nonidealities. In

addition, the error can be specified such that minimizing the

error leads the system to reach its desired performance and it

is not necessary to calculate the error by comparing the ac-

tual output of the neural network with the desired output of the

neural network. This enables the RWC chip to operate as a di-

rect feedback controller for real-time control applications.

In the last decade, research has demonstrated that on-chip

learning is possible on small problems, like XOR problems. In this paper, a fully parallel learning neural-network chip is experimentally tested to operate as an output direct feedback controller suppressing oscillations modeling combustion instability, which is a dynamic nonlinear real-time system.

II. ISSUES ON THE DESIGN OF LEARNING NEURAL-NETWORK HARDWARE

Neural networks can be implemented with software, digital

hardware, or analog hardware [2]. Depending on the applica-

tion nature, cost requirements, and chip size limitations due to

manufacturability, each of the implementation techniques has its

advantages and disadvantages. The implementations of on-chip

learning neural-network hardware differ in three main aspects:

the learning algorithm, the synapse or weight circuits, and the

activation function circuits.

A. Learning Algorithm

The learning algorithms are associated with the specific

neural-network architectures. This work focuses on the widely

used layered feedforward neural-network architecture. Among

the different algorithms associated with this architecture,

the following algorithms have been implemented in CMOS

integrated circuits: the backpropagation (BP) algorithm, the

chain perturbation rule, and the random weight change rule.

The BP algorithm requires precise implementation of the

computing units, like adders, multipliers, etc. It is very sen-

1045-9227/02$17.00 © 2002 IEEE

LIU et al.: A CMOS FEEDFORWARD NEURAL-NETWORK CHIP 1179

sitive to analog circuit nonidealities, thus, it is not suitable

for compact mixed signal implementation. Learning rules like

serial-weight-perturbation [3] (or Madaline Rule III) and the

chain perturbation rule [4] are very tolerant of the analog circuit

nonidealities, but they are either serial or partially parallel

computation algorithms, thus are often too slow for real-time

control. In this research, we use the RWC algorithm [1], which

is a fully parallel rule that is insensitive to circuit nonidealities

and can be used in direct feedback control. The RWC algorithm

is defined as follows.

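For the system weights $w_{ij}$,

$$w_{ij}(n+1) = w_{ij}(n) + \Delta w_{ij}(n+1).$$

If the error is decreased,

$$\Delta w_{ij}(n+1) = \Delta w_{ij}(n).$$

If the error is increased,

$$\Delta w_{ij}(n+1) = \delta \cdot \mathrm{Rand}(n),$$

where $\mathrm{Rand}(n)$ is either $+1$ or $-1$ with equal probability, $\delta$ is a small quantity that sets the learning rate, and $w_{ij}(n)$ and $\Delta w_{ij}(n)$ are the weight and weight change of the $ij$th synapse at the $n$th iteration. All the weights adapt at the same time in each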

weight adaptation cycle.
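The update rule can be illustrated in software. The following is a minimal sketch, not the chip's implementation: the function names, the toy error surface, the step size, and the iteration count are all assumptions chosen for illustration. It keeps the current weight change while the error decreases and draws a new random sign for every weight when the error does not decrease.

```python
import random


def rwc_minimize(error_fn, weights, delta=0.01, iterations=2000, seed=0):
    """Random weight change: every weight moves at the same time each iteration."""
    rng = random.Random(seed)
    w = list(weights)
    # Initial random direction: each weight change is +delta or -delta.
    dw = [delta * rng.choice((-1.0, 1.0)) for _ in w]
    prev_error = error_fn(w)
    for _ in range(iterations):
        # Apply the current change to all weights in parallel.
        w = [wi + dwi for wi, dwi in zip(w, dw)]
        error = error_fn(w)
        if error >= prev_error:
            # Error did not decrease: keep the change already made,
            # but draw a new random direction for the next iteration.
            dw = [delta * rng.choice((-1.0, 1.0)) for _ in w]
        # Otherwise the same dw is reused on the next iteration.
        prev_error = error
    return w, prev_error


def toy_error(w):
    # Hypothetical error surface with its minimum at (0.5, -0.3).
    return (w[0] - 0.5) ** 2 + (w[1] + 0.3) ** 2


final_w, final_err = rwc_minimize(toy_error, [0.0, 0.0])
```

Note that the error function only needs to return a scalar to be minimized; no desired network output is used anywhere in the loop, which is the property that makes the rule usable for direct feedback control.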

Previously, it has been shown with simulations that a modified RWC algorithm can identify and control an induction motor

[5]. Further simulation-based research has shown that the RWC

algorithm is immune to analog circuit nonidealities [6]. An ex-

ample of analog circuit nonidealities is the nonlinearity and

offset in the multiplier, as will be shown in the following sec-

tion. Replacing the ideal multiplier with the nonlinear multiplier

constructed from the measurement result of an integrated circuit implementation of the multiplier, we repeated the simulations on identifying and controlling an induction motor. The results of both conditions are almost identical, with minor differences in the initial learning process [6].

B. Synapse Circuits

Categorized by storage types, there are five kinds of synapse

circuits: capacitor only [1], [7]–[11], capacitor with refreshment

[12]–[14], capacitor with EEPROM [4], digital [15], [16], and

mixed D/A [17] circuits.

Capacitor weights are compact and easy to program, but

they have leakage problems. Leakage current causes the weight

charge stored in the capacitor to decay. Usually, the capacitors

have to be designed large enough (around 20 pF for room

temperature decay in seconds) to prevent unwanted weight

value decay. Capacitor weights with refreshment can solve the leakage problem, but they need off-chip memory. In addition,

the added A/D and D/A converters either make the chip large or

result in slow serial operation. EEPROM weights are compact

nonvolatile memories (permanent storage), but they are process

sensitive and hard to program. Digital weights are usually large,

requiring around 16-bit precision to implement BP learning.

The mixed D/A weight storage is a balanced solution when

permanent storage is necessary.

For this research, the chip is to operate in conditions where

the system changes continuously and so weight leakage prob-

lems are mitigated by continuous weight updates. Thus, the chip

described here uses capacitors as weight storage. The weight retention time is experimentally found to be around 2 s for losing 1% of the weight value at room temperature.

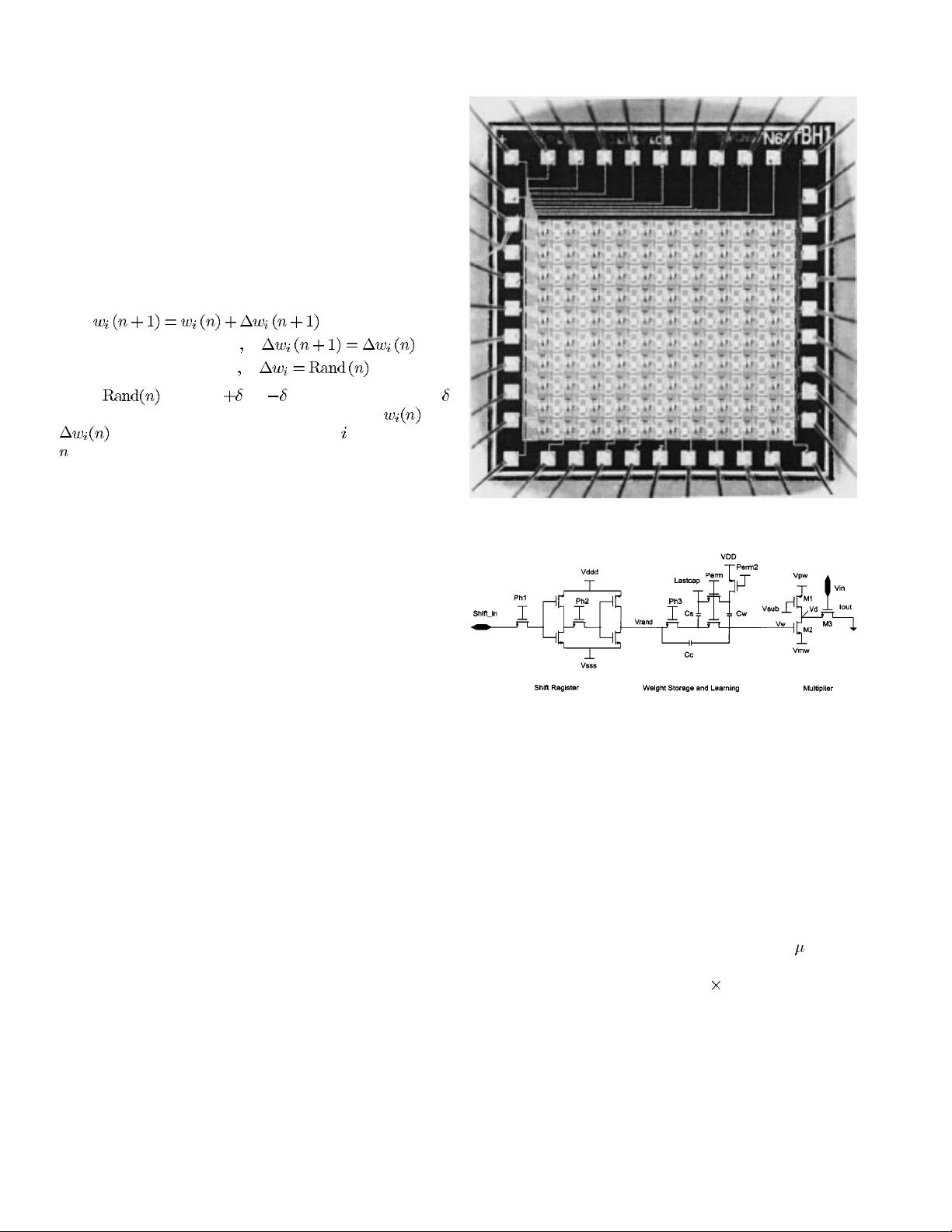

Fig. 1. Chip photo.

Fig. 2. Schematic of a weight cell.

C. Activation Function Circuits

Research [11], [18] shows that the nonlinearity used in

neural-network activation functions can be replaced by multiplier nonlinearity. In this work, since the weight multiplication circuit has nonlinearity, we use a linear current-to-voltage converter with saturation to implement the activation function.

III. CIRCUIT DESIGN

A. Chip Architecture

The chip was fabricated through MOSIS in the Orbit 2-µm n-well process. Fig. 1 shows a photomicrograph of the chip, which measures 2 mm on a side. It contains 100 weights in a 10 × 10 array and has ten

inputs and ten outputs. The input pads are located at the right

side of the chip, and the output pads are located at the bottom

side of the chip. The pads at the top and left sides of the chip are

used for voltage supplies and control signals. This arrangement

makes it possible for the chip to be cascaded into multilayer

networks.

The schematic of one weight cell is shown in Fig. 2. The left

part is a digital shift register for shifting in random numbers.

The right part is a simple multiplier. The circuits in the middle

are the weight storage and weight modification circuits.


Fig. 3. HSPICE simulation result on the adjustment of a weight value.

The shift registers of all the cells are connected as a chain; therefore, only one random bit needs to be fed into the chip at a time. At a given time, each cell sees a random number at the output of the shift register, being either “1” or “0.” If it is “1,” the shift voltage applied to the cell is equal to the high reference voltage; if it is a “0,” the shift voltage is equal to the low reference voltage.

B. Weight Storage and Adaptation Circuits

The weight charge is stored in the larger capacitor $C_1$, with the voltage across it, $V_1$, representing the weight value. Switching clock Ph3 on, while clock perm is off, loads the smaller capacitor $C_2$ with a small amount of charge. Then, connecting $C_1$ in parallel with the smaller capacitor $C_2$ changes the weight value. Suppose that the voltage across $C_1$ is $V_1$ and the voltage across $C_2$ is $V_2$ before connecting them in parallel. After connecting them in parallel for charge sharing, the final voltages across them are the same, say $V_1'$. The total charge carried over the two capacitors does not change, $C_1 V_1 + C_2 V_2 = (C_1 + C_2) V_1'$, thus the new voltage across $C_1$ will be

$$V_1' = \frac{C_1 V_1 + C_2 V_2}{C_1 + C_2}.$$

In this implementation, $C_1$ is 100 times $C_2$, thus $V_1' = (100 V_1 + V_2)/101 \approx 0.99 V_1 + 0.01 V_2$. So, every time, the weight value changes approximately by 1% of the voltage across $C_2$. However, the change is nonlinear, due to the weight decay term, $0.99 V_1$, in the above equation. The bottom plate of $C_2$ will be charged to the shift voltage, which will be either the high or the low reference voltage. The top plate of $C_2$ is connected to a bias voltage Lastcap. The values of the high reference voltage, the low reference voltage, and Lastcap together control the step size of the weight change. An external bias sets the range of the actual weight value, which is the sum of this bias value and the voltage stored across $C_1$. Clock perm2 has a complementary phase of clock perm.
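The charge-sharing step can be sketched numerically. The snippet below is illustrative only: the function names are hypothetical, and it assumes an ideal circuit in which the smaller capacitor is reloaded to the same voltage before every sharing step and the larger capacitor is 100 times the smaller one. It shows that the step shrinks as the weight voltage approaches the loading voltage, which is the nonlinearity caused by the decay term.

```python
def share_charge(v_weight, v_small, ratio=100.0):
    """Voltage after connecting the weight capacitor (ratio * C) to the small capacitor (C)."""
    # Charge conservation: ratio*C*v_weight + C*v_small = (ratio + 1)*C*v_final
    return (ratio * v_weight + v_small) / (ratio + 1.0)


def repeated_updates(v_start, v_small, steps, ratio=100.0):
    """Apply the same charge-sharing step repeatedly and record the weight voltage."""
    trace = [v_start]
    for _ in range(steps):
        trace.append(share_charge(trace[-1], v_small, ratio))
    return trace


# Hypothetical values: weight starts at 0 V, small capacitor reloaded to 2.5 V each cycle.
trace = repeated_updates(v_start=0.0, v_small=2.5, steps=100)
first_step = trace[1] - trace[0]    # largest step, roughly 1% of 2.5 V
last_step = trace[-1] - trace[-2]   # smaller, because the weight has moved toward 2.5 V
```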

An individual weight will have its value either increased or

decreased every time the clock Ph3 is activated. When the data

shifted into the weight cell is a “1” (5 v), the weight is increased;

and when the data shifted in is a “0” (0 v), the weight is de-

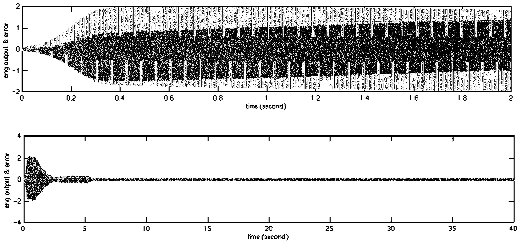

creased. Fig. 3 shows the results of an HSPICE simulation of

the weight changing with time. In the simulation, from the time

0 to 200 ms, a series of “1s” are shifted into the cell. Clock Ph3 is activated to allow the random number to be added to the permanent weight change; clock perm turns on and off to make a permanent change to the weight value. Clocks Ph3 and perm have complementary phases with a period of 2 ms. As a result, the weight keeps incrementing, 100 times during the 200-ms period. From time 200 to 400 ms, a series of “0s” are shifted into the cell, so the weight keeps decrementing. The same process repeats for several cycles in the simulation. The first two cycles are shown in the figure; the rest of the cycles are identical to the second cycle.

Fig. 4. Measured result on the adjustment of a weight value.

The weight increment and decrement rates are determined by the values of the high reference voltage, the low reference voltage, and Lastcap, as mentioned earlier. In this simulation, the high reference voltage is 5 V, the low reference voltage is 0 V, and Lastcap is 2.5 V. When a “0” is shifted in, the shift voltage equals 0 V; when a “1” is shifted in, the shift voltage equals 5 V. However, the voltage at the bottom plate of the smaller capacitor does not always equal the shift voltage exactly, because the NMOS switching gate is controlled by Ph3, which is 5 V. Supposing the threshold voltage of the switching gate is 0.7 V, the voltage at the bottom plate of the smaller capacitor equals 4.3 V when the shift voltage equals 5 V, and equals 0 V when the shift voltage equals 0 V. Thus, the increment step is approximately 0.018 V while the decrement step is 0.025 V, corresponding to about 7-bit resolution.
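The quoted step sizes can be checked with a few lines of arithmetic. The sketch below assumes that each step is about 1% of the voltage across the smaller capacitor, taken here as Lastcap minus the bottom-plate voltage, and it ignores the decay term; the function and constant names are illustrative, not taken from the chip.

```python
def step_magnitude(lastcap, bottom_plate, ratio=100.0):
    """Approximate weight step: 1/ratio of the voltage across the small capacitor."""
    return abs(lastcap - bottom_plate) / ratio


V_HIGH, V_LOW, LASTCAP = 5.0, 0.0, 2.5   # values quoted for the simulation
V_GATE, V_THRESHOLD = 5.0, 0.7           # Ph3 level and assumed NMOS threshold

# An NMOS pass gate driven at V_GATE passes at most V_GATE minus its threshold.
bottom_for_one = min(V_HIGH, V_GATE - V_THRESHOLD)   # 4.3 V when a "1" is shifted in
bottom_for_zero = min(V_LOW, V_GATE - V_THRESHOLD)   # 0 V when a "0" is shifted in

increment = step_magnitude(LASTCAP, bottom_for_one)
decrement = step_magnitude(LASTCAP, bottom_for_zero)
```

Under these assumptions the threshold drop of the pass gate alone accounts for the asymmetry between the up and down steps.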

Fig. 4 shows the measurement result of the weight increment

and decrement, for comparison with the simulated result shown

in Fig. 3. The shift-in data are series of “0s” and “1s.” In this

measurement, the three voltages controlling the weight incre-

ment and decrement step size are adjusted so that the up slope

and the down slope are almost symmetrical.

The following scheme implements the RWC learning. If the

calculated error decreases, clocks Ph1 and Ph2 stop. The same

random number, representing the same weight change direction,

will be used to load the smaller capacitor with charge; thus, the weights change in the same direction. If the error increases, clocks Ph1 and Ph2 are turned on and a new random bit is shifted in, resulting in a random change of the weight values.

C. Multiplier Circuits

Fig. 5. HSPICE simulation result on the multiplication function.

Fig. 6. Measured result on the multiplier output current range.

The operation of the multiplier, whose schematic is shown in Fig. 2, is as follows. The substrate voltage is the most negative voltage among all the biasing voltages. In the simulation and experiments, we use complementary power supplies, i.e., positive and negative supply voltages of equal magnitude. The output of the multiplier is a current flowing into a fixed voltage, which should be in the middle of the positive and negative supply voltages; in this case, it is ground. The weight voltage is applied at the gate of M2. Fig. 5 shows the HSPICE simulation result of the multiplier. The horizontal axis is the input voltage, the vertical axis is the output current, and different curves represent different weight voltage values. The multiplier attempts to produce an output current proportional to the product of the input and the weight. When the weight voltage is about 2 V, the drain voltage of M2 is about 0 V; when the weight voltage is below 2 V, the drain voltage is positive, and when it is above 2 V, the drain voltage is negative. The range of the drain voltage is small to ensure that M3 is operated in the nonsaturation region; thus the output current of M3 is approximately proportional to its drain-source voltage. Depending on the polarity of the drain-source voltage, the output current can flow in both directions and is given by the nonsaturation-region current equation of M3, which involves the threshold voltage of M3. This equation explains why the simulated multiplier has both offset and nonlinearity. The nonlinear relationship is actually desirable as it eliminates the need for a nonlinear stage following the multipliers, as discussed earlier.
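For reference, the textbook first-order (square-law) expression for an n-channel MOSFET in the nonsaturation region is shown below. The symbols $\beta$, $V_{GS}$, $V_{DS}$, and $V_T$ are generic device quantities rather than the labels used in Fig. 2, and mapping them onto M3 is an assumption made here for illustration.

$$I_D = \beta\left[(V_{GS} - V_T)\,V_{DS} - \tfrac{1}{2}V_{DS}^{2}\right], \qquad 0 \le V_{DS} \le V_{GS} - V_T.$$

For small $V_{DS}$ this reduces to $I_D \approx \beta\,(V_{GS} - V_T)\,V_{DS}$, a product of the gate drive and the drain-source voltage. In this form the threshold $V_T$ acts as an offset on the gate-voltage factor, and the quadratic term in $V_{DS}$ is a source of nonlinearity.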

Hardware test results, presented in Fig. 6, show that the mea-

sured multiplier function is close to the HSPICE simulation re-

sult. The two lines are constructed from the measured points

when the weight is programmed to be at its maximum and min-

imum. The horizontal axis is input voltage, with units of V and

the vertical axis is current, with units of

A.

IV. LEARNING PROCESS

In the learning, a permanent change is made every time a new

pattern is shifted in. If the change makes the error decrease,

the weights will keep on changing in the same direction in the

following iterations, until the error is increased. If the change

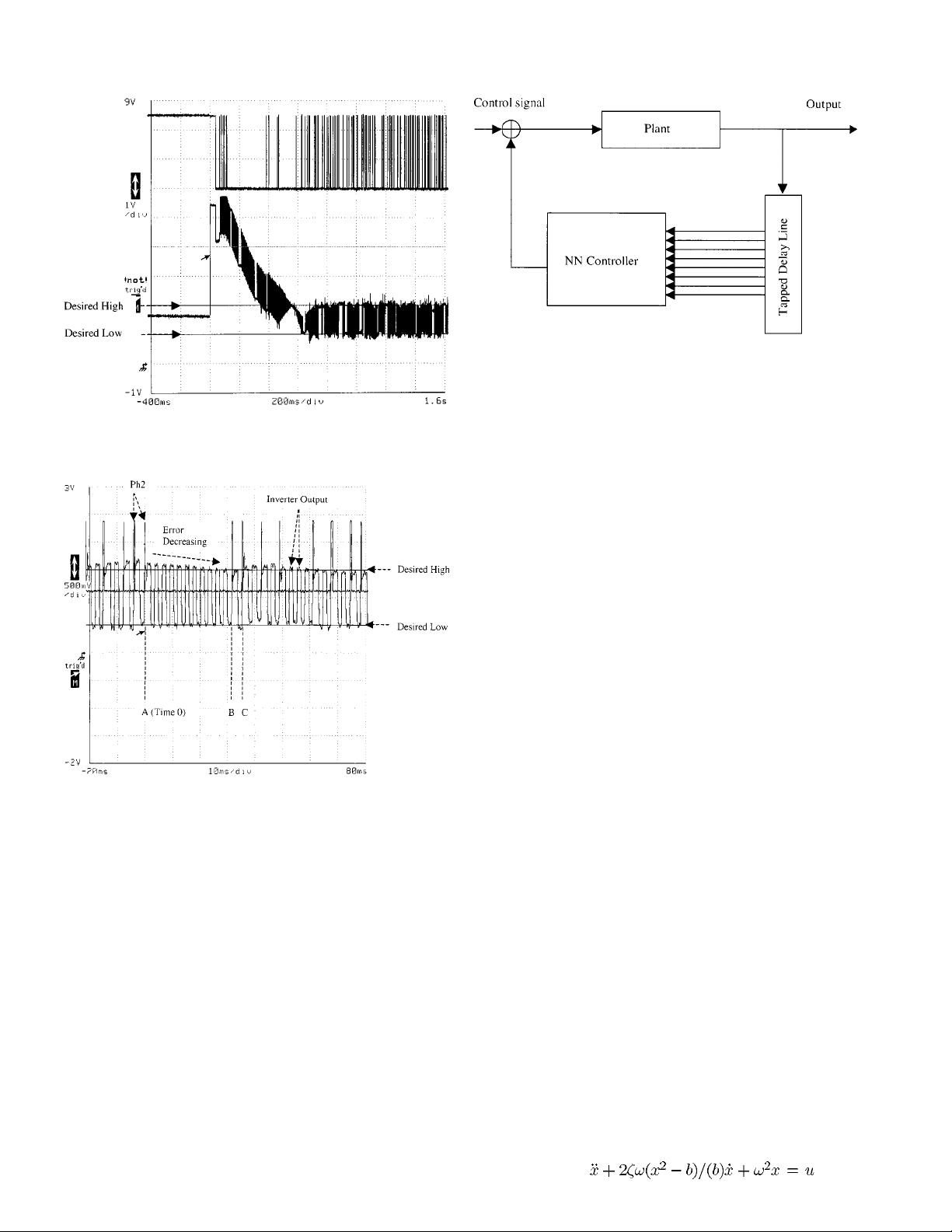

Fig. 7. Test setup for the inverter experiment.

makes the error to increase, the weights keep this change, and

try on a different change for the next iteration.

The test setup shown in Fig. 7 is used to demonstrate the

random-weight-change learning process. The task is to train a

two-input–one-output network to implement an inverter. It is

configured so that one input is always held high as the reference,

while the second one alternates between high and low. The de-

sired output is the inverse of the second input.

In the test, the high and low are set to two voltage values for the network outputs to reach. The network is then trained to minimize an error signal that measures how far the actual high and low output values are from the desired high and low output values. Thus, when the error is small enough, the network implements an inverter. The error is not calculated on the current chip. Rather, it is calculated on a PC in the test setup and is sent to the chip as a 1-bit digital signal. However, the error calculation can be incorporated on the same chip, with additional digital circuits for error calculation.
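The PC-side error computation can be sketched as follows. This is only one plausible form, under stated assumptions: the sum of absolute deviations, the polarity of the 1-bit signal, the function names, and the sample voltages are all hypothetical and are not the formula or values used in the experiment.

```python
def inverter_error(actual_high, actual_low, desired_high, desired_low):
    """Assumed error measure: sum of absolute deviations from the desired levels."""
    return abs(actual_high - desired_high) + abs(actual_low - desired_low)


def error_bit(current_error, previous_error):
    """Assumed 1-bit signal for the chip: 0 if the error decreased, 1 otherwise."""
    return 0 if current_error < previous_error else 1


# Hypothetical output readings on two consecutive iterations, targets of 2 V and 1 V.
previous = inverter_error(1.6, 1.3, desired_high=2.0, desired_low=1.0)
current = inverter_error(1.8, 1.1, desired_high=2.0, desired_low=1.0)
bit = error_bit(current, previous)  # the error went down, so the weight change is kept
```

Only the comparison between consecutive errors reaches the chip, which is why a single digital line is sufficient for the learning loop.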

The desired low and high output voltages, in this experiment,

are 1 and 2 V. So, the desired output should oscillate between 1

and 2 V. Fig. 8 shows a typical initial learning process captured

from the oscilloscope. The figure shows that, within 0.8 s, the

network is trained to behave as an inverter with the specified

high and low output voltages.

After the initial training, the network converges to the inverter

function. Then, the network tries to maintain the performance

as an inverter by continuously adjusting itself. Fig. 9 shows a

100-ms time slice of the continuously adjusting process; the de-

sired high and low output voltages are 1.5 and 0.5 V for this

case. There are two signals in the plot. One of them is the Ph2 clock, which is represented by the spikes shown in the figure. A high of Ph2 means that a new random number is shifted in and indicates that the error starts to increase. The other signal

is the inverter output. It oscillates between high and low, since

the input alternates between low and high. The two horizontal

markers indicate the desired high and low voltages.

Starting from point A (time 0), indicated by the trigger arrow,

clock Ph2 is high; thus, a new random pattern is introduced.

From point A to point B, the output signal oscillates between

high and low, converging to the desired high and low values.


Fig. 8. Oscilloscope screen capture of the initial learning process for the

inverter experiment, with the desired low and high voltages as 1 and 2 V.

Fig. 9. Oscilloscope screen capture of the detailed learning process of the

inverter experiment.

According to the error calculation equation, the error decreases.

During this process, clock Ph2 stops to let the network keep

on using the weight change, which is consistent with the algo-

rithm. The error decreases until point B, when the error starts

to increase. Thus, the network stops using this weight change

direction and tries a new pattern, indicated by a spike of Ph2 at point B. Unfortunately, this pattern causes the error to increase; the network gives up this direction pattern and tries a new one,

indicated by another spike at point C. As this process goes on,

the network dynamically maintains its performance as an in-

verter by continuously adjusting its weights.

The above experimental results show that the recorded

hardware learning process complies with the random weight

change algorithm and the weights of the neural-network chip can

be trained in real time for the neural network to implement

simple functions. Next, we apply the chip to a more compli-

cated application—direct feedback control for combustion

oscillation cancellation.

Fig. 10. Direct feedback control scheme with a neural-network controller.

V. COMBUSTION INSTABILITY AND DIRECT FEEDBACK CONTROL

The combustion system is a dynamic nonlinear system, with

randomly appearing oscillations of different frequencies and unstable damping factors. When no control is applied, this system

is unstable and eventually reaches a bounded oscillation state.

The goal of the control is to suppress the oscillation. There are

several well-known passive approaches for reducing the insta-

bilities [19], [20]. However, the implementation of such passive

approaches is costly and time consuming, and they often fail

to adequately damp the instability. The effort of developing ac-

tive control systems for damping such instabilities has increased

in recent years. Since the combustion system is a nonlinear

system, the system parameters vary with time and operating

conditions. The active controllers that were developed to suppress the oscillation in fixed modes cannot deal with unpredicted new oscillation modes. In addition, the actuation delay present in the control loop also causes difficulties for the control.

In this research, we use the neural-network chip for direct

feedback control [21] of the oscillation. The RWC chip has

on-chip learning ability; the weights on the chip are adjusted in

parallel, which enables the chip to adapt fast enough for many

real-time control applications. The adaptation time of each

weight update is about 2 ns. Fig. 10 shows the direct feedback

control scheme with the neural-network chip as the controller. The tapped delay line in the control setup is used to sample the plant output (combustion chamber pressure). In general, the delay line should cover one period of the lowest-frequency component of the plant output. At the same time, the sampling rate of the tapped delay line should also be faster than the Nyquist sampling rate of the highest frequency component of the plant output. The rule is that the neural network should be provided with enough information on the plant dynamics. Software simulation [22], [23], using the

setup in Fig. 10, suggests that it is possible to suppress the

combustion oscillation with the direct feedback control scheme

using the neural-network controller with the RWC algorithm.
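The two sizing rules for the tapped delay line can be turned into a small calculation. The sketch below is illustrative: the function name, the oversampling factor, and the example frequencies are assumptions, not values from the experiments. It picks a sampling rate at or above the Nyquist rate of the highest frequency and then counts the taps needed to span one period of the lowest frequency.

```python
import math


def delay_line_plan(f_min_hz, f_max_hz, oversample=1.0):
    """Return (sampling_rate_hz, number_of_taps) for a tapped delay line."""
    if not 0 < f_min_hz <= f_max_hz:
        raise ValueError("need 0 < f_min_hz <= f_max_hz")
    if oversample < 1.0:
        raise ValueError("oversample must be at least 1.0")
    nyquist_rate = 2.0 * f_max_hz
    sampling_rate = oversample * nyquist_rate
    # Taps needed so the line spans one full period of the slowest component.
    taps = math.ceil(sampling_rate / f_min_hz)
    return sampling_rate, taps


# Hypothetical plant with components between 100 Hz and 400 Hz, sampled 25% above Nyquist.
rate, taps = delay_line_plan(f_min_hz=100.0, f_max_hz=400.0, oversample=1.25)
```

The tap count grows with the ratio of the highest to the lowest frequency, so a wide oscillation band directly increases the number of network inputs required.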

A. Combustion Model With Continuously Changing

Parameters

In this simulation, the combustion process is modeled by the

limit cycle model:

, where