Minimization of Memory Traffic in High-Level Synthesis

David J. Kolson Alexandru Nicolau Nikil Dutt

Department of Information and Computer Science

University of California, Irvine

Irvine, CA 92717-3425

Abstract

This paper presents a new transformation for the scheduling of memory-access operations in High-Level Synthesis. This transformation is suited to memory-intensive applications with synthesized designs containing a secondary store accessed by explicit instructions. Such memory-intensive behaviors are commonly observed in video compression, image convolution, hydrodynamics and mechatronics. Our transformation removes load and store instructions which become redundant or unnecessary during the transformation of loops. The advantage of this reduction is the decrease of secondary memory bandwidth demands. This technique is implemented in our Percolation-Based Scheduler, which we used to conduct experiments on core numerical benchmarks. Our results demonstrate a significant reduction in the number of memory operations and an increase in performance on these benchmarks.

1 Introduction

Traditionally, one of the goals in High-Level Synthesis (HLS) is the minimization of storage requirements for synthesized designs [5, 7, 15, 19]. As the focus of HLS shifts towards the synthesis of designs for inherently memory-intensive behaviors [6, 8, 14, 16, 18], memory optimization becomes crucial to obtaining acceptable performance. Examples of such behaviors are abundant in video compression, image convolution, speech recognition, hydrodynamics and mechatronics. The memory-intensive nature of these behaviors necessitates the use of a secondary store (e.g., a memory system), since a sufficiently large primary store (e.g., register storage) would be impractical. This memory is explicitly addressed in a synthesized system by memory operations containing indexing functions. However, due to bottlenecks in the access of memory systems, memory accessing operations must be effectively scheduled so as to improve performance.

This work was supported in part by NSF grant CCR8704367, ONR grant N0001486K0215 and a UCI Faculty Research Grant.

Our strategy for optimizing memory access is to eliminate the redundancy found in memory interaction when scheduling memory operations. Such redundancies can be found within loop iterations, possibly over multiple paths, as well as across loop iterations. During loop pipelining [17, 13] redundancy is exhibited when values loaded from and/or stored to the memory in one iteration are loaded from and/or stored to the memory in future iterations. For example, consider the behavior:

    for i = 1 to N
        for j = 1 to N
            a[i] := a[i] + 1/2 (b[i][j-1] + b[i][j-2])
            b[i][j] := F(b[i][j])
        end
    end

The inner loop would normally require four load and two store instructions per iteration. However, after application of our transformation, the inner loop contains only one load and one store.
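The operation counts quoted above can be checked with a small simulation. The following is a minimal sketch, not the scheduler's implementation: `CountingMemory`, the stand-in function `F`, the single-row layout, and the loop start at `j = 2` (so that `b[j-2]` stays in range) are all assumptions made for illustration.

```python
class CountingMemory:
    """A toy secondary store that counts explicit load/store operations."""

    def __init__(self, data):
        self.data = list(data)
        self.loads = 0
        self.stores = 0

    def load(self, addr):
        self.loads += 1
        return self.data[addr]

    def store(self, addr, value):
        self.stores += 1
        self.data[addr] = value


def F(x):
    # Hypothetical stand-in for the unspecified function F in the behavior.
    return 2 * x + 1


def inner_loop_naive(a, b, n):
    # One row of the behavior: four loads and two stores per iteration.
    for j in range(2, n + 2):
        a.store(0, a.load(0) + 0.5 * (b.load(j - 1) + b.load(j - 2)))
        b.store(j, F(b.load(j)))


def inner_loop_transformed(a, b, n):
    acc = a.load(0)                      # invariant load hoisted before the loop
    prev2, prev1 = b.load(0), b.load(1)  # prologue fills the two registers
    for j in range(2, n + 2):
        acc = acc + 0.5 * (prev1 + prev2)
        new = F(b.load(j))               # the only load left in the loop
        b.store(j, new)                  # the only store left in the loop
        prev2, prev1 = prev1, new        # forward values to later iterations
    a.store(0, acc)                      # invariant store sunk to the loop exit


n = 8
a1, b1 = CountingMemory([1.0]), CountingMemory(range(n + 2))
a2, b2 = CountingMemory([1.0]), CountingMemory(range(n + 2))
inner_loop_naive(a1, b1, n)
inner_loop_transformed(a2, b2, n)
```

Both versions leave the same values in memory; the transformed loop issues `n + 2` loads on `b` (two of them in the prologue) where the naive loop issues `3n`, and it touches `a` once before and once after the loop.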

Previous work on reducing memory accesses balances load operations with computation [3]. However, that algorithm only removes redundant loads and only deals with single-dimensional arrays and a single flow-of-control. In [6] a model for access dependencies is used to optimize memory system organization. In [16] background memory management is discussed, but no details of an algorithm are presented. Therefore, it is clear neither what approach is taken in determining redundancy removal nor how generally applicable the technique is. Related work includes the minimization of registers [8, 14], the minimization of the interconnection between secondary store and functional units [11], and the assignment of arrays to storage [18].

Our transformation has many significant benefits. By eliminating unnecessary memory operations that occur on the critical dependency path through the code, the performance of the resulting schedule can increase dramatically: the length of the critical path can be shortened, thus generating more compact schedules and reducing code size. Also, due to our transformation's local nature, it integrates easily into other parallelizing transformations [4, 13]. Another benefit is the possible savings in hardware due to the decrease in memory bandwidth requirements and/or the exploration of more cost-effective implementations.

2 Program Model

In our model, a program is represented by a control data-flow graph (CDFG) where each node corresponds to operations performed in one time step and the edges between nodes represent flow-of-control. Initially, each node contains only one operation. Parallelizing a program involves the compaction of multiple operations into one node (subject to resource availability).

Memory operations contain an indexing function, composed of a constant base and induction variables (iv's), and either a destination for load operations or a value for store operations.¹ The semantics of a load operation are that issuing a load reserves the destination (local storage) at issuance time (i.e., destination is unavailable during the load's latency). For the purpose of dependency analysis on memory operations, each contains a symbolic expression, which is a string that formulates the indexing function without iv's. (During loop pipelining these expressions must be updated w.r.t. iv's.)

The initial_analysis algorithm in Fig. 1 computes initial program information. Detecting loop invariants and iv's and building iv use-def chains can be done with standard algorithms found in [1], with the results stored in a database. The function build_symbolic_exprs creates symbolic expressions for each memory operation in the program by getting the iv definitions that define the current operation's indexing function and deriving an expression for each. Next, the base of the memory structure is added to each expression. An operation is then annotated with its expression, combining multiple expressions into one of the form "((expr1) or ... or (exprN))."

The function derive_expr constructs the expression "(LoopId * Const)" if iv is self-referencing (e.g., i = i + const), where LoopId is the identifier of the loop over which iv inducts and Const is a constant derived from the constant in the iv operation multiplied by a data size and possibly other variables and constants.² In the introductory example, the data size for the j loop is the array element size and for the i loop is the size of a column (or row) of data. If iv is defined in terms of another iv (e.g., i = j + 1, where j is an iv) then recursive calls are made on all definitions of that other iv. In this case, marking of iv's is necessary to detect cyclic dependencies, which are handled by a technique called variable folding. Essentially, variable folding determines an initial value of a variable on input to the loop or resulting from the first iteration (i.e., values which are loop-carried are not considered) from the reverse flow of the graph. The result can be a constant or another variable (which is recursively folded until the beginning of the loop is reached).

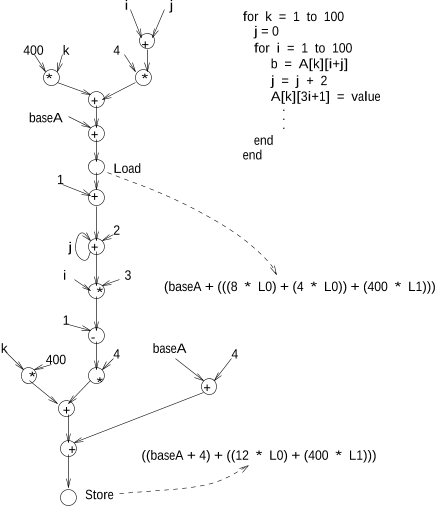

¹ We use the term argument to refer to destination if the operation is a load or to value if the operation is a store.

² For clarity we present a simplified algorithm. More complex analysis (based on [12]) has been implemented in our scheduler.

    Procedure initial_analysis(program)
    begin
        /* Detect loop invariants. */
        /* Detect induction variables in program. */
        /* Build iv use-def chains in program. */
        build_symbolic_exprs(program)
    end initial_analysis

    Procedure build_symbolic_exprs(program)
    begin
        foreach mem_op in program
            /* Set iv_defs to the possible iv defs found in DB. */
            foreach iv_group in iv_defs
                new_expr = derive_expr(iv_group)
                /* Add Base of mem_op to new_expr. */
                /* Annotate mem_op with new_expr. */
            end
        end
    end build_symbolic_exprs

    function derive_expr(iv_group)
    begin
        foreach iv in iv_group
            if (/* iv is marked */) then
                /* Do variable folding. */
            else if (/* iv is self-referencing */)
                /* Return the string "(Const * LoopId)" */
            else
                /* Mark iv, then recursively derive */
                /* the iv that defines this iv. */
            end if
        end
    end derive_expr

Figure 1: Initial program analysis.

Fig. 2 shows a sample behavior and its CDFG annotated with symbolic expressions. The load from A builds the expression "((8 * L0) + (4 * L0))" which is the addition of 2 (the const for iv j) times 4 (the element size) and 1 (the const for iv i) times 4. The second loop over k adds the expression "(400 * L1)." Finally the base address of A is added. For the store operation, the expression "(12 * L0)" is created which is 1 times 4 times 3 (the constant in the behavior). Due to the +1 in the index expression, the constant 4 is added to the base address of A.
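The arithmetic behind these strings can be reproduced by folding each iv's step and scale into a per-loop coefficient. This is a minimal sketch under stated assumptions: the `symbolic_expr` helper and its `(loop_id, iv_step, scale_in_bytes)` term format are hypothetical, offsets are taken relative to the base of A, and initial values of the iv's are not modeled.

```python
from collections import Counter


def symbolic_expr(base_offset, iv_terms):
    """Fold (loop_id, iv_step, scale_in_bytes) terms into per-loop coefficients.

    Returns (offset from the array base, {loop_id: coefficient}).
    """
    coeffs = Counter()
    for loop_id, iv_step, scale in iv_terms:
        coeffs[loop_id] += iv_step * scale
    return base_offset, dict(coeffs)


ELEM = 4     # element size used in the Fig. 2 discussion
ROW = 400    # coefficient of the k loop in the text (assumed: one row of A)

# Load A[k][i+j]: j advances by 2 and i by 1 per L0 iteration, k by 1 per L1.
load = symbolic_expr(0, [("L0", 2, ELEM), ("L0", 1, ELEM), ("L1", 1, ROW)])
# Store A[k][3i+1]: the factor 3 scales the i term; the +1 adds one element.
store = symbolic_expr(1 * ELEM, [("L0", 3, ELEM), ("L1", 1, ROW)])
```

Here `load` carries the coefficients 8 + 4 = 12 for L0 and 400 for L1 with offset 0, and `store` carries 12 and 400 with offset 4, matching the expressions given in the text.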

3 Memory Disambiguation

Memory disambiguation is the ability to determine if two memory access instructions are aliases for the same location [1]. In our context, we are interested in static memory disambiguation, or the ability to disambiguate memory references during scheduling. In the general case, memory indexing functions can be arbitrarily complex due to explicit and implicit induction variables and loop index increments. Therefore, a simplistic pattern matching approach to matching loads and stores over loop iterations cannot provide the power of memory aliasing analysis. For instance, in the following behavior, if arrays a and b are aliases:

    for i = 1 to N
        a[i] := 1/2 b[i-1] + 1/3 a[i-2]
        Coef[i] := b[i] + 1
    end

pattern matching will fail to find the redundancy.
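To make the failure concrete, the following sketch compares a purely textual match against an address comparison for the store to a[i] in iteration i and the load of b[i-1] in iteration i+1. The element size, the base address value, and the helper names are assumptions chosen for illustration.

```python
ELEM = 4  # assumed element size in bytes


def address(base, index):
    # Byte address of element `index` in an array starting at `base`.
    return base + ELEM * index


def same_text(ref1, ref2):
    # Naive pattern matching: compare array name and index expression text.
    return ref1 == ref2


base_a = base_b = 1000  # assumption: a and b are aliases (same base address)

# Store to a[i] in iteration i versus load of b[i-1] in iteration i+1.
textual = same_text(("a", "i"), ("b", "i-1"))
by_address = all(
    address(base_a, i) == address(base_b, (i + 1) - 1) for i in range(1, 50)
)
```

The textual comparison reports no match, while the address comparison shows that the two references touch the same location in consecutive iterations.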

In our scheduler, memory disambiguation is based on the well-known greatest common divisor, or GCD, test [2].

    for k = 1 to 100
        j = 0
        for i = 1 to 100
            b = A[k][i+j]
            j = j + 2
            A[k][3i+1] = value
        end
    end

    Load:  (baseA + (((8 * L0) + (4 * L0)) + (400 * L1)))
    Store: ((baseA + 4) + ((12 * L0) + (400 * L1)))

Figure 2: Symbolic expressions example.

Performing memory disambiguation on two operations, op1 and op2, involves determining if the difference equation

    (op1's symbolic expression) - (op2's symbolic expression) = 0

has any integer solution. Fig. 3 contains an algorithm to disambiguate two memory references.

This algorithm works by iterating over all expressions of operations one and two, thereby testing each possible address that the two operations can have. The first step in disambiguating two expressions is to convert them into the sum of products form "((a * b) + ... + (y * z))." Next, operation two's expression is subtracted from operation one's. If the resultant expression is not linear then the disambiguator returns CANT_TELL; otherwise the gcd of the coefficients of the equation is computed. If the gcd does not divide all terms, there is no dependence between op1 and op2.

Returning to the example in Fig. 2, if the load from iteration i+1 is overlapped with the store from iteration i, the disambiguator determines that the updated expression for the load minus the store's expression is 0, exposing the redundancy in loading a value which has just been computed.
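This overlap can be confirmed by replaying the inner loop of the sample behavior and recording which column of A each iteration touches. The sketch below is only a check of the index arithmetic; the `trace_row` helper is hypothetical and it does not model the symbolic expressions themselves.

```python
def trace_row(n):
    """Replay the inner loop of Fig. 2 and record the column indices touched."""
    loads, stores = {}, {}
    j = 0
    for i in range(1, n + 1):
        loads[i] = i + j        # b = A[k][i+j]
        j = j + 2
        stores[i] = 3 * i + 1   # A[k][3i+1] = value
    return loads, stores


loads, stores = trace_row(100)
# The load of iteration i+1 reads the column written by the store of iteration i.
redundant = all(loads[i + 1] == stores[i] for i in range(1, 100))
```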

If the disambiguator cannot determine whether two memory operations refer to the same location, we follow the conservative approach that there is a dependence between them (i.e., no optimization can be done). Assertions (source-level statements such as certain arrays reside in disjoint memory space, absolute bounds on loops, etc.) can be used to reduce this conservatism. Also, providing the user with the information the disambiguator has derived and querying for a result to the dependence question is an alternate, interactive approach.

    function disambiguate(op1, op2)
    begin
        foreach ex1 in op1's expressions
            foreach ex2 in op2's expressions
                /* Convert ex1 and ex2 into sum of products form. */
                /* Set expr to ex1 - ex2. */
                /* If expr is not linear, return CANT_TELL. */
                /* Solve GCD of coefficients of expr. */
                /* If solution exists and divides all terms return EQUAL */
                /* else return NOT_EQUAL. */
            end
        end
    end disambiguate

Figure 3: Disambiguating memory references.
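The core divisibility check of the GCD test can be sketched as follows. This is a textbook formulation rather than the scheduler's code: the function name `gcd_test`, the representation of the difference equation as a coefficient list plus a constant, and the string return values are assumptions. The test ignores loop bounds, so "EQUAL" here only means that an integer solution may exist.

```python
from functools import reduce
from math import gcd


def gcd_test(coeffs, const):
    """Decide whether sum(c * x for c in coeffs) == const can hold for integers x.

    Returns "EQUAL" when an integer solution may exist (possible alias) and
    "NOT_EQUAL" when none can.
    """
    g = reduce(gcd, (abs(c) for c in coeffs), 0)
    if g == 0:                      # no loop-dependent terms remain
        return "EQUAL" if const == 0 else "NOT_EQUAL"
    return "EQUAL" if const % g == 0 else "NOT_EQUAL"
```

For example, `gcd_test([2, -2], 1)` reports no dependence because 2 does not divide 1, whereas `gcd_test([4, -6], 2)` reports a possible alias because gcd(4, 6) = 2 divides 2.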

    function redundant_elimination(op, from_step)
    begin
        if (INVARIANT(op)) then
            status = remove_inv_mem_op(op, from_step)
            /* if op was removed, return REMOVED */
        foreach memory operation, mem_op, in to_step
            if (disambiguate(op, mem_op) == EQUAL) then
                switch op-mem_op
                    case load-load:
                        return do_load_load_opt(op, mem_op)
                    case load-store:
                        status = try_load_store_opt(op, mem_op)
                        /* if op was removed, return REMOVED */
                    case store-store:
                        /* If op's arg and mem_op's arg have */
                        /* the same reaching defs, delete op, */
                        /* and update necessary information. */
                        return REMOVED
                    case store-load:
                        return ANTI-DEPENDENCE
                end
            end if
        end
    end redundant_elimination

Figure 4: Redundant elimination algorithm.

4 Reducing Memory Traffic

Our solution to reducing the amount of memory traffic in HLS is to make explicit the redundancy in memory interaction within the behavior and eliminate those extraneous operations. Our technique is employed during scheduling rather than as a pre-pass or post-pass phase; a pre-pass phase may not remove all redundancy since other optimizations can create opportunities that may not have otherwise existed, while a post-pass phase cannot derive as compact a schedule since operations eliminated on the critical path allow further schedule refinement.

4.1 Algorithm in Detail

Fig. 4 shows the main algorithm for removing unnecessary memory operations. This function is invoked in our Percolation-based scheduler [17] by the move_op transform (or any suitable local code motion routine in other systems) when moving a memory operation into a previous step that contains other memory operations.

The function redundant_elimination checks to see if the memory operation is invariant. If so, then the function remove_inv_mem_op tries to remove it. If it is not invariant or could not be removed, then op is checked against each memory operation in the previous step for possible optimization. If two operations refer to the same location then the appropriate action is taken depending upon their types. The load-after-load and load-after-store cases will be discussed shortly. In the case of a store-after-store, the first operation is dead and can be removed if it stores the same argument as the second and the argument has the same reaching definitions. We choose to simply remove op, rather than removing mem_op and moving op into its place. For the store-after-load, nothing is done, as this is a false (anti-) dependency that should be preserved for correctness. Status reflecting the outcome is returned, allowing operations to continue to move if no redundancy was found.
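The case analysis described above amounts to a dispatch on the kinds of the two operations once they are known to refer to the same location. A minimal sketch, in which the `action_for` helper and the action labels are illustrative names rather than the scheduler's interface:

```python
def action_for(op_kind, mem_op_kind):
    """Map the (op, mem_op) kinds of two same-address operations to an action.

    op is the operation being moved up; mem_op already sits in the earlier step.
    """
    actions = {
        ("load", "load"): "do_load_load_opt",
        ("load", "store"): "try_load_store_opt",
        ("store", "store"): "remove op if reaching defs match",
        ("store", "load"): "ANTI-DEPENDENCE",
    }
    return actions[(op_kind, mem_op_kind)]
```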

4.1.1 Removing Invariants

Removing invariant memory operations is slightly different from general loop invariant removal. Traditional loop invariant removal moves an invariant into a pre-loop time step. For load operations this is correct; for store operations it is not. Conceptually, invariant loads are "inputs" to the loop, while invariant stores are "outputs." Therefore, loads must be placed in pre-loop steps and stores must be placed in loop exit steps.

An algorithm to perform invariant removal appears in Fig. 5. The conditions necessary for loop invariant removal (adapted from [1]) are: 1) the step that op is in must dominate all loop exits (i.e., op must be executed every iteration), 2) only one definition of the variable (for loads) or memory location (for stores) occurs in the loop and 3) no other definition of the variable or memory location reaches their users. Additionally, store operations require that the definition of their argument be the same at the loop exits so that correctness is preserved. If these conditions are met, then the operation can be hoisted out of the loop. If condition 2 fails and the operation is a load, it still might be possible to hoist the operation if a register can be allocated to the loaded value for the duration of the loop.
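The asymmetry between invariant loads and invariant stores can be sketched on a flat list of operations. This is an illustration under assumptions: each operation is a dictionary with hypothetical `kind` and `invariant` fields, and the hoisting conditions listed above are taken as already verified.

```python
def hoist_invariant_mem_ops(body):
    """Split a loop body into (pre_loop, remaining_body, loop_exit) op lists.

    Each op is a dict with keys "kind" ("load" or "store") and "invariant".
    Assumes the hoisting conditions were already verified for invariant ops.
    """
    pre_loop, remaining, loop_exit = [], [], []
    for op in body:
        if op["invariant"] and op["kind"] == "load":
            pre_loop.append(op)      # invariant loads are inputs to the loop
        elif op["invariant"] and op["kind"] == "store":
            loop_exit.append(op)     # invariant stores are outputs of the loop
        else:
            remaining.append(op)
    return pre_loop, remaining, loop_exit


body = [
    {"name": "load a[i]", "kind": "load", "invariant": True},
    {"name": "load b[i][j]", "kind": "load", "invariant": False},
    {"name": "store b[i][j]", "kind": "store", "invariant": False},
    {"name": "store a[i]", "kind": "store", "invariant": True},
]
pre_loop, remaining, loop_exit = hoist_invariant_mem_ops(body)
```

Applied to the inner loop of the introductory behavior, the load of a[i] moves to the pre-loop step and the store to a[i] moves to the loop exit, leaving only the accesses to b[i][j] in the body.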

4.1.2 Load-After-Load Optimization

The load-after-load optimization is applied in situations where a load operation accesses a memory value that has been previously loaded and no intervening modification has occurred to that location's value (i.e., there is no intervening store). The load-after-load optimization is found in Fig. 5. The idea behind this optimization is to insert move operations into nodes in mem_op's latency field which will transfer the value without re-loading it. As a matter of correctness, move operations are only inserted into the nodes in mem_op's latency field if op's definition reaches those nodes. Finally, op is deleted from the program graph and the local information is updated.

    function remove_inv_mem_op(op, from_step)
    begin
        /* Conditions necessary for hoisting: */
        /* 1. from_step must dominate all exit nodes. */
        /* 2. Only one definition exists. */
        /* 3. No other defs reach users. */
        /* 4. (stores) Defs of argument are same at loop exits. */
        if (/* conditions met */) then
            /* Move op to pre-loop steps if it's a load */
            /* and all post-loop steps if it's a store. */
            return REMOVED
        end if
        return NO_OPT
    end remove_inv_mem_op

    function do_load_load_opt(op, mem_op)
    begin
        /* set field to the nodes at the latency of mem_op */
        foreach node in field
            if (/* node is reachable by op */) then
                /* Create move from mem_op's arg to the */
                /* arg of op. Add this move to node. */
            end if
        /* Delete op and update necessary information. */
        return REMOVED
    end do_load_load_opt

Figure 5: Supporting removal routines.

Although move operations are introduced into the schedule, the number of registers used does not increase (a proof appears in [9]).
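The effect of the load-after-load optimization can be illustrated on straight-line code. The sketch below is a simplification under stated assumptions: operations are hypothetical `(kind, register, address)` tuples, equal address strings stand for references the disambiguator has already judged EQUAL (and distinct strings for distinct locations), and latency fields and reachability are not modeled.

```python
def forward_repeated_loads(ops):
    """Rewrite a straight-line list of ("load"|"store", register, address) ops.

    A load whose address is already held in a register, with no store to that
    address in between, becomes ("move", dest, src).
    """
    available = {}   # address -> register currently holding its value
    out = []
    for kind, reg, addr in ops:
        if kind == "load":
            src = available.get(addr)
            if src is None:
                out.append(("load", reg, addr))
            elif src != reg:
                out.append(("move", reg, src))
            # reg is overwritten: drop stale entries that named reg
            available = {a: r for a, r in available.items() if r != reg}
            available.setdefault(addr, reg)
        else:
            out.append((kind, reg, addr))
            available.pop(addr, None)   # an intervening store ends the reuse
    return out


ops = [
    ("load", "r1", "a[i]"),
    ("load", "r2", "b[i]"),
    ("load", "r3", "a[i]"),    # repeated load, no store in between
    ("store", "r2", "a[i]"),
    ("load", "r4", "a[i]"),    # follows a store; left for load-after-store
]
result = forward_repeated_loads(ops)
```

Only the third operation is rewritten, into a move from r1 to r3; the final load follows a store to the same address and is therefore outside the scope of this particular sketch.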

4.1.3 Load-After-Store Optimization

The load-after-store optimization is used to remove a load operation which accesses a value that a store operation previously wrote to the memory. Due to limited resources it is possible that this optimization cannot be applied. Consider the partial code fragment:

    Step 1:  a[i] := b    b := ...
    Step 2:  c := a[i]

Eliminating the load c := a[i] and replacing it with the move operation c := b in step 2 would violate program semantics because it introduces a read-wrong conflict: b is redefined in step 1, so a move in step 2 would read the new value of b. The move operation must be placed in step 1 to guarantee correct results. However, in this code fragment:

    Step 1:  a[i] := b    c := ...
    Step 2:  c := a[i]    d := f(c)

placing a move operation in step 1 will violate program semantics because it introduces a write-live conflict; the move must be inserted into step 2. Notice that in both cases the transformation is still possible, but analysis is required to determine which step is applicable.

    function try_load_store_opt(op, from_step, mem_op, to_step)
    begin
        node = from_step
        if (/* there is a read-wrong conflict */) then
            node = to_step
        end if
        if (/* there is a write-live conflict */) then
            if (/* free cell exists */) then
                /* Create move of mem_op's arg to free cell. */
                /* Add move op to to_step. */
                /* Create move of free cell to op's arg. */
                /* Add move op to from_step. */
            else
                return NO_OPT
        else
            /* Create move of mem_op's arg to op's arg. */
            /* Add move op to node. */
        end if
        /* Delete op and update necessary information. */
        return REMOVED
    end try_load_store_opt

Figure 6: Load-After-Store Algorithm.

This optimization might not be feasible in the following situation:

    Step 1:  a[i] := b    b := ...    c := ...
    Step 2:  c := a[i]    d := f(c)

Semantics are violated by placing c := b into either time step. However, if a free storage cell exists, then the optimization can be done:

    Step 1:  a[i] := b    b := ...    c := ...    e := b
    Step 2:  c := e       d := f(c)

Therefore, the precise case when the load-after-store optimization fails to remove a redundant load is composed of three conditions:

1. A move in this step results in a read-wrong.

2. A move in the previous step results in a write-live.

3. No free storage cell exists in the previous time step.

In practice, this situation occurs very infrequently.

The load-after-store optimization algorithm is found in Fig. 6. This algorithm determines in which step to place a move operation. Initially, the step that op is in is tried. If a read-wrong conflict occurs, the previous step is tried. If a write-live conflict arises, a free cell is necessary to transfer the value. In this case, two move operations are added to the schedule. If a free cell is not available, no optimization is done. If no conflicts occur (or they can be alleviated by switching steps) then a move operation is inserted. Finally, the load operation is deleted and necessary information updated.
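The order in which the steps are tried can be written as a small decision function. This sketch follows the prose description (the load's own step first, then the previous step, then a free cell) rather than transcribing Fig. 6 line by line; the function name `place_move`, its boolean parameters, and the textual move descriptions are assumptions for illustration.

```python
def place_move(read_wrong_in_from_step, write_live_in_to_step, free_cell):
    """Return the moves that replace a load made redundant by an earlier store.

    from_step holds the load (op); to_step is the previous step with the store.
    Returns a list of (step, move) pairs, or None when no optimization applies.
    """
    if not read_wrong_in_from_step:
        return [("from_step", "op.arg := mem_op.arg")]
    if not write_live_in_to_step:
        return [("to_step", "op.arg := mem_op.arg")]
    if free_cell:
        return [("to_step", "cell := mem_op.arg"),
                ("from_step", "op.arg := cell")]
    return None
```

The four code fragments of this section correspond to the four outcomes: a read-wrong alone places the move in the previous step, a write-live alone places it in the load's step, both conflicts with a free cell yield two moves, and both conflicts without a free cell leave the load in place.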

4.2 Example

Applying our transformation to the behavior in the introduction will eliminate the loads of b[i][j-1] and b[i][j-2] and the invariant load and store to a[i]. During loop pipelining, the load of b[i][j-2] for iteration j+1 is the same as b[i][j-1] from iteration j since (j+1) - 2 = j - 1. For the invariants, the store cannot be removed unless the load is also removed. Once the load is hoisted, the store can then be hoisted as well.

5 Experiments and Results

Four memory-intensive benchmarks were used to study our transformation: three numerical algorithms (prefix sums, tri-diagonal elimination and general linear recurrence equations), which are core routines in many algorithms (as discussed in the introduction), adapted from [10], and a two-dimensional hydrodynamics implicit computation adapted from [20].

Latencies used for scheduling these behaviors were two steps for add/subtract, three steps for multiply, and five steps for load/store. Also, the memory model adopted here assumed that:

- memory ports are homogeneous,

- each port has its own address calculator,

- the memory is pipelined with no bank conflicts.

With these assumptions, two experiments were conducted. In the first, schedules were generated with the number of memory ports constrained between one and four and no functional unit (FU) constraints. Two schedules were produced for each benchmark, the sole difference between them being the application of our transformation. The goal of this experiment was to isolate the difference in transformed schedules without the bias of FU constraints. In the second experiment, schedules were generated with one to four memory ports, two adder units and one multiplier unit. This experiment was designed to study performance in the presence of realistic FU resources.

For each experiment, the number of steps in the schedule of the innermost loop was counted. The GLR equations benchmark (marked with a ?) has two loops at the same innermost nesting level; the results indicate the summation of the number of steps in both loops. The results of experiments one and two are found in Tables 1 and 2, respectively. The column labelled "RE" indicates application of our transformation. The columns collectively labelled "Number of Ports" contain the number of steps in the innermost loop for the respective FU and memory port parameters.

The results for experiment one (Table 1) demonstrate that this optimization considerably reduces the number of cycles for the inner loop. In the prefix sums and tri-diagonal elimination benchmarks, a performance limited by the latency of a load is achieved with a sufficient number of ports. Since a latency of 5 cycles was used for load operations and not all loads can be eliminated, the schedule length cannot be any shorter. The same characteristic is exhibited by the GLR equations benchmark, although computational latency causes a longer schedule length, while the hydrodynamics benchmark exhibits im-