Development of Multi-Sensor Global Cloud and Radiance Composites for Earth Radiation Budget Monitoring from DSCOVR

Konstantin Khlopenkov*ᵃ, David Dudaᵃ, Mandana Thiemanᵃ, Patrick Minnisᵃ, Wenying Suᵇ, and Kristopher Bedkaᵇ

ᵃScience Systems and Applications Inc., Hampton, VA 23666; ᵇNASA Langley Research Center, Hampton, VA 23681.

ABSTRACT

The Deep Space Climate Observatory (DSCOVR) enables analysis of the daytime Earth radiation budget via the onboard Earth Polychromatic Imaging Camera (EPIC) and National Institute of Standards and Technology Advanced Radiometer (NISTAR). Radiance observations and cloud property retrievals from low Earth orbit and geostationary satellite imagers must be collocated with EPIC pixels to provide the scene identification needed to select the anisotropic directional models used to calculate shortwave and longwave fluxes.

A new algorithm is proposed for optimal merging of selected radiances and cloud properties derived from multiple satellite imagers to obtain seamless global hourly composites at 5-km resolution. An aggregated rating is employed to incorporate several factors and to select the best observation at the time nearest to the EPIC measurement. Spatial accuracy is improved using inverse mapping with gradient search during reprojection and bicubic interpolation for pixel resampling.

The composite data are subsequently remapped into EPIC-view domain by convolving composite pixels with the EPIC

point spread function defined with a half-pixel accuracy. PSF-weighted average radiances and cloud properties are

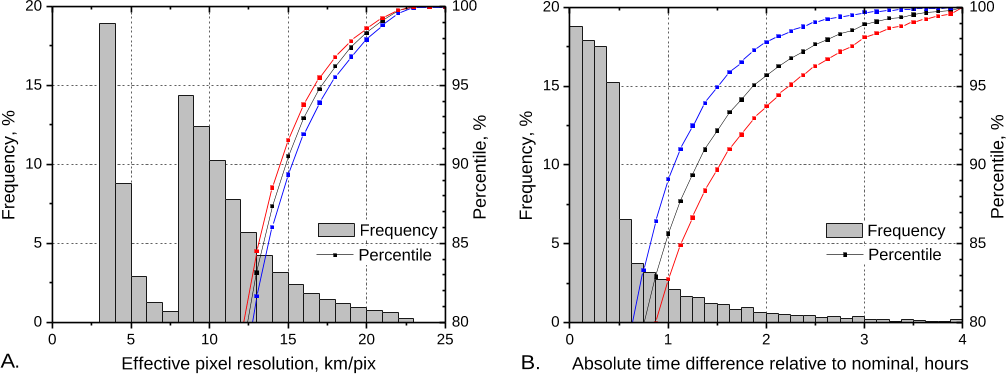

computed separately for each cloud phase. The algorithm has demonstrated contiguous global coverage for any

requested time of day with a temporal lag of under 2 hours in over 95% of the globe.

Keywords: DSCOVR, radiation budget, cloud properties, image processing, composite, subpixel, point spread function.

1. INTRODUCTION

The Deep Space Climate Observatory (DSCOVR) was launched in February 2015 to reach a looping halo orbit around Lagrangian point 1 (L1), with a spacecraft-Earth-Sun angle varying from 4 to 15 degrees [1, 2]. The Earth science instruments consist of the Earth Polychromatic Imaging Camera (EPIC) and the National Institute of Standards and Technology Advanced Radiometer (NISTAR) [3]. The vantage point from L1, unique to Earth science, provides for continuous monitoring of the Earth's reflected and emitted radiation and enables analysis of the daytime Earth radiation budget. This goal requires calculating the albedo and the outgoing longwave radiation using a combination of NISTAR, EPIC, and other imager-based products. The process first involves deriving and merging cloud properties and radiation estimates from low Earth orbit (LEO) and geosynchronous (GEO) satellite imagers. These properties are then spatially averaged and collocated to match the EPIC pixels, providing the scene identification needed to select anisotropic directional models (ADMs). Finally, shortwave (SW) and longwave (LW) anisotropic factors are computed for each EPIC pixel and convolved with EPIC reflectances to determine single average SW and LW factors, which are used to convert the NISTAR-measured radiances to global daytime SW and LW fluxes [4, 5].
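The final conversion step can be illustrated with a minimal sketch. It assumes the conventional ADM relation, in which flux equals π times radiance divided by the anisotropic factor, and it forms the single disk-average factor as a reflectance-weighted mean of per-pixel factors. The helper names, the handling of invalid pixels, and the toy numbers are hypothetical and are not taken from [4, 5], which describe the actual formulation.

```python
import numpy as np


def weighted_anisotropic_factor(aniso, refl):
    """Reflectance-weighted mean of per-pixel anisotropic factors.

    Hypothetical helper: pixels with non-finite values or non-positive
    reflectance are ignored.
    """
    aniso = np.asarray(aniso, dtype=float)
    refl = np.asarray(refl, dtype=float)
    ok = np.isfinite(aniso) & np.isfinite(refl) & (refl > 0)
    return float(np.sum(aniso[ok] * refl[ok]) / np.sum(refl[ok]))


def radiance_to_flux(radiance, mean_factor):
    """Assumed conventional ADM inversion: F = pi * L / R."""
    return np.pi * radiance / mean_factor


# Toy 2x2 "EPIC" scene; the factors and reflectances are made up.
aniso = np.array([[1.05, 0.95], [1.20, np.nan]])
refl = np.array([[0.30, 0.10], [0.60, 0.40]])
r_mean = weighted_anisotropic_factor(aniso, refl)  # NaN pixel is skipped
flux = radiance_to_flux(100.0, r_mean)
```

Here the pixel with an undefined factor drops out of both the numerator and the denominator, so it does not bias the average toward zero.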

The EPIC imager delivers 2048×2048-pixel imagery in 10 spectral bands, all shortwave, from 317 to 780 nm, while NISTAR measures the full Earth disk radiance at the top of the atmosphere (TOA) in three broadband spectral windows: 0.2–100, 0.2–4, and 0.7–4 µm. Although NISTAR can provide accurate TOA radiance measurements, the low resolution of EPIC imagery (discussed further below) and its lack of infrared channels diminish its usefulness in obtaining details on small-scale surface and cloud properties [6]. Previous studies [7] have shown that these properties strongly influence the anisotropy of the radiation at the TOA, and ignoring such effects can result in large TOA flux errors. To overcome this problem, high-resolution scene identification is derived from the radiance observations and cloud property retrievals from LEO imagers (including NASA Terra and Aqua MODIS, and NOAA AVHRR) and from a global constellation of GEO satellite imagers, which include the Geostationary Operational Environmental Satellites (GOES) operated by NOAA, the Meteosat satellites operated by EUMETSAT, and the Multifunctional Transport Satellites (MTSAT) and Himawari-8 satellites operated by the Japan Meteorological Agency (JMA).

* konstantin.khlopenkov@nasa.gov; phone 1 757 951-1914; fax 1 757 951-1900; www.ssaihq.com

The NASA Clouds and the Earth's Radiant Energy System (CERES) [8] project was designed to monitor the Earth's energy balance in the shortwave and longwave broadband wavelengths. For the SYN1deg Edition 4 product [9], hourly imager radiances are obtained from 5 contiguous GEO satellite positions (including GOES-13 and -15, METEOSAT-7 and -10, MTSAT-2, and Himawari-8). The GEO data used for CERES were obtained from the Man-computer Interactive Data Access System (McIDAS) [10] archive, which collects data from its antenna systems in near real time. The imager radiances are then used to retrieve cloud and radiative properties using the CERES Cloud Subsystem group algorithms [11, 12]. Radiative properties are derived from GEO and MODIS data following the method described in [13] to calculate the broadband shortwave albedo, and following a modified version of the radiance-based approach of [9] to calculate the broadband longwave flux. For the first-generation GEO imagers, the visible (0.65 µm), water vapor (WV, 6.7 µm), and IR window (11 µm) channels are used in the cloud algorithm. For the second-generation GEO imagers, the solar IR (SIR, 3.9 µm) and split-window (12 µm) channels are added. On GOES-12 through -15, the split-window channel is replaced with a CO₂-slicing channel (13.2 µm). The native nominal pixel resolution varies with wavelength and satellite, and the channel data are sub-sampled to a resolution of ~8–10 km/pixel. For MODIS, the algorithm uses every fourth 1-km pixel and every other scan line and yields hourly datasets of 339 pixels by about 12000 lines. A long-term cloud and radiation property data product has also been developed using AVHRR Global Area Coverage (GAC) imagery [13], which has been georeferenced to sub-pixel accuracy [14]. These datasets have 409 pixels by about 12800 lines, matching the 4 km/pixel resolution and temporal coverage of the original AVHRR Level 1B GAC data.
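The MODIS subsampling amounts to strided slicing of each 1-km band. The sketch below assumes NumPy arrays, a scan width of 1354 pixels (the standard MODIS 1-km swath width, which reproduces the 339-pixel row length quoted above), and that subsampling starts at the first pixel and first line; the function name is hypothetical.

```python
import numpy as np

MODIS_1KM_WIDTH = 1354  # pixels per 1-km MODIS scan line (assumed L1B width)


def subsample_modis(band):
    """Keep every other scan line and every fourth 1-km pixel.

    Starting at line 0 and pixel 0 is an assumption of this sketch.
    """
    return band[::2, ::4]


# Toy band with 2030 scan lines, filled with zeros.
granule = np.zeros((2030, MODIS_1KM_WIDTH), dtype=np.float32)
sub = subsample_modis(granule)
print(sub.shape)  # (1015, 339)
```

Because 1354 is not a multiple of 4, the last retained pixel is index 1352, which gives 339 columns rather than 338.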

This work presents an algorithm for optimal merging of selected radiances and cloud properties derived from multiple satellite imagers to obtain a seamless global composite product at 5-km resolution. These composite images are produced for each observation time of the EPIC instrument (typically 300–500 composites per month). In the next step, for accurate collocation with EPIC pixels, the global composites are remapped into the EPIC-view domain using geolocation information supplied in EPIC Level 1B data.

2. GENERATION OF GLOBAL GEO/LEO COMPOSITES

The input GEO, AVHRR, and MODIS datasets are carefully pre-processed using a variety of data quality control algorithms, including both automated and human-analyzed techniques. This is especially important for the GEO satellite data, which frequently contain bad scan lines and/or other artifacts that cause data quality issues even though the radiance values are within a physical range. Improvements to the quality of the imager data include a detector de-striping algorithm [15] and an automated system for detecting and filtering transmission noise and corrupt data [16]. The CERES Clouds Subsystem has pioneered these and other data quality tests to remove as many satellite artifacts as possible.

The global composites are produced on a rectangular latitude/longitude grid with a constant angular spacing of exactly 1/22 degree, which is about 5 km per pixel near the Equator, resulting in a grid of 7920×3960 pixels. Each source data image is remapped onto this grid by means of inverse mapping, which uses the latitude and longitude coordinates of a pixel in the output grid to search for the corresponding sample location in the input data domain (provided that the latitude and longitude are known for every pixel of the input data). This process employs the concurrent gradient search described in [17], which uses local gradients of the latitude and longitude fields of the input data to locate the sought sample. This search is computationally efficient and yields a fractional row/column position, which is then used to interpolate the adjacent data samples (such as reflectance or brightness temperature) by means of a 6×6-point resampling function. At the image boundaries, the missing contents of the 6×6 window are padded by replicating the edge pixel values. The image resampling operations are implemented as Lanczos filtering [18] extended to the 2D case with the parameter a = 3. This interpolation method is based on the sinc filter, which is known to be the optimal reconstruction filter for band-limited signals such as digital imagery. For discrete input data, such as cloud phase or surface type, nearest-neighbor sampling is used instead of interpolation. The described remapping process preserves as much as possible of the spatial accuracy of the input imagery, which has a nominal resolution of 4–8 km/pixel at the satellite nadir.
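The remapping chain described above (output grid, inverse mapping by gradient search, 6×6 Lanczos resampling with edge replication) can be sketched in NumPy as follows. The grid constants follow the text; the north-up cell-center convention, the Newton-style iteration used here as a stand-in for the concurrent gradient search of [17], and all function names are assumptions for illustration rather than the authors' implementation.

```python
import numpy as np

PIX_PER_DEG = 22  # exactly 1/22-degree spacing (from the text)
N_COLS, N_ROWS = 360 * PIX_PER_DEG, 180 * PIX_PER_DEG  # 7920 x 3960


def grid_center(row, col):
    """Lat/lon of an output cell center (north-up, from -180 deg: assumed)."""
    return 90.0 - (row + 0.5) / PIX_PER_DEG, -180.0 + (col + 0.5) / PIX_PER_DEG


def gradient_search(lat, lon, lat_t, lon_t, start=None, max_iter=20):
    """Fractional (row, col) in the input swath whose geolocation matches
    (lat_t, lon_t), found with Newton steps on local lat/lon gradients.

    `start` may be the result for a neighboring output pixel (warm start).
    Longitude wrap-around at +/-180 deg is ignored in this sketch.
    """
    d_lat_r, d_lat_c = np.gradient(lat)  # in practice, precompute once
    d_lon_r, d_lon_c = np.gradient(lon)
    nr, nc = lat.shape
    if start is None:
        r, c = nr // 2, nc // 2
    else:
        r = int(np.clip(round(start[0]), 0, nr - 1))
        c = int(np.clip(round(start[1]), 0, nc - 1))
    for _ in range(max_iter):
        jac = np.array([[d_lat_r[r, c], d_lat_c[r, c]],
                        [d_lon_r[r, c], d_lon_c[r, c]]])
        resid = np.array([lat_t - lat[r, c], lon_t - lon[r, c]])
        dr, dc = np.linalg.solve(jac, resid)
        r_new = int(np.clip(np.rint(r + dr), 0, nr - 1))
        c_new = int(np.clip(np.rint(c + dc), 0, nc - 1))
        if (r_new, c_new) == (r, c):  # nearest pixel no longer changes
            return r + dr, c + dc
        r, c = r_new, c_new
    return None  # no convergence: treat the target as outside the swath


def lanczos_weights(frac, a=3):
    """Normalized Lanczos weights for taps -a+1..a around offset `frac`."""
    x = frac - np.arange(-a + 1, a + 1)
    w = np.where(np.abs(x) < a, np.sinc(x) * np.sinc(x / a), 0.0)
    return w / w.sum()


def lanczos_sample(img, row, col, a=3):
    """2D Lanczos interpolation on a 2a x 2a window (6x6 for a = 3).

    Clipping the indices replicates the edge pixels at image boundaries.
    """
    r0, c0 = int(np.floor(row)), int(np.floor(col))
    taps = np.arange(-a + 1, a + 1)
    rows = np.clip(r0 + taps, 0, img.shape[0] - 1)
    cols = np.clip(c0 + taps, 0, img.shape[1] - 1)
    window = img[np.ix_(rows, cols)]
    return float(lanczos_weights(row - r0, a) @ window @ lanczos_weights(col - c0, a))
```

In a full remapping loop, the position found for one output pixel could be passed as `start` for the next one, so that each search needs only a step or two; discrete fields such as cloud phase would instead take the nearest input pixel, `np.rint` of the returned position.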

After the remapping, the new data are merged with the data already in the global composite. Composite pixels are replaced with the new samples only if their quality is lower than that of the new data. The quality is measured by a specially designed rating $R$, which incorporates five parameters: nominal satellite resolution $F_{\mathrm{resolution}}$, pixel time relative to the EPIC time $t$, viewing zenith angle $\theta$, distance from the day/night terminator $F_{\mathrm{terminator}}$, and sun glint factor $F_{\mathrm{glint}}$:

$$R = \frac{F_{\mathrm{resolution}}\,F_{\mathrm{terminator}}\,F_{\mathrm{glint}}\,(0.2 + 0.8\cos\theta)}{\left[1 + (t/\tau)^{1.5}\right]^{2}} \tag{1}$$

where $t$ is the absolute difference, in hours, between the original observation time of a given sample and the EPIC observation time, which defines the nominal time of the whole composite. If a satellite observation occurred long before or long after the nominal time, that data sample is given a lower rating and thus will likely be replaced with data from another source observed closer in time. The characteristic time $\tau$ controls the attenuation rate of the rating $R$ with increasing time difference. Here $\tau$ = 5 hours is used, which is justified further below. With this value, a time difference of 2.8 hours decreases the rating by a factor of about 2. Observations with a time difference of more than 4 hours are not processed at all.
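Equation (1) translates directly into a vectorized function. The sketch below uses the $\tau$ and $F_{\mathrm{resolution}}$ values quoted in the text; the dictionary keys, the choice to return a zero rating beyond the 4-hour cutoff, and the function name are assumptions. It also reproduces the statement that a 2.8-hour lag roughly halves the rating, since $[1 + (2.8/5)^{1.5}]^2 \approx 2.01$.

```python
import numpy as np

TAU_HOURS = 5.0     # characteristic time tau (from the text)
MAX_DT_HOURS = 4.0  # larger time differences are not processed (from the text)

# F_resolution values quoted in the text; the dictionary keys are illustrative.
F_RESOLUTION = {
    "METEOSAT-7": 100.0,
    "MTSAT-1R": 220.0,
    "MTSAT-2": 220.0,
    "Himawari-8": 220.0,
    "other GEO": 210.0,
    "MODIS": 185.0,
    "AVHRR (NOAA)": 140.0,
}


def rating(f_resolution, f_terminator, f_glint, vza_deg, dt_hours, tau=TAU_HOURS):
    """Aggregated rating R of Eq. (1).

    Returning 0 beyond MAX_DT_HOURS is an assumption standing in for
    "not processed at all".
    """
    dt = np.abs(np.asarray(dt_hours, dtype=float))
    view = 0.2 + 0.8 * np.cos(np.radians(vza_deg))  # >= 0.2 for VZA up to 90 deg
    decay = (1.0 + (dt / tau) ** 1.5) ** 2           # equals 1 when dt = 0
    r = f_resolution * f_terminator * f_glint * view / decay
    return np.where(dt > MAX_DT_HOURS, 0.0, r)


ratio = rating(1, 1, 1, 0.0, 0.0) / rating(1, 1, 1, 0.0, 2.8)
geo = rating(F_RESOLUTION["other GEO"], 1, 1, 60.0, 0.5)
modis = rating(F_RESOLUTION["MODIS"], 1, 1, 10.0, 2.0)
```

With these values, a GEO sample viewed at 60° VZA and 0.5 h from the EPIC time narrowly outranks a MODIS sample viewed at 10° VZA and 2 h away (R ≈ 118 versus 116 with the other factors set to 1), which illustrates the trade-off between resolution, view angle, and time lag that the rating is meant to balance.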

Observations with a larger viewing zenith angle (VZA) have a lower spatial resolution, so the numerator in Equation (1) reduces the rating accordingly. At a very high VZA, observations may still be usable when there is no alternative data source to fill in the gap; therefore, the numerator does not decrease to zero. The $F_{\mathrm{resolution}}$ factor describes a subjective preference for a particular satellite due to its nominal resolution or other factors. It is set to 100 for METEOSAT-7; 220 for MTSAT-1R, MTSAT-2, and Himawari-8; 210 for all other GEO satellites; 185 for MODIS Terra and Aqua; and 140 for all NOAA satellites. This is designed to prefer GEO satellites in equatorial and mid-latitude regions and to help the overall continuity of the composite. Also, AVHRR lacks the water vapor channel (6.7 µm), which is critical for correct retrieval of cloud properties, and therefore AVHRR data are assigned a lower initial rating. The $F_{\mathrm{glint}}$ factor is designed to reduce the pixel's rating in the vicinity of sun glint and is calculated as follows:

2

2

glint

92.01

1

15.01

b

F

(2)

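with

$$b = \begin{cases} \cos\beta, & \text{if } \cos\beta > 0.92, \\ 0.92, & \text{otherwise,} \end{cases}$$

and

$$\cos\beta = \cos\theta_0\cos\theta + \sin\theta_0\sin\theta\cos\varphi \tag{3}$$

where $\theta_0$ is the solar zenith angle and $\varphi$ is the relative azimuth angle.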
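Equation (3) and the clipped quantity $b$ are straightforward to evaluate; the sketch below stops at $b$ and does not reproduce $F_{\mathrm{glint}}$ itself. Angles are assumed to be in degrees, and the function names are hypothetical. With Eq. (3) as written, $\cos\beta = 1$ when the viewing direction is the specular one ($\theta = \theta_0$, $\varphi = 0$), and the 0.92 threshold corresponds to a glint angle of about 23°.

```python
import numpy as np

B_MIN = 0.92  # lower clip for b (from the text)


def cos_glint_angle(sza_deg, vza_deg, raz_deg):
    """cos(beta) from Eq. (3); raz_deg is the relative azimuth angle."""
    t0, t, phi = np.radians(sza_deg), np.radians(vza_deg), np.radians(raz_deg)
    return np.cos(t0) * np.cos(t) + np.sin(t0) * np.sin(t) * np.cos(phi)


def glint_b(sza_deg, vza_deg, raz_deg):
    """b = cos(beta) inside the glint zone (cos(beta) > 0.92), else 0.92."""
    return np.maximum(cos_glint_angle(sza_deg, vza_deg, raz_deg), B_MIN)
```

Using `np.maximum` is equivalent to the two-case definition of $b$, because both branches give 0.92 at the threshold.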

Finally, the $F_{\mathrm{terminator}}$ factor is designed to give lower priority to pixels around the day/night terminator, which may have lower-quality cloud property retrievals from the daytime algorithm. It is calculated as:

$$F_{\mathrm{terminator}} = 0.625 - 0.375\cos(88.5 - \theta_0) \tag{4}$$

where $\theta_0$ is taken in degrees, but the argument of the cosine is treated as radians and is clipped to the range of […].

Overall, the use of such an aggregated rating merges multiple input factors into a single number that can be compared directly, which allows more flexibility in choosing between two candidate pixels. A fixed-threshold approach would be more difficult to implement for multiple factors and harder to fine-tune to achieve reliable and consistent results, and it would still cause discontinuities in the final composite. For example, a moderate-resolution off-nadir observation may still be usable when all other candidates occurred too far in time. The opposite situation is also possible. The way all the factors are accounted for in Equation (1) allows an optimal compromise between such competing criteria.
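The replacement rule (a composite pixel is overwritten only when the new sample has a strictly higher rating, and then all of its remapped parameters are copied together) can be sketched as a masked update. The dictionary-of-arrays layout, the treatment of NaN ratings as empty pixels, and the names are assumptions for illustration.

```python
import numpy as np


def merge_into_composite(comp, comp_rating, new, new_rating):
    """Overwrite composite pixels wherever the new sample rates higher.

    comp and new map parameter names to same-shaped 2D arrays; every
    parameter of a winning pixel is copied, so values are never mixed
    between satellites. A NaN rating marks an empty pixel and never wins.
    """
    old_r = np.where(np.isnan(comp_rating), -np.inf, comp_rating)
    new_r = np.where(np.isnan(new_rating), -np.inf, new_rating)
    better = new_r > old_r
    for name, values in new.items():
        comp[name] = np.where(better, values, comp[name])
    return comp, np.where(better, new_rating, comp_rating)


comp = {"bt108": np.array([[250.0, 260.0]]), "sat_id": np.array([[1, 1]])}
comp_r = np.array([[100.0, np.nan]])  # second pixel is still empty
new = {"bt108": np.array([[255.0, 265.0]]), "sat_id": np.array([[2, 2]])}
new_r = np.array([[90.0, 50.0]])
comp, comp_r = merge_into_composite(comp, comp_r, new, new_r)
```

Processing the candidate satellites one after another with this update leaves, in each pixel, the observation with the highest rating, independent of the processing order.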

Once the rating comparison indicates that a pixel in the composite is to be replaced with the input data, all parameters (already remapped) associated with that particular satellite observation are copied to the composite pixel. These parameters are listed in Table 1. A few limitations are worth mentioning. First, the near-infrared (NIR) channel is absent on GEO imagers, so the reflectance in the 0.86 µm band is flagged as a missing value for composite pixels that originate from GEO satellites. Similarly, the 6.7 µm water vapor band is absent for AVHRR pixels. On GOES-12, -13, -14, and -15, the split-window brightness temperature (BT) is measured in the 13.5 µm band instead of 12 µm; for this reason, the BT at 12.0 µm is also flagged as a missing value for composite pixels originating from those four satellites. The surface type information is taken from the International Geosphere-Biosphere Programme (IGBP) map [19], with the addition of snow and ice flags taken from the NOAA daily snow and ice cover maps at 1/6 deg spatial resolution. The time relative to the EPIC observation is the $t$ from Equation (1), but converted to seconds and stored as a signed integer number. The satellite ID is an integer number unique to each satellite, which indicates the origin of a given composite pixel.

Table 1. List of parameters included in global composite.

| # | Parameter | AVHRR | MODIS | GEOs |
|---|---|---|---|---|
| 1 | Latitude | | | |
| 2 | Longitude | | | |
| 3 | Solar Zenith Angle | | | |
| 4 | View Zenith Angle | | | |
| 5 | Relative Azimuth Angle | | | |
| 6 | Reflectance in 0.63 µm | 0.63 µm | 0.63 µm | 0.65 µm |
| 7 | Reflectance in 0.86 µm | 0.83 µm | 0.83 µm | n/a |
| 8 | BT in 3.75 µm | 3.75 µm | 3.75 µm | 3.9 µm |
| 9 | BT in 6.75 µm | n/a | 6.70 µm | 6.8 µm |
| 10 | BT in 10.8 µm | 10.8 µm | 10.8 µm | 10.8 µm |
| 11 | BT in 12.0 µm | 12.0 µm | 11.9 µm | 12/13.5 µm |
| 12 | SW Broadband Albedo | | | |
| 13 | LW Broadband Flux | | | |
| 14 | Cloud Phase | | | |
| 15 | Cloud Optical Depth | | | |
| 16 | Cloud Effective Particle Size | | | |
| 17 | Cloud Effective Height | | | |
| 18 | Cloud Top Height | | | |
| 19 | Cloud Effective Temperature | | | |
| 20 | Cloud Effective Pressure | | | |
| 21 | Skin Temperature (retrieved) | | | |
| 23 | Surface Type (from IGBP + snow/ice flags) | | | |
| 24 | Time relative to EPIC (± 3.5 hours maximum) | | | |
| 25 | Satellite ID | | | |

Figure 1. Global composite map of the brightness temperature in the 10.8 µm channel generated for Sep-15-2015 13:23 UTC. The continuous coverage leaves no gaps or apparent disruptions in the temperature field.

A typical result of the compositing algorithm is presented in Figure 1, which shows an example map of the brightness temperature in the 10.8 µm channel composited for Sep-15-2015 13:23 UTC. The composite image presents continuous coverage with no gaps and no artificial breaks or disruptions in the temperature data. A corresponding map of satellite ID is shown in Figure 2, which represents typical spatial coverage from different satellites. Most of the equatorial regions are covered by GEO satellites, while the polar regions are represented by Terra and Aqua, because MODIS was initially given a higher $F_{\mathrm{resolution}}$ factor than AVHRR. Similarly, the MET-7 data were assigned a lower $F_{\mathrm{resolution}}$ factor to account for their lower quality, and therefore the MET-7 coverage automatically shrinks and is more likely to

Figure 2. A map of satellite coverage in a global composite generated for Sep-15-2015 13:23 UTC.

Figure 3. A map of the time relative to the nominal time for the case of Sep-15-2015 13:23 UTC. Pale colors correspond to smaller time differences and bright colors to larger ones.